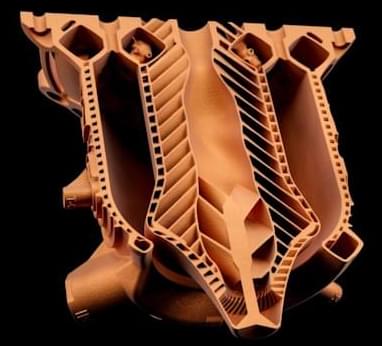

Aerospike-style engines haven't been used more widely in the past largely because they are difficult to design and manufacture. Although they are generally thought to have the potential to be more efficient, they also require intricate cooling channels to keep the spike from overheating. Josefine Lissner, CEO and Co-Founder of LEAP 71, credits the company's computational AI, Noyron, with making these advancements possible.

“We were able to extend Noyron’s physics to deal with the unique complexity of this engine type. The spike is cooled by intricate cooling channels flooded by cryogenic oxygen, whereas the outside of the chamber is cooled by the kerosene fuel,” said Lissner. “I am very encouraged by the results of this test, as virtually everything on the engine was novel and untested. It’s a great validation of our physics-driven approach to computational AI.”

Lin Kayser, Co-Founder of LEAP 71, also believes the AI was essential to achieving the complex design, explaining: “Despite their clear advantages, Aerospikes are not used in space access today. We want to change that. Noyron allows us to radically cut the time we need to re-engineer and iterate after a test and enables us to converge rapidly on an optimal design.”