Neuron-powered computer chips can now be easily programmed to play a first-person shooter game, bringing biological computers a step closer to useful applications

Three centuries after Newton described the universe through fixed laws and deterministic equations, science may be entering an entirely new phase.

According to biochemist and complex systems theorist Stuart Kauffman and computer scientist Andrea Roli, the biosphere is not a predictable, clockwork system. Instead, it is a self-organising, ever-evolving web of life that cannot be fully captured by mathematical models.

Organisms reshape their environments in ways that are fundamentally unpredictable. These processes, Kauffman and Roli argue, take place in what they call a “Domain of No Laws.”

This challenges the very foundation of scientific thought. Reality, they suggest, may not be governed by universal laws at all—and it is biology, not physics, that could hold the answers.


How does the brain know which neurons to adjust during learning in order to optimize behavior? MIT researchers discovered that brains can use cell-by-cell error signals to do this — surprisingly similar to how AI systems are trained via backpropagation.

When we learn a new skill, the brain has to decide—cell by cell—what to change. New research from MIT suggests it can do that with surprising precision, sending targeted feedback to individual neurons so each one can adjust its activity in the right direction.

The finding echoes a key idea from modern artificial intelligence. Many AI systems learn by comparing their output to a target, computing an “error” signal, and using it to fine-tune connections within the network. A longstanding question has been whether the brain also uses that kind of individualized feedback. In a study published in the February 25 issue of the journal Nature, MIT researchers report evidence that it does.
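As an illustration of the AI side of this comparison, the following is a minimal sketch of error-driven learning for a single linear layer. It is not the model used in the MIT study. Each output "neuron" gets its own error (output minus target), and only that neuron's incoming weights are adjusted by it. For one layer, this "delta rule" is exactly gradient descent on squared error, which backpropagation extends to deeper networks. The network size, inputs, targets, and learning rate are hypothetical.

```python
# Minimal sketch (toy example, not the MIT study's model): individualized
# error signals for one linear layer. Each output neuron receives its own
# error (output minus target), and only that neuron's incoming weights are
# nudged by it. For a single layer this "delta rule" is exactly gradient
# descent on squared error, the step backpropagation generalizes to
# deeper networks.


def forward(weights, x):
    """Each output neuron computes a weighted sum of the inputs."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in weights]


def train_step(weights, x, target, lr=0.1):
    """Compare output to target, then adjust each neuron by its own error.

    Returns the updated weights and the squared-error loss measured
    before the update.
    """
    y = forward(weights, x)
    # One error value per output neuron: the cell-by-cell feedback signal.
    errors = [yi - ti for yi, ti in zip(y, target)]
    # Move each weight against the gradient of 0.5 * error**2,
    # which is error * input for a linear neuron.
    new_weights = [
        [w - lr * err * xj for w, xj in zip(row, x)]
        for row, err in zip(weights, errors)
    ]
    loss = 0.5 * sum(e * e for e in errors)
    return new_weights, loss


if __name__ == "__main__":
    # Hypothetical toy task: two output neurons, three inputs.
    weights = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    x = [1.0, 0.5, -1.0]
    target = [1.0, -2.0]
    for _ in range(50):
        weights, loss = train_step(weights, x, target)
    print("output:", forward(weights, x), "last loss:", loss)
```

Because every weight update uses the error of the neuron that owns it, a neuron whose output is already on target receives no change, which is the kind of targeted, per-cell feedback the study looked for in the brain.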

A research team led by Mark Harnett, a McGovern Institute investigator and associate professor in the Department of Brain and Cognitive Sciences at MIT, discovered these instructive signals in mice by training animals to control the activity of specific neurons using a brain-computer interface (BCI). Their approach, the researchers say, can be used to further study the relationships between artificial neural networks and real brains, in ways that are expected to both improve understanding of biological learning and enable better brain-inspired artificial intelligence.

Xu et al. reveal that co-infection of nematodes and pathogens is a global phenomenon. Root-knot nematodes reprogram rhizosphere metabolism, reducing defensive tomatidine while increasing sugars that reshape the rhizosphere microbiome. These changes suppress antagonistic microbes and promote pathogen proliferation, which enhances nematode survival and gall formation, leading to complex co-infection dynamics.

In response to changes in illumination, a swimming microorganism reverses the direction of its circular trajectory by tilting its flagella’s planes of motion.

Many microorganisms adjust their swimming trajectories in response to environmental signals such as nutrients or light. Researchers have now discovered a new mode of such behavior in a species of green algae [1]. The microbes swim in wide circles when illuminated and switch from counterclockwise (CCW) to clockwise (CW) swimming when the light intensity is above a threshold value. The researchers determined how this change is generated by the algae’s two whip-like flagella. They say that the results reveal a new navigation strategy that microorganisms can use to find optimal environments.
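The switching behavior can be pictured with a toy kinematic sketch. This is not the researchers' model: it ignores the flagella entirely and reduces their tilting to the sign of a constant turning rate. The threshold, speed, and turning rate are hypothetical. The cell turns counterclockwise below the light threshold and clockwise above it, and the shoelace formula's signed area of the resulting loop reports which way it circled.

```python
import math


def swim_circle(light_intensity, threshold=1.0, speed=1.0,
                turn_rate=2 * math.pi / 360, steps=360):
    """Trace one loop of a cell swimming at constant speed and turning rate.

    Toy assumption: the turning direction is set only by whether the
    light intensity exceeds a (hypothetical) threshold.
    """
    # Positive turning = counterclockwise (CCW); negative = clockwise (CW).
    omega = -turn_rate if light_intensity > threshold else turn_rate
    x = y = heading = 0.0
    path = [(x, y)]
    for _ in range(steps):
        heading += omega
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
        path.append((x, y))
    return path


def signed_area(path):
    """Shoelace formula: positive for CCW loops, negative for CW loops."""
    closed = path[1:] + path[:1]
    return 0.5 * sum(x0 * y1 - x1 * y0
                     for (x0, y0), (x1, y1) in zip(path, closed))


def direction(path):
    return "CCW" if signed_area(path) > 0 else "CW"


if __name__ == "__main__":
    print("dim light:", direction(swim_circle(0.5)))
    print("bright light:", direction(swim_circle(2.0)))
```

In this simplification a single sign flip reverses the circle while leaving its radius (speed divided by turning rate) unchanged; how the real cell produces that flip with its two flagella is what the study addresses.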

The single-celled green alga Chlamydomonas reinhardtii is photosynthetic and moves toward light by beating its two flagella, situated close together on its front surface, in a breaststroke pattern. In 2021, Kirsty Wan and Dario Cortese of the University of Exeter in the UK figured out the beating pattern that produces the microbe’s typical corkscrew-shaped trajectory, which follows a tight helix [2]. They showed how changing the frequency, amplitude, and synchronization of the flagellar beating allows the cell to change the overall direction of motion, perhaps to steer it toward or away from a light source and optimize the intensity of light it receives.

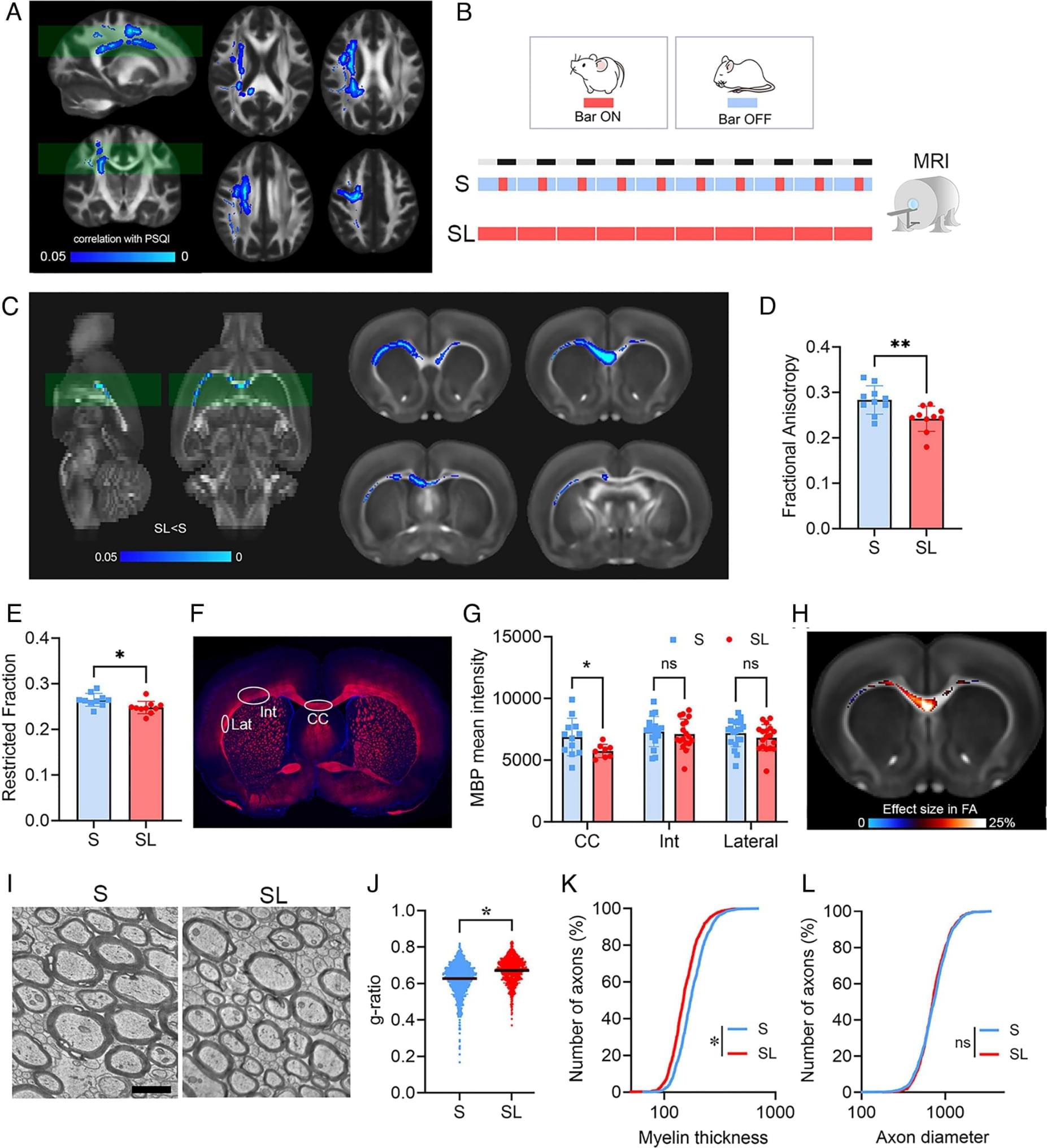

The increasing prevalence of sleep deprivation poses a public health challenge in modern society. Manifestations of reduced alertness, such as slowed reaction times and increased errors, are well-documented behavioral indicators of sleep loss (SL). Yet, the biological consequences of sleep deprivation and their role in behavioral impairment remain elusive. Our study reveals significant effects of sleep deprivation on myelin integrity. As a result, we identify increased conduction delays in nerve signal propagation, hindered interhemispheric synchronization, and impaired cognitive and motor performance associated with SL. By profiling the oligodendrocyte transcriptome and lipidome, we observe SL-induced endoplasmic reticulum stress and lipid metabolism dysregulation, particularly affecting cholesterol homeostasis.

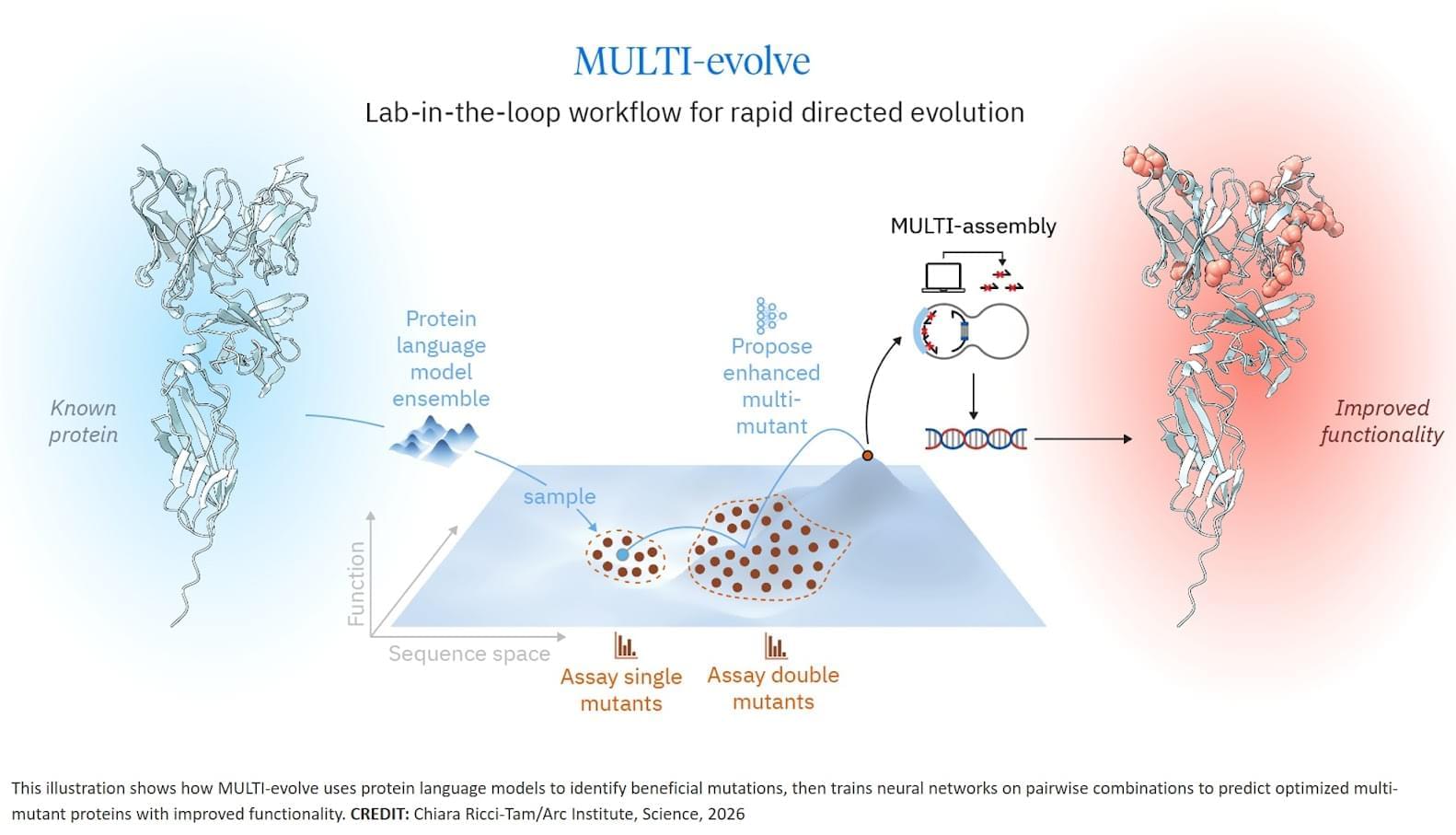

The researchers developed MULTI-evolve, a framework for efficient protein evolution that applies machine learning models trained on datasets of ~200 variants focused specifically on pairs of function-enhancing mutations.

Published in Science, this work represents the first lab-in-the-loop framework for biological design, where computational prediction and experimental design are tightly integrated from the outset, reflecting our broader investment in AI-guided research.

Our insight was to focus on quality over quantity. First identify ~15–20 function-enhancing mutations (using protein language models or experimental screens), then systematically test all pairwise combinations of those beneficial mutations. This generates ~100–200 measurements, and every one is informative for learning beneficial epistatic interactions.
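The numbers follow from simple counting: testing every pair of n beneficial mutations requires n(n-1)/2 double mutants, so 15 mutations give 105 variants and 20 give 190. The sketch below shows that counting plus a selection step. The mutation names, the scores, and the convention that a score above 0 means "better than wild type" are all hypothetical.

```python
from itertools import combinations
from math import comb


def select_beneficial(scores, min_gain=0.0):
    """Keep mutations whose measured effect beats wild type.

    Assumes (hypothetically) that scores are relative to wild type,
    so a score above min_gain means function-enhancing.
    """
    return sorted(m for m, s in scores.items() if s > min_gain)


def double_mutant_library(beneficial):
    """Every unordered pair of beneficial mutations: n choose 2 variants."""
    return list(combinations(beneficial, 2))


if __name__ == "__main__":
    for n in (15, 20):
        print(f"{n} beneficial mutations -> {comb(n, 2)} double mutants")

    # Hypothetical screen results (names and values are made up).
    scores = {"A12V": 0.4, "K35R": -0.2, "L88F": 0.9, "G101S": 0.1}
    beneficial = select_beneficial(scores)
    print(beneficial, double_mutant_library(beneficial))
```

The first loop prints "15 beneficial mutations -> 105 double mutants" and "20 beneficial mutations -> 190 double mutants", matching the ~100–200 measurements described above.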

We validated this computationally using 12 existing protein datasets from published studies. Training neural networks on only the single and double mutants, we found models could accurately predict complex multi-mutants (variants with 3–12 mutations) across all 12 diverse protein families. This result held even when we reduced training data to just 10% of what was available.

Training on double mutants works because they reveal epistasis. A double mutant might perform better than the sum of its parts (synergy), worse than expected (antagonism), or exactly as predicted (additivity). These pairwise interaction patterns teach models the rules for how mutations combine, enabling extrapolation to predict which 5-, 6-, or 7-mutation combinations will work synergistically.
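To make synergy, antagonism, and additivity concrete, the sketch below scores each double mutant against the additive expectation and then extrapolates to a larger combination with a simple pairwise (second-order) model. This is a deliberately simpler stand-in, not the neural networks used in MULTI-evolve. It assumes effects are measured on a log scale relative to wild type, where independent mutations add. The mutation labels, values, and the tolerance for "additive" are hypothetical.

```python
from itertools import combinations


def epistasis(single, double, a, b):
    """Deviation of the a+b double mutant from the additive expectation."""
    return double[frozenset((a, b))] - (single[a] + single[b])


def classify(eps, tol=0.05):
    """Label a pair by the sign of its epistasis (tol is a hypothetical cutoff)."""
    if eps > tol:
        return "synergy"
    if eps < -tol:
        return "antagonism"
    return "additivity"


def predict_multi(single, double, mutations):
    """Pairwise model: sum of single effects plus every measured pairwise term.

    Pairs without a measurement are treated as additive (epistasis 0).
    """
    total = sum(single[m] for m in mutations)
    for a, b in combinations(mutations, 2):
        if frozenset((a, b)) in double:
            total += epistasis(single, double, a, b)
    return total


if __name__ == "__main__":
    # Hypothetical log-scale effects relative to wild type.
    single = {"A": 0.5, "B": 0.3, "C": 0.2}
    double = {
        frozenset(("A", "B")): 1.0,  # better than 0.8 expected
        frozenset(("A", "C")): 0.5,  # worse than 0.7 expected
        frozenset(("B", "C")): 0.5,  # matches 0.5 expected
    }
    for a, b in combinations(sorted(single), 2):
        print(a, b, classify(epistasis(single, double, a, b)))
    print("predicted A+B+C:", predict_multi(single, double, ["A", "B", "C"]))
```

Here the A+B synergy and A+C antagonism cancel, so the triple mutant is predicted near 1.0; a learned model can capture richer, higher-order patterns than this sum of pairwise terms.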

We then applied MULTI-evolve to three new proteins: APEX (up to 256-fold improvement over wild-type, 4.8-fold beyond already-optimized APEX2), dCasRx for trans-splicing (up to 9.8-fold improvement), and an anti-CD122 antibody (2.7-fold binding improvement to 1.0 nM, 6.5-fold expression increase). For dCasRx, we started with a deep mutational scan of 11,000 variants, extracted only the function-enhancing mutations, and tested their pairwise combinations—demonstrating the value of strategic data curation for efficient engineering.

Each required experimentally testing only ~100–200 variants in a single round to train models that accurately predicted complex multi-mutants, compressing what traditionally takes 5–10 iterative cycles over many months into weeks.

Plant owners with a so-called green thumb often seem to have a more finely tuned sense of what their plants need than the rest of us. A new “smart lighting” system for indoor vertical farms grants this ability on a facility-wide scale, responsively meeting plants’ needs while reducing energy inefficiencies, clearing a path for indoor farms as an energy-efficient food security strategy.

The system was designed and tested in a study led by Professor of Plant Biology Tracy Lawson, who conducted the research at the University of Essex and is now a member of the Carl R. Woese Institute for Genomic Biology at the University of Illinois Urbana-Champaign. The work, published in Smart Agricultural Technology, emerged from her goal to help establish the viability of vertical farming for large-scale food production.

“One of the key aspects of [vertical farming], of course, is the energy cost associated with using LED lighting,” Lawson said. “So that’s where it all started, trying to save energy.”