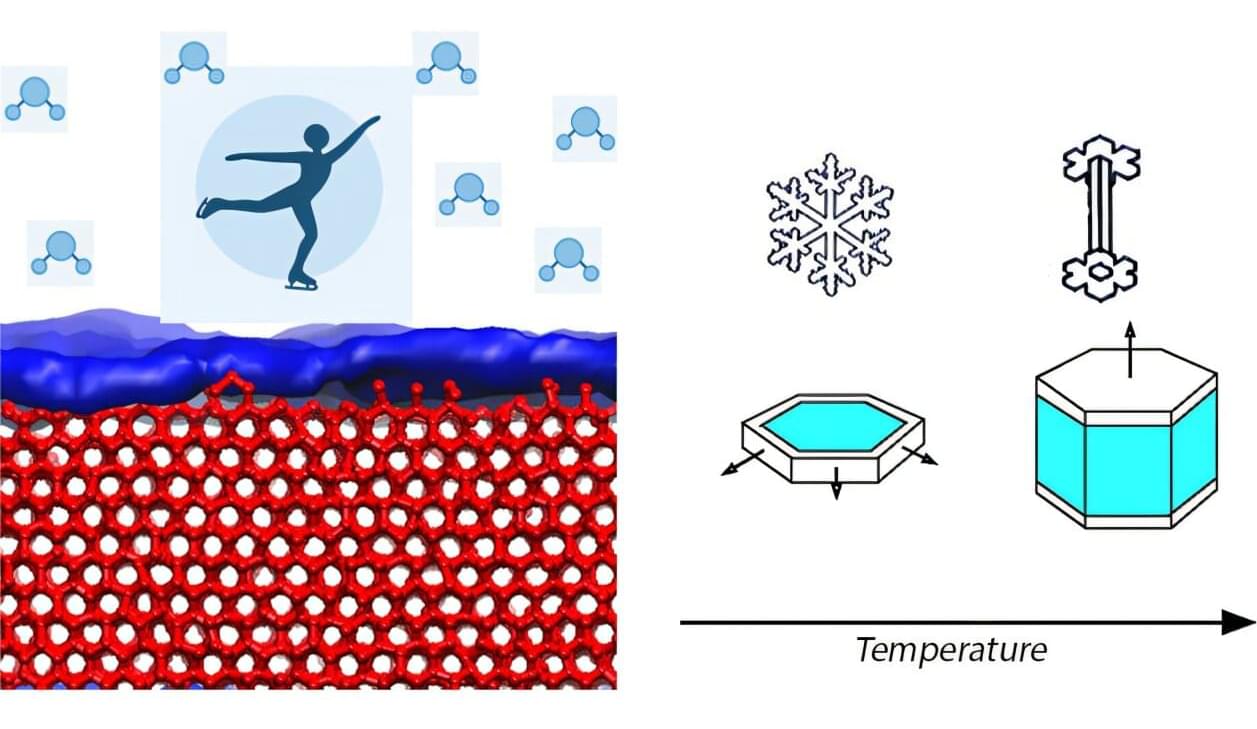

For decades, analyzing the chemical composition of materials, whether to diagnose a disease, assess food quality, or monitor pollution, has depended on large, expensive laboratory instruments called spectrometers. These devices take in light, spread it into a rainbow with a prism or diffraction grating, and measure the intensity of each color. The problem is that spreading light far enough to separate closely spaced colors requires a long physical path, which makes the instrument inherently bulky.

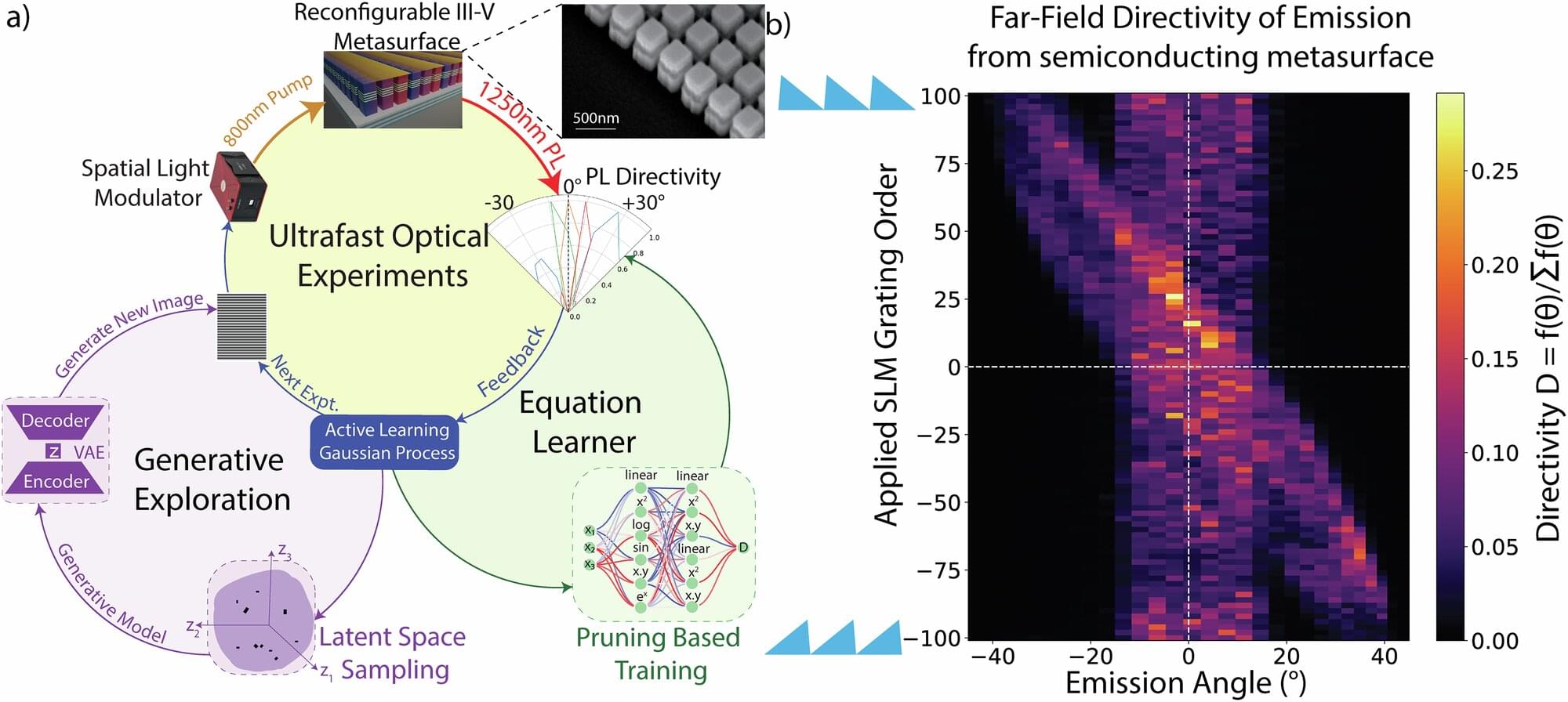

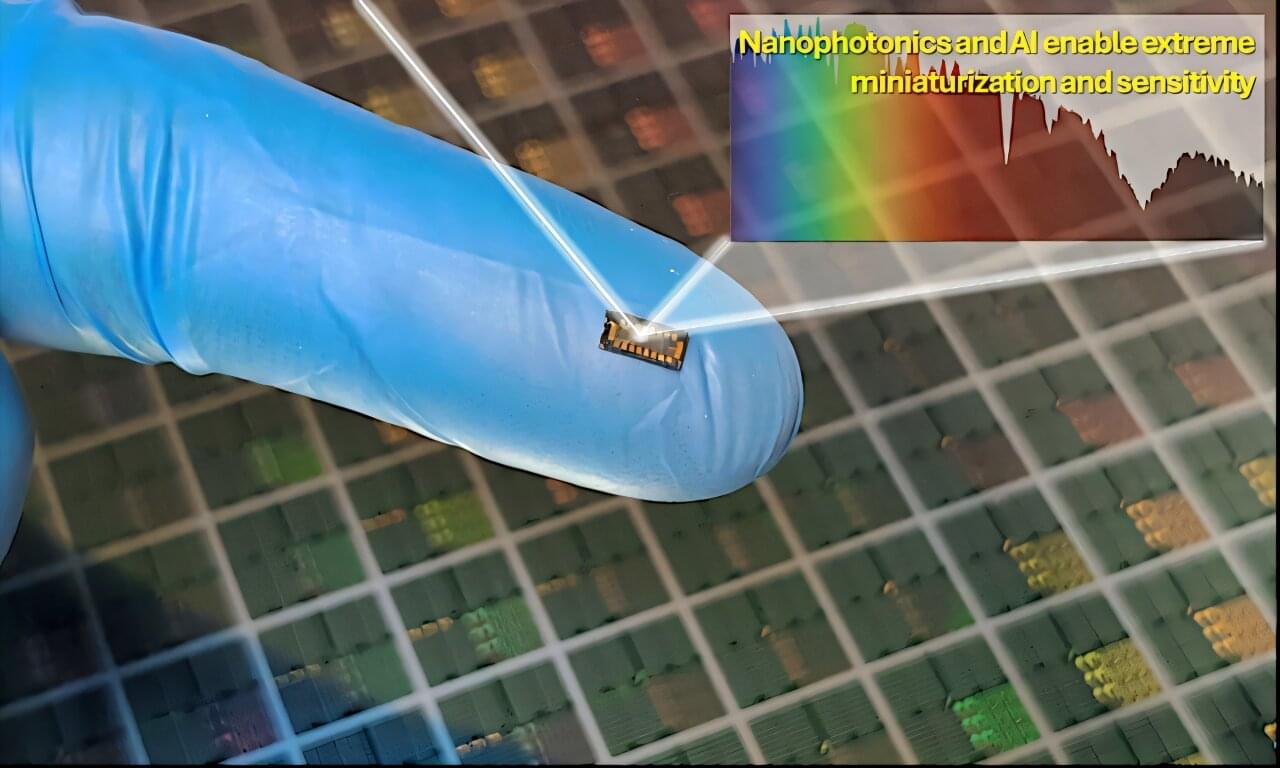

A recent study from the University of California, Davis (UC Davis), reported in *Advanced Photonics*, tackles this miniaturization challenge. The goal is to shrink a lab-grade spectrometer to the size of a grain of sand: a tiny spectrometer-on-a-chip that can be built into portable devices. To get there, the researchers abandon the traditional approach of spatially spreading light in favor of a reconstructive method.

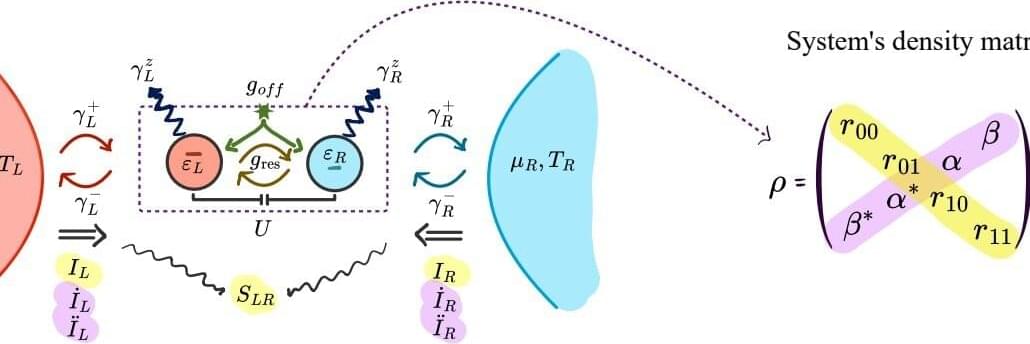

Instead of physically separating each color, the new chip uses only 16 silicon detectors, each engineered to respond slightly differently to incoming light. Think of handing a mixed drink to a handful of specialized tasters, each of whom picks up a different aspect of its flavor. Deciphering the original recipe from those 16 readings is the job of the second part of the invention: artificial intelligence (AI).
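To make the "recipe" idea concrete, here is a minimal sketch of reconstructive spectroscopy. Each detector reading is modeled as a weighted sum of the incoming spectrum, where the weights are that detector's spectral response, and the spectrum is then estimated from the 16 readings. The study uses AI for this decoding step; this sketch swaps in classical Tikhonov (ridge) regression instead, and it is not the study's method. The Gaussian detector responses, the 32 wavelength bins, the regularization strength `alpha`, and all function names are hypothetical choices made for illustration.

```python
import math

N_BINS = 32       # hypothetical number of wavelength bins to recover
N_DETECTORS = 16  # detector count from the article


def detector_responses(n_det=N_DETECTORS, n_bins=N_BINS):
    """Hypothetical broadband responses: each detector peaks at a different
    wavelength bin but overlaps heavily with its neighbours."""
    responses = []
    for i in range(n_det):
        center = (i + 0.5) * n_bins / n_det
        width = n_bins / 4
        responses.append([math.exp(-((j - center) / width) ** 2) for j in range(n_bins)])
    return responses


def measure(responses, spectrum):
    """Forward model: each detector reports a response-weighted sum of the spectrum."""
    return [sum(r * s for r, s in zip(row, spectrum)) for row in responses]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def reconstruct(responses, readings, alpha=1e-3):
    """Ridge estimate of the spectrum: minimise ||R s - y||^2 + alpha * ||s||^2
    by solving the normal equations (R^T R + alpha I) s = R^T y.
    The alpha term keeps the problem solvable with fewer detectors than bins."""
    n = len(responses[0])
    normal = [[sum(row[i] * row[j] for row in responses) + (alpha if i == j else 0.0)
               for j in range(n)] for i in range(n)]
    rhs = [sum(row[i] * y for row, y in zip(responses, readings)) for i in range(n)]
    return solve(normal, rhs)


if __name__ == "__main__":
    R = detector_responses()
    # A made-up test spectrum with a single smooth peak near bin 12.
    true_spectrum = [math.exp(-((j - 12) / 5) ** 2) for j in range(N_BINS)]
    readings = measure(R, true_spectrum)  # 16 numbers instead of 32
    estimate = reconstruct(R, readings)
    worst = max(abs(e - t) for e, t in zip(estimate, true_spectrum))
    print(f"max per-bin error: {worst:.3f}")
```

The regularization term encodes a prior about what plausible spectra look like. A learned model, like the AI the study describes, can capture much richer priors than this simple penalty on spectrum size, which is one reason a data-driven decoder can recover more detail from so few detectors.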