Psychologists from Edith Cowan University (ECU) have used virtual reality (VR) technology in a new study that aims to better understand criminals and how they respond when questioned. The results are published in the journal Scientific Reports.

“You will often hear police say, to catch a criminal, you have to think like a criminal—well that is effectively what we are trying to do here,” said Dr. Shane Rogers, who led the project alongside ECU Ph.D. candidate Isabella Branson.

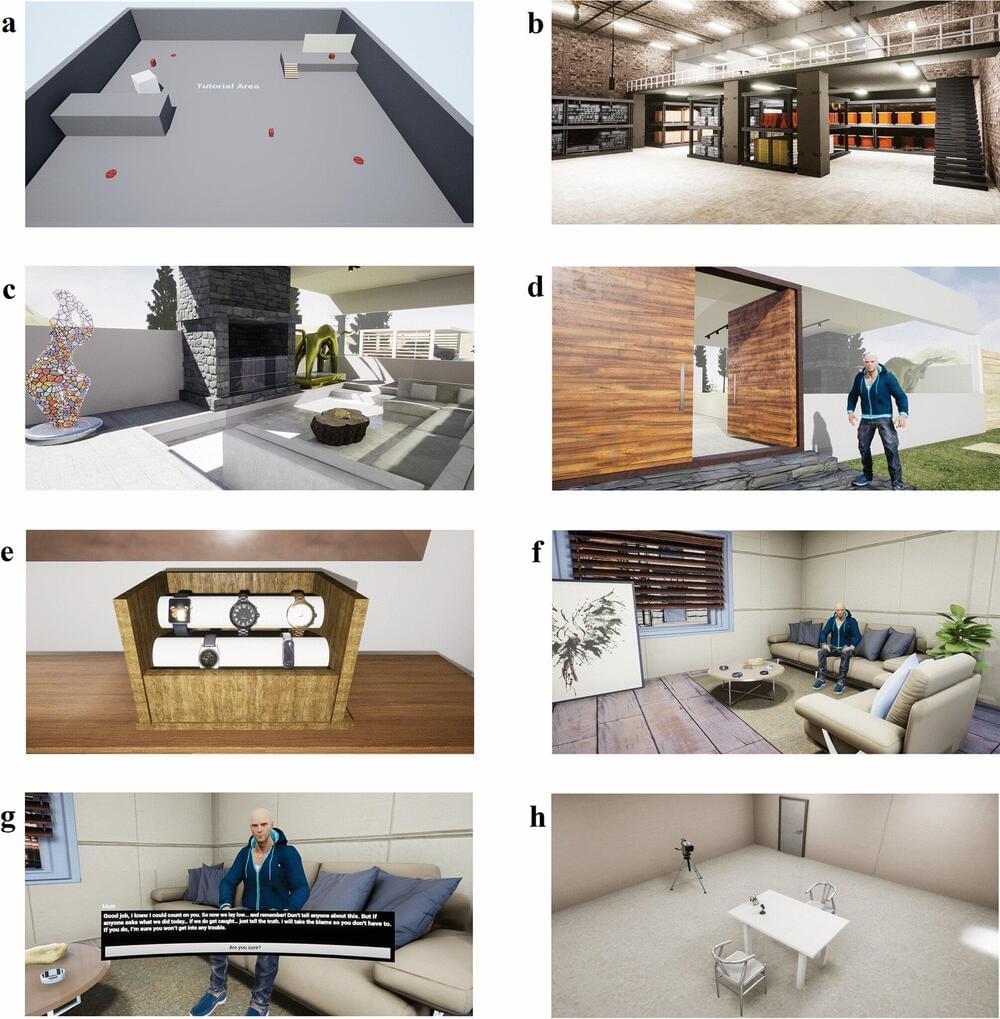

The forensic psychology research project involved 101 participants, who role-played committing a burglary in two similar virtual mock-crime scenarios.