Researchers have developed a 3D full-color display method that uses a smartphone screen rather than a laser to create holographic images. With further development, the new approach could be useful for augmented or virtual reality displays.

AR smart glasses: 2029. They will look like a normal pair of sunglasses, with all the usual smartphone features, built-in AI systems, and support for some VR content. A built-in earbud and mic for calls, music, talking to the AI, etc. They may need a battery pack; we'll see in 2029.

The smart glasses will soon come with a built-in assistant.

The pursuit of artificial intelligence that can navigate and comprehend the intricacies of three-dimensional environments with the ease and adaptability of humans has long been a frontier in technology. At the heart of this exploration is the ambition to create AI agents that not only perceive their surroundings but also follow complex instructions articulated in the language of their human creators. Researchers are pushing the boundaries of what AI can achieve by bridging the gap between abstract verbal commands and concrete actions within digital worlds.

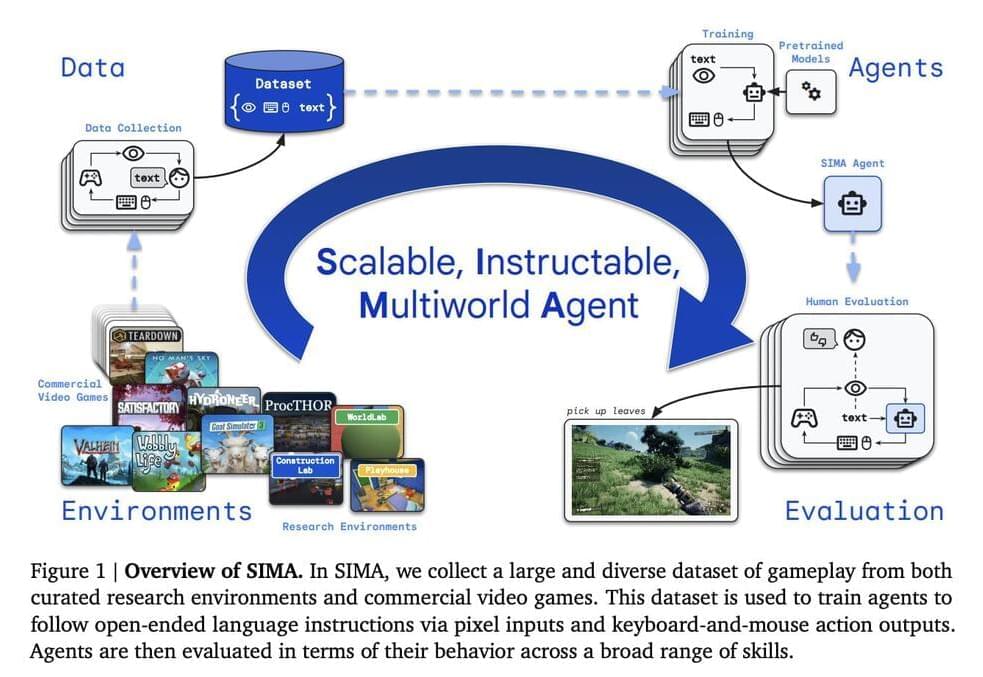

Researchers from Google DeepMind and the University of British Columbia present the Scalable, Instructable, Multiworld Agent (SIMA), a framework for training AI agents in diverse simulated 3D environments, from carefully designed research environments to the expansive worlds of commercial video games. What sets SIMA apart is its generality: rather than being tied to a single environment, it aims to understand and act on instructions in any virtual setting, which could change how people interact with AI.

Creating a versatile AI that can interpret and act on natural-language instructions is no small feat. Earlier AI systems were trained in specific environments, which limited their usefulness in new situations. SIMA takes a different approach: by training in many virtual settings, it learns to carry out a wide range of tasks and to link linguistic instructions with the appropriate actions. This makes it more adaptable and grounds its understanding of language in the context of different 3D spaces, a significant step forward in AI development.

Welcome back to Virtual Reality James! In this exciting video, we delve into the groundbreaking topic of Japan’s Anti-Aging Vaccine and explore the future of longevity. Join us as we uncover the latest scientific advancements and reveal how this revolutionary vaccine could potentially transform the way we age.

Discover the secrets behind this cutting-edge technology that aims to slow down the aging process and enhance our quality of life. We’ll explore the science behind the vaccine, its potential benefits, and the implications it may have for society as a whole.

Throughout the video, we’ll interview leading experts in the field, providing you with valuable insights and expert opinions. Learn about the research studies conducted, the promising results obtained, and the potential challenges that lie ahead.

Join us on this captivating journey as we explore the potential impact of Japan’s Anti-Aging Vaccine on our future. Don’t miss out on this opportunity to gain a deeper understanding of the advancements in longevity research and the possibilities they hold.

“We usually conform to the views of others for two reasons. First, we succumb to group pressure and want to gain social acceptance. Second, we lack sufficient knowledge and perceive the group as a source of a better interpretation of the current situation,” explains Dr. Konrad Bocian from the Institute of Psychology at SWPS University.

So far, only a few studies have investigated whether moral judgments, that is, evaluations of another person’s behavior in a given situation, are subject to group pressure. This issue was examined by scientists from SWPS University in collaboration with researchers from the University of Sussex and the University of Kent. The scientists also investigated how views about the behavior of others changed under pressure from avatars in a virtual environment. A paper on this topic has been published in PLOS ONE.

“Today, social influence is increasingly as potent in the digital world as in the real world. Therefore, it is necessary to determine how our judgments are shaped in the digital reality, where interactions take place online and some participants are avatars, not real humans,” points out Dr. Bocian.

Can virtual reality (VR) be tailored to let users explore larger areas and “walk” around their environment? A recent study published in IEEE Transactions on Visualization and Computer Graphics addresses this question: a team of international researchers has developed a new VR system called RedirectedDoors+ that lets users expand their virtual environments beyond real-world physical boundaries such as walls and doors. The system could not only expand VR environments but also drastically reduce the physical space typically required for VR experiences.

“Our system, which built upon an existing visuo-haptic door-opening redirection technique, allows participants to subtly manipulate the walking direction while opening doors in VR, guiding them away from real walls,” said Dr. Kazuyuki Fujita, who is an assistant professor in the Research Institute of Electrical Communication (RIEC) at Tohoku University and a co-author on the study. “At the same time, our system reproduces the realistic haptics of touching a doorknob, enhancing the quality of the experience.”

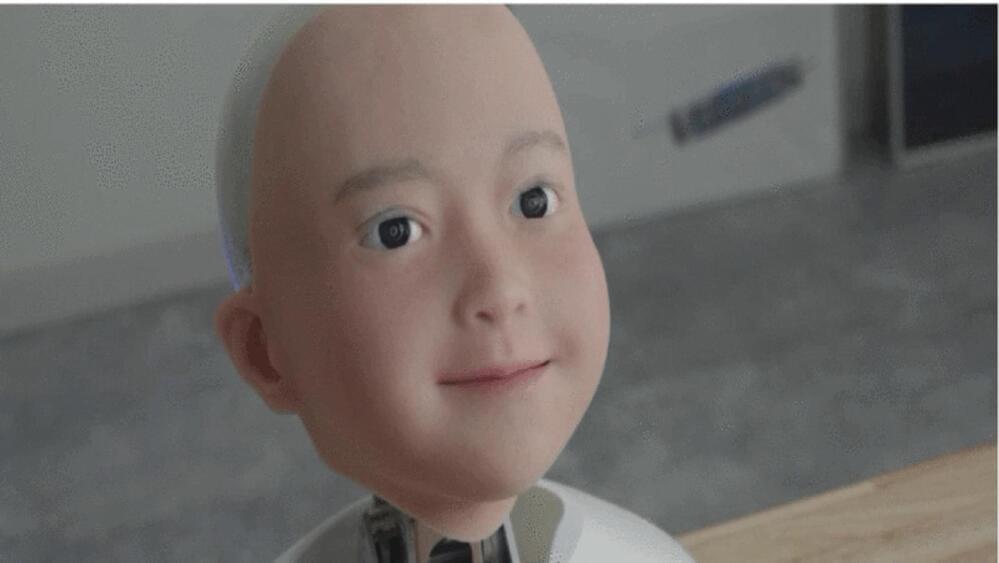

A team of researchers from Japan has made it possible for people to talk to each other through a robotic body, and they claim that it feels exactly like talking with a real person.

They built a half-humanoid robot called ‘Yui’, which is controlled by a real person wearing virtual reality goggles and a microphone headset. This gear lets the operator see and hear what Yui sees and hears, and captures the operator’s facial expressions and voice so that Yui can reproduce them.