Lex Fridman just interviewed Mark Zuckerberg in the Metaverse, in VR, using Meta’s photorealistic avatars. ‘This is really the most incredible thing I’ve ever seen,’ said the podcast host.

From attending a meeting to enjoying a live performance or, perhaps, taking a class at the University of Tokyo’s Metaverse School of Engineering, the application of virtual reality is expanding in our daily lives. Earlier this year, virtual reality technologies garnered attention as tech giants, including Meta and Apple, unveiled new VR/AR (virtual reality/augmented reality) headsets. We spoke with VR and AR specialist Takuji Narumi, an associate professor at the Graduate School of Information Science and Technology, to learn about his latest research and what VR’s future has to offer.

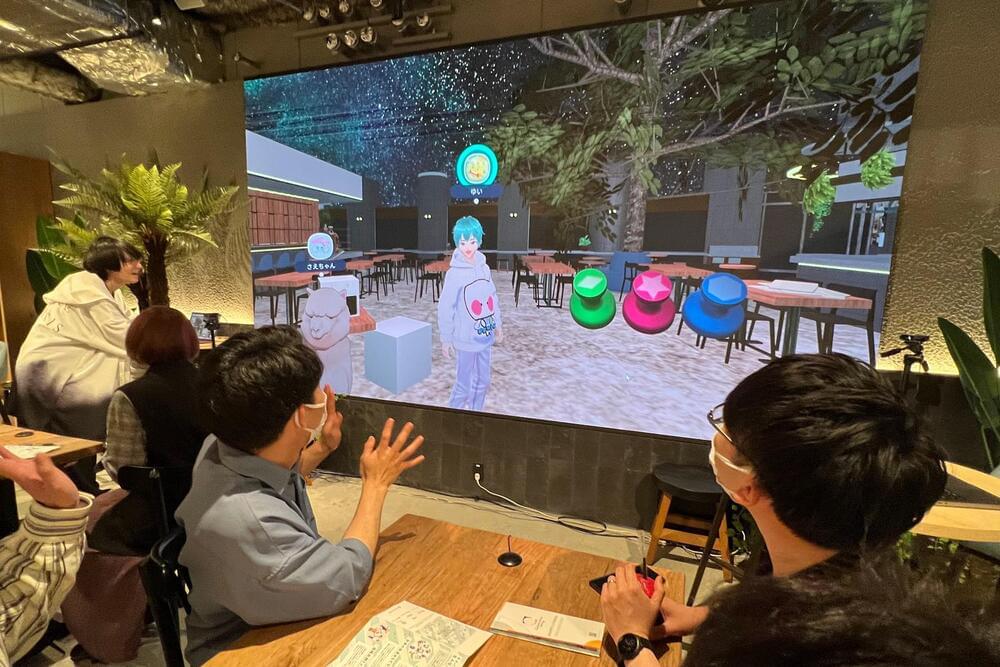

At the Avatar Robot Café DAWN ver. β, employees serve customers via a digital screen and engage in conversation using avatars of their choice, such as an alpaca and a man with blue hair.

Meta CEO Mark Zuckerberg reveals the technology behind codec avatars, which create ultra-realistic VR faces.

Meta, formerly known as Facebook, has been struggling to convince the world that its vision of the metaverse is worth pursuing. The social media giant rebranded itself in October 2021, hoping to create a more immersive and interactive online experience for its users. However, the initial response was far from positive. Many people mocked the cartoonish and unrealistic avatars that Meta showcased in its demonstration video, which lasted for over an hour. Others questioned the need and feasibility of creating a virtual world that mimics real life.


Credits: Lex Fridman/YouTube.

Meta’s ambitious project also faced financial challenges. The company reported a loss of $4.28 billion in the first quarter of 2022, with its revenue from Meta Reality Labs, the division responsible for developing the metaverse, being much lower than expected. Meta also faced competition from other tech companies, such as Microsoft and Apple, that were working on their own versions of augmented and virtual reality.

People will laugh and dismiss it and make comparisons to Google’s clown glasses. But around 2030 Augmented Reality glasses will come out. Basically, it will be a pair of normal looking sunglasses w/ smart phone type features, AI, AND… VR stuff.

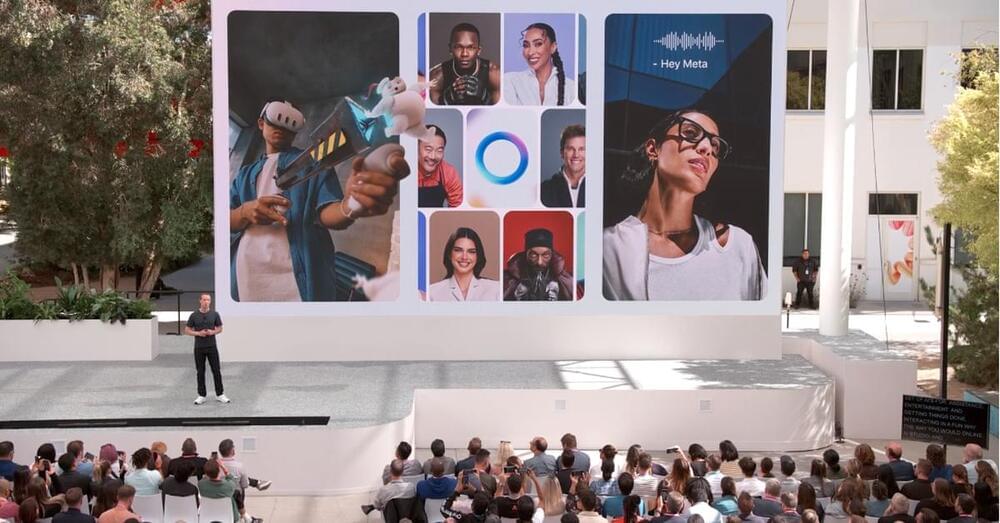

Meta chief Mark Zuckerberg on Wednesday said the tech giant is putting artificial intelligence into digital assistants and smart glasses as it seeks to gain lost ground in the AI race.

Zuckerberg made his announcements at the Connect developers conference at Meta’s headquarters in Silicon Valley, the company’s main annual product event.

“Advances in AI allow us to create different (applications) and personas that help us accomplish different things,” Zuckerberg said as he kicked off the gathering.

AI? VR? The term might just refer to whatever Meta is doing now.

Almost two years ago, Mark Zuckerberg rebranded his company Facebook to Meta — and since then, he has been focused on building the “metaverse,” a three-dimensional virtual reality. But the metaverse has lost some of its luster since 2021. Companies like Disney have closed down their metaverse divisions and deemphasized using the word, while crypto-based startup metaverses have quietly languished or imploded. In 2022, Meta’s Reality Labs division reported an operational loss of $13.7 billion.

But at Meta Connect 2023, Zuckerberg still hasn’t given up on the metaverse. He’s just shifted how he talks about it. He once focused on…

Meta launches Quest 3 and continues to focus on the metaverse — even though the market isn’t as positive. Zuckerberg shows a shift in his thinking about the concept.

YouTuber Lucas VRTech has designed and built a pair of finger-tracking VR gloves using just $22 in materials — and he’s released all the details on the build so others can make their own.

The challenge: We use our hands to manipulate objects in the real world, but in VR, users typically have to use controllers with buttons and joysticks.

That breaks some of the immersion, limiting the use of VR for not only gaming, but also applications like therapy and job training.

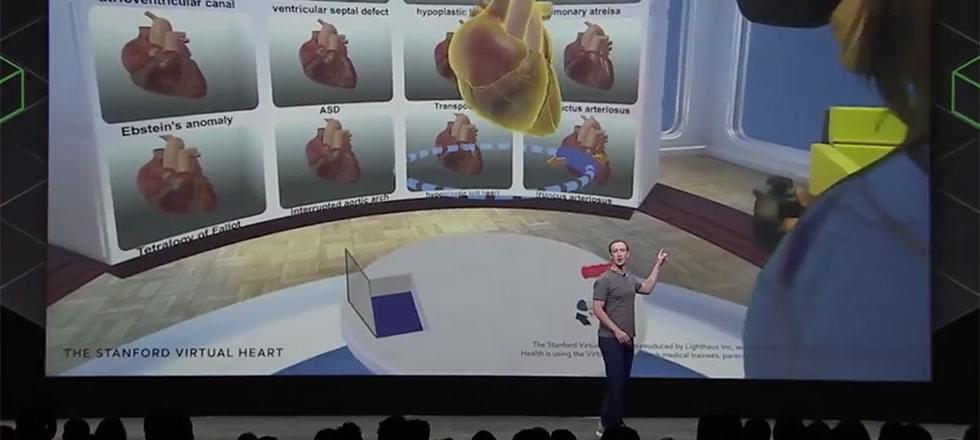

Pediatric specialists at Lucile Packard Children’s Hospital Stanford are implementing innovative uses for immersive virtual reality (VR) and augmented reality (AR) technologies to advance patient care and improve the patient experience.

Through the hospital’s CHARIOT program, Packard Children’s is one of the only hospitals in the world to have VR available on every unit to help engage and distract patients undergoing a range of hospital procedures. Within the Betty Irene Moore Children’s Heart Center, three unique VR projects are influencing medical education for congenital heart defects, preparing patients for procedures and aiding surgeons in the operating room. And for patients and providers looking to learn more about some of the therapies offered within our Fetal and Pregnancy Health Program, a new VR simulation helps them understand the treatments at a much closer level.

Please attend our Virtual Reality Ending Aging Forum!

This event will showcase the newest breakthroughs in rejuvenation biotechnologies happening at the SENS Research Foundation’s Research Center in Mountain View, CA, as well as the research funded at extramural labs.

The Forum will be hosted virtually through Meetaverse, a state-of-the-art Virtual Reality platform.

This virtual event is your opportunity to hear first-hand about the latest advances that our in-house researchers are making toward new rejuvenation biotechnologies, along with some of our young scientists-in-training and outside researchers whose research we fund.

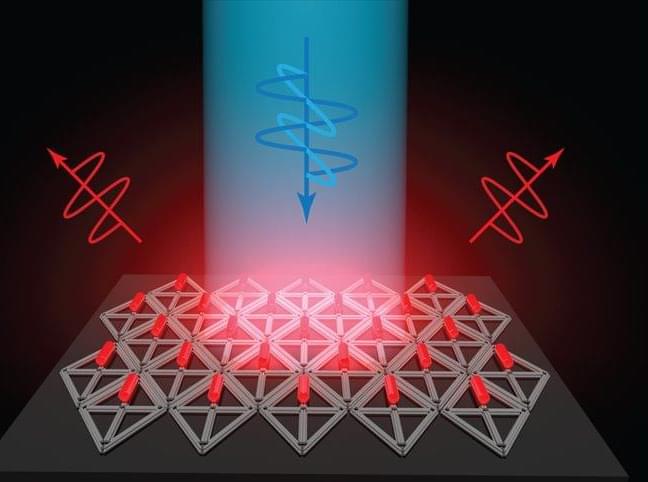

Flat screen TVs that incorporate quantum dots are now commercially available, but it has been more difficult to create arrays of their elongated cousins, quantum rods, for commercial devices. Quantum rods can control both the polarization and color of light, to generate 3D images for virtual reality devices.

Using scaffolds made of folded DNA, MIT engineers have come up with a new way to precisely assemble arrays of quantum rods. By depositing quantum rods onto a DNA scaffold in a highly controlled way, the researchers can regulate their orientation, which is a key factor in determining the polarization of light emitted by the array. This makes it easier to add depth and dimensionality to a virtual scene.

“One of the challenges with quantum rods is: How do you align them all at the nanoscale so they’re all pointing in the same direction?” says Mark Bathe, an MIT professor of biological engineering and the senior author of the new study. “When they’re all pointing in the same direction on a 2D surface, then they all have the same properties of how they interact with light and control its polarization.”

This talk is about how you can use wireless signals and fuse them with vision and other sensing modalities through AI algorithms to give humans and robots X-ray vision to see objects hidden inside boxes or behind other objects.

Tara Boroushaki is a Ph.D. student at MIT. Her research focuses on fusing radio frequency (RF) sensing with vision through artificial intelligence. She designs algorithms and builds systems that leverage such fusion to enable capabilities that were not feasible before in applications spanning augmented reality, virtual reality, robotics, smart homes, and smart manufacturing. This talk was given at a TEDx event using the TED conference format but independently organized by a local community.