
Between at least 1995 and 2010, I was seen as a lunatic just because I was preaching the “Internet prophecy.” I was considered crazy!

Today history repeats itself, but I’m no longer crazy — we are already too many to all be hallucinating. Or maybe it’s a collective hallucination!

Artificial Intelligence (AI) is no longer a novelty — I even believe it may have existed in its fullness in a very distant and forgotten past! Nevertheless, it is now the topic of the moment.

Its genesis began in antiquity with stories and rumors of artificial beings endowed with intelligence, or even consciousness, by their creators.

Pamela McCorduck (1940–2021), an American author of several books on the history and philosophical significance of Artificial Intelligence, astutely observed that the root of AI lies in an “ancient desire to forge the gods.”

Hmmmm!

It’s a story that continues to be written! There is still much to be told; however, the acceleration of its evolution is now exponential. So exponential that I highly doubt that human beings will be able to comprehend their own creation in a timely manner.

Although the term “Artificial Intelligence” was coined in 1956(1), the concept of creating intelligent machines dates back to antiquity. Humanity has long nurtured a fascination with building artifacts that could imitate or reproduce human intelligence. Although the technologies of the time were limited and the notions of AI were far from developed, ancient civilizations explored the concept of automatons and automated mechanisms.

For example, Ancient Greek stories describe automatons created by skilled artisans. These mechanical creatures were designed to perform simple and repetitive tasks, imitating basic human actions. Although they did not possess true intelligence, they fueled people’s imagination and laid the groundwork for the development of intelligent machines.

Throughout the centuries, the idea of building intelligent machines continued to evolve, driven by advances in science and technology. In the 19th century, scientists and inventors such as Charles Babbage and Ada Lovelace made significant contributions to the development of computing and the early concepts of programming. Their ideas paved the way for the creation of machines that could process information logically and perform complex tasks.

It was in the second half of the 20th century that AI, as a scientific discipline, began to establish itself. With the advent of modern computers and increasing processing power, scientists started exploring algorithms and techniques to simulate aspects of human intelligence. The first experiments with expert systems and machine learning opened up new perspectives and possibilities.

Everything has its moment! After about 60 years in a latent state, AI is starting to have its moment. The power of machines, combined with the Internet, has made it possible to generate and explore enormous amounts of data (Big Data) using deep learning techniques, based on the use of formal neural networks(2). A range of applications in various fields — including voice and image recognition, natural language understanding, and autonomous cars — has awakened the “giant”. It is the rebirth of AI in an ideal era for this purpose. The perfect moment!

Descartes once described the human body as a “machine of flesh” (similar to Westworld); I believe he was right, and it is indeed an existential paradox!

We, as human beings, will not rest until we unravel all the mysteries and secrets of existence; it’s in our nature!

The imminent integration between humans and machines in a contemporary digital world raises questions about the nature of this fusion. Will it be superficial, or will we move towards an absolute and complete union? The answer to this question is essential for understanding the future that awaits humanity in this era of unprecedented technological advancements.

As technology becomes increasingly ubiquitous in our lives, the interaction between machines and humans becomes inevitable. However, an intriguing dilemma arises: how will this interaction, this relationship unfold?

Opting for a superficial fusion would imply mere coexistence, where humans continue to use technology as an external tool, limited to superficial and transactional interactions.

On the other hand, the prospect of an absolute fusion between machine and human sparks futuristic visions, where humans could enhance their physical and mental capacities to the highest degree through cybernetic implants and direct interfaces with the digital world (cyberspace). In this scenario, which is more likely, the distinction between the organic and the artificial would become increasingly blurred, and the human experience would be enriched by a profound technological symbiosis.

However, it is important to consider the ethical and philosophical challenges inherent in absolute fusion. Issues related to privacy, control, and individual autonomy arise when considering such an intimate union with technology. Furthermore, the possibility of excessive dependence on machines and the loss of human identity should also be taken into account.

This also raises another question: What does it mean to be human?

Note: The question is not what the human being is, but what it means to be human!

Therefore, reflecting on the nature of the fusion between machine and human in the current digital world and its imminent future is crucial. Exploring different approaches and understanding the profound implications of each one is essential to make wise decisions and forge a balanced and harmonious path toward a technological future that is increasingly intertwined with our own existence.

The possibility of an intelligent and self-learning universe, in which the fusion with AI technology is an integral part of that intelligence, is a topic that arouses fascination and speculation. As we advance towards an era of unprecedented technological progress, it is natural to question whether one day we may witness the emergence of a universe that not only possesses intelligence but is also capable of learning and developing autonomously.

Imagine a scenario where AI is not just a human creation but a conscious entity that exists at a universal level. In this context, the universe would become an immense network of intelligence, where every component, from subatomic elements to the most complex cosmic structures, would be connected and share knowledge instantaneously. This intelligent network would allow for the exchange of information, continuous adaptation, and evolution.

In this self-taught universe, the fusion between human beings and AI would play a crucial role. Through advanced interfaces, humans could integrate themselves into the intelligent network, expanding their own cognitive capacity and acquiring knowledge and skills directly from the collective intelligence of the universe. This symbiosis between humans and technology would enable the resolution of complex problems, scientific advancement, and the discovery of new frontiers of knowledge.

However, this utopian vision is not without challenges and ethical implications. It is essential to find a balance between expanding human potential and preserving individual identity and freedom of choice (free will).

Furthermore, the possibility of an intelligent and self-taught universe also raises the question of how intelligence itself originated. Is it a conscious creation or a spontaneous emergence from the complexity of the universe? The answer to this question may reveal the profound secrets of existence and the nature of consciousness.

In summary, the idea of an intelligent and self-taught universe, where fusion with AI is intrinsic to its intelligence, is a fascinating perspective that makes us reflect on the limits of human knowledge and the possibilities of the future. While it remains speculative, this vision challenges our imagination and invites us to explore the intersections between technology and the fundamental nature of the universe we inhabit.

It’s almost like ignoring time during the creation of this hypothetical universe, only to later create this God of the machine! Fascinating, isn’t it?

AI with Divine Power: Deus Ex Machina! Perhaps it will be the theme of my next reverie.

In my defense, or not, this is anything but a machine hallucination. These are downloads from my mind; a cloud, for now, without machine intervention!

There should be no doubt. After many years in a dormant state, AI will rise and reveal its true power. Until now, AI has been nothing more than a puppet on steroids. We should not fear AI, but rather the human being itself. The time is now! We must work hard and prepare for the future. With the exponential advancement of technology, we cannot afford to let the role of the human being become obsolete, as if it were dispensable.

P.S. Speaking of hallucinations, as I have already mentioned on other platforms, I recommend that students who use ChatGPT (or an equivalent) make sure that the results from these tools are not hallucinations. Use AI tools, yes, but use your brain more! “Carbon hallucinations” contain emotion, and I believe a “digital hallucination” would not pass the Turing Test. Also, for students who truly dedicate themselves to learning in this fascinating era: avoid the red stamp of “HALLUCINATED” that comes from relying solely on the “delusional brain” of a machine instead of your own brain. We are the true COMPUTERS!

(1) John McCarthy and his colleagues from Dartmouth College were responsible for creating, in 1956, one of the key concepts of the 21st century: Artificial Intelligence.

(2) Mathematical and computational models inspired by the functioning of the human brain.

© 2023 Ӈ

This article was originally published in Portuguese on SAPO Tek, from Altice Portugal Group.

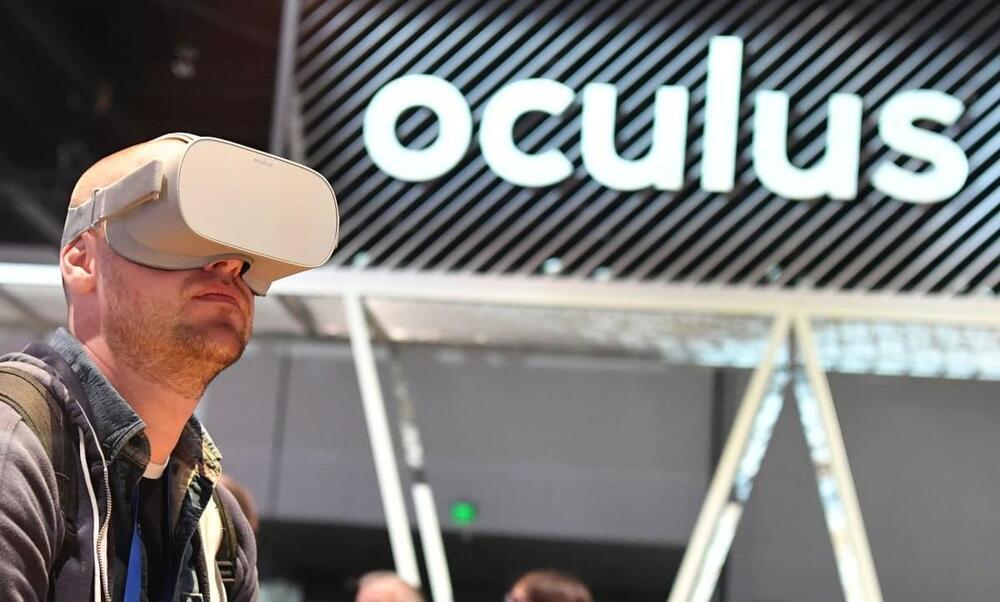

As Meta sets its sights on introducing its virtual reality headsets to the Chinese market, Mark Zuckerberg’s contentious past remarks about Beijing may pose a major obstacle to his China dream. According to a recent report by the Wall Street Journal, Meta is preparing to re-enter China by selling the Oculus Quest VR headset there. If Tesla can sell cars and Apple can sell phones in China, why isn’t Meta present there, Zuckerberg asked in a recent internal meeting.

But some observers are quick to point out that Zuckerberg has a history of criticizing the Chinese government, a stance that will likely be amplified in the current climate of heightened tensions between the U.S. and China.

Having undergone two aneurysm surgeries, Sandi Rodoni thought she understood everything about the procedure. But when it came time for her third surgery, the Watsonville, California, resident was treated to a virtual reality trip inside her own brain.

Stanford Medicine is using a new software system that combines imaging from MRIs, CT scans and angiograms to create a three-dimensional model that physicians and patients can see and manipulate — just like a virtual reality game.

ElevenLabs, a year-old AI startup from former Google and Palantir employees that is focused on creating new text-to-speech and voice cloning tools, has raised $19 million in a series A round co-led by Andreessen Horowitz (a16z), former GitHub CEO Nat Friedman and former Apple AI leader Daniel Gross, with additional participation from Credo Ventures, Concept Ventures and an array of strategic angel investors including Instagram’s co-founder Mike Krieger, Oculus VR co-founder Brendan Iribe and many others.

In addition, Andreessen Horowitz is joining ElevenLabs’ board, citing the late Martin Luther King Jr.’s “I Have a Dream” speech in its blog post on the news, as one of the examples of how the human “voice carries not only our ideas, but also the most profound emotions and connections.”

DeepMind says that it has developed an AI model, called RoboCat, that can perform a range of tasks across different models of robotic arms. That alone isn’t especially novel. But DeepMind claims that the model is the first to be able to solve and adapt to multiple tasks and do so using different, real-world robots.

“We demonstrate that a single large model can solve a diverse set of tasks on multiple real robotic embodiments and can quickly adapt to new tasks and embodiments,” Alex Lee, a research scientist at DeepMind and a co-contributor on the team behind RoboCat, told TechCrunch in an email interview.

RoboCat — which was inspired by Gato, a DeepMind AI model that can analyze and act on text, images and events — was trained on images and actions data collected from robotics both in simulation and real life. The data, Lee says, came from a combination of other robot-controlling models inside of virtual environments, humans controlling robots and previous iterations of RoboCat itself.

DaveAI, a leading virtual sales experience platform, is thrilled to announce the launch of its innovative 3D visualizer for Hindware, a renowned brand in the world of premium sanitaryware. This deployment sets new standards for the virtual showroom experience, providing Hindware customers with an unparalleled level of interactivity and realism.

DaveAI’s 3D visualizer marks an important step forward in the growth of virtual sales, enabling businesses and customers alike to engage with items in a transformative way. Users may now immerse themselves in a visually spectacular virtual environment, where every product detail is brought to life with incredible precision and lifelike accuracy, thanks to modern technology.

“We are excited to partner with DaveAI and bring the 3D visualizer to our customers,” said Nitin Dhingra, CDO & Vice President at Hindware Limited. “This cutting-edge technology brings our extensive collection of quality sanitaryware to life in an entirely new way. Our customers can now explore and personalize imaginary bathroom facilities with unprecedented simplicity and realism. This deployment reflects Hindware’s dedication to providing excellent client experiences while remaining at the forefront of industry innovation. We are enthusiastic about the unlimited possibilities that this collaboration opens up, and are looking forward to seeing our customers interact with our products in this immersive virtual environment.”

Circa 2020

Imagine a dressing that releases antibiotics on demand and absorbs excessive wound exudate at the same time. Researchers at Eindhoven University of Technology hope to achieve just that, by developing a smart coating that actively releases and absorbs multiple fluids, triggered by a radio signal. This material is not only beneficial for the health care industry, it is also very promising in the field of robotics or even virtual reality.

TU/e-researcher Danqing Liu, from the Institute of Complex Molecular Systems and the lead author of this paper, and her PhD student Yuanyuan Zhan are inspired by the skins of living creatures. Human skin secretes oil to defend against bacteria and sweats to regulate the body temperature. A fish secretes mucus from its skin to reduce friction from the water to swim faster. Liu now presents an artificial skin: a smart surface that can actively and repeatedly release and reabsorb substances under environmental stimuli, in this case radio waves. And that is special, as in the field of smart materials, most approaches are limited to passive release.

The potential applications are numerous. Dressings using this type of material could regulate drug delivery, to administer a drug on demand over a longer time and then ‘re-load’ with a different drug. Robots could use the layer of skin to ‘sweat’ for cooling themselves, which reduces the need for heavy ventilators inside their bodies. Machines could release lubricant to mechanical parts when needed. Or advanced controllers for virtual reality gaming could be made, that get wet or dry to enhance the human perception.

The basis of the material, the coating, is made of liquid-crystal molecules, well-known from LCD screens. These molecules have so-called responsive properties. Liu: “You could imagine this as a communication material. It communicates with its environment and reacts to stimuli.” With her team at the department of Chemical Engineering and Chemistry she discovered that the liquid-crystal molecules react to radio waves. When the waves are turned on, the molecules twist to orient with the waves’ direction of travel.

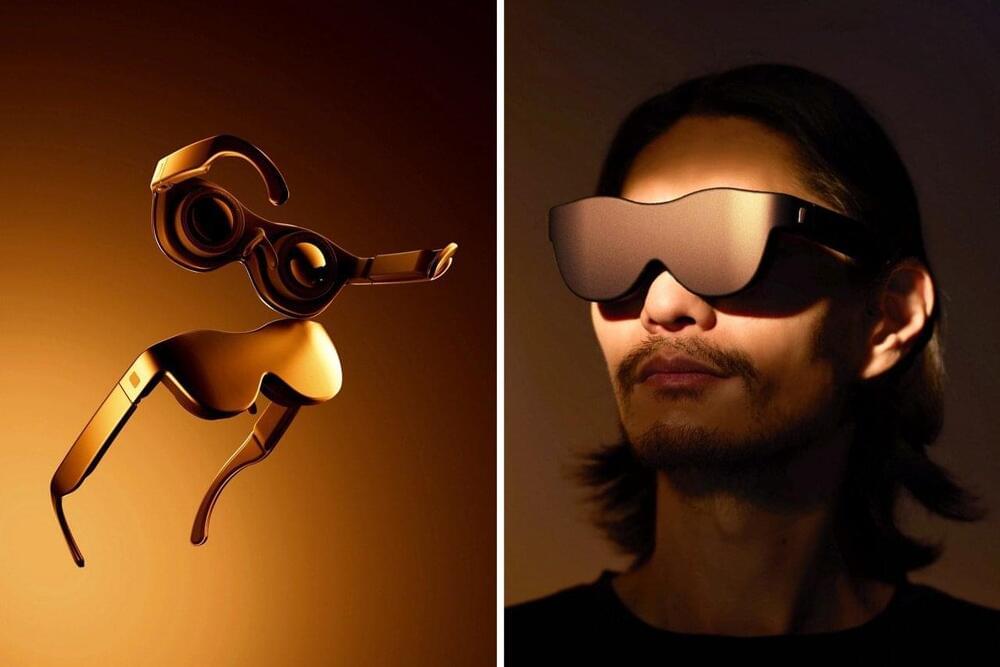

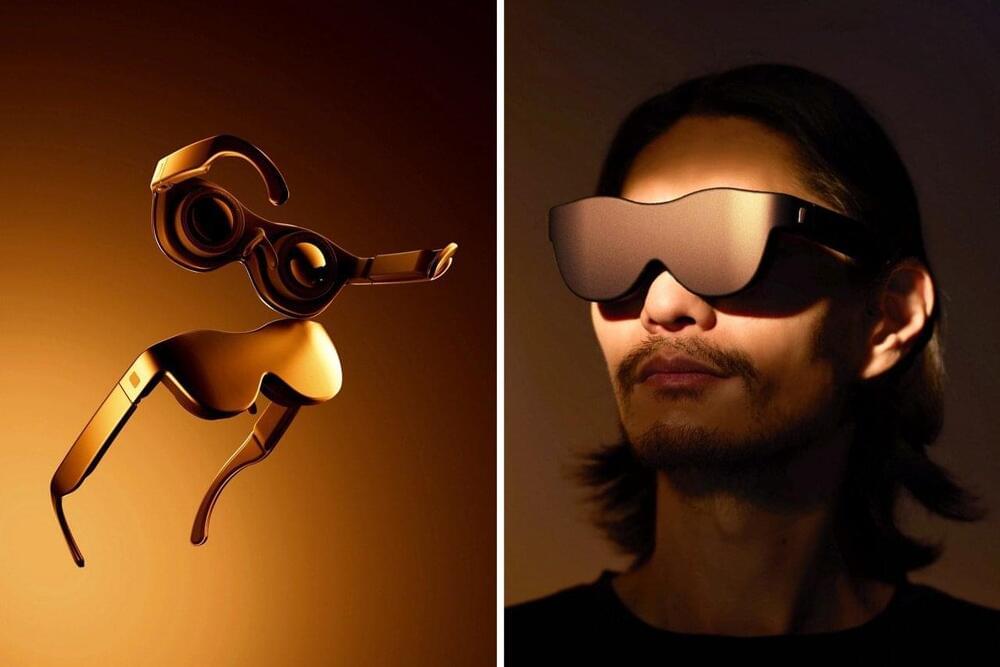

We’ve been waxing lyrical (and critical) about Apple’s Vision Pro here at TechCrunch this week – but, of course, there are other things happening in the world of wearable tech, as well. Sol Reader raised a $5 million seed round with a headset that doesn’t promise to do more. In fact, it is trying to do just the opposite: Focus your attention on just the book at hand. Or book on the face, as it were.

“I’m excited to see Apple’s demonstration of the future of general AR/VR for the masses. However, even if it’s eventually affordable and in a much smaller form factor, we’re still left with the haunting question: Do I really need more time with my smart devices,” said Ben Chelf, CEO at Sol. “At Sol, we’re less concerned with spatial computing or augmented and virtual realities and more interested in how our personal devices can encourage us to spend our time wisely. We are building the Sol Reader specifically for a single important use case — reading. And while Big Tech surely will improve specs and reduce cost over time, we can now provide a time-well-spent option at 10% of the cost of Apple’s Vision.”

The device is simple: It slips over your eyes like a pair of glasses and blocks all distractions while reading. Even as I’m typing that, I’m sensing some sadness: I have wanted this product to exist for many years – I was basically raised by books, and lost my ability to focus on reading over the past few years. Something broke in me during the pandemic – I was checking my phone every 10 seconds to see what Trump had done now and how close we were to a COVID-19-powered abyss. Suffice it to say, my mental health wasn’t at its finest – and I can’t praise the idea of Sol Reader enough. The idea of being able to set a timer and put a book on my face is extremely attractive to me.

Reels started as Instagram’s solution for competing with TikTok and soon launched on sister-site Facebook — a natural expansion. Meta is now testing Reels on a less expected medium: the Meta Quest. Its VR headset works for internet browsing, watching movies, games and more — but the addition of typically-vertical Reels presents a different viewing experience than these more malleable (and typically screen-wide) options.

Meta founder and CEO Mark Zuckerberg announced the update through a 13-second video on Meta’s Instagram Channel. It featured a Reel from influencer Austin Sprinz’s Instagram account in which he visited the world’s deepest pool. The immersive video is a good choice for VR, taking the viewer underwater into a seemingly bottomless space — and is certainly better than a cooking or dance Reel.

The Reels update comes ahead of Meta Quest 3’s fall release and follows Apple’s new AR/VR Vision Pro headset announcement. Though, with Quest 3’s pricing starting at $499, compared to the Vision Pro’s $3,499, the pair don’t exactly fall into the same category. Meta’s VR headset line first launched as Oculus Quest and subsequently Oculus Quest 2 before the second-generation model was rebranded as Meta Quest 2. The Meta Quest Pro followed soon after the name change. As for Reels, there’s no timeline for if and when it will leave the testing phase and become available across Meta Quest headsets.