Vacuum ultraviolet (VUV, 100–200 nm) light sources are indispensable for advanced spectroscopy, quantum research, and semiconductor lithography. Although second harmonic generation (SHG) using nonlinear optical (NLO) crystals is one of the simplest and most efficient methods for generating VUV light, the scarcity of suitable NLO crystals has long been a bottleneck.

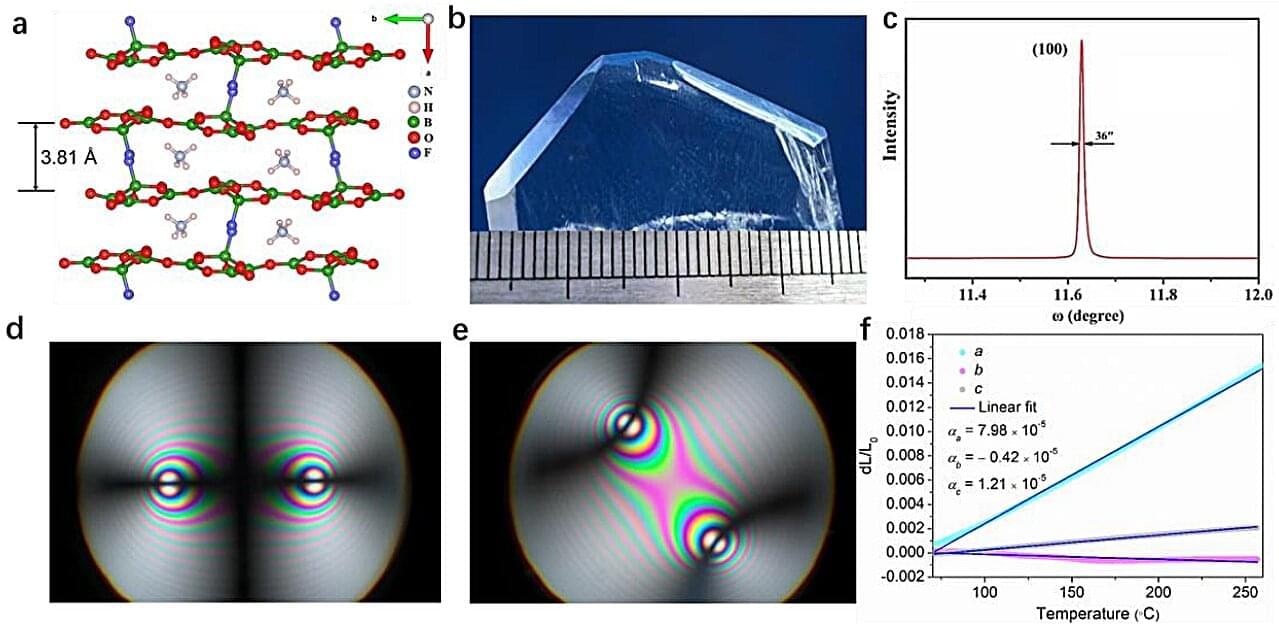

To address this problem, a research team led by Prof. Pan Shilie at the Xinjiang Technical Institute of Physics and Chemistry of the Chinese Academy of Sciences (CAS) has developed the fluorooxoborate crystal NH4B4O6F (ABF), which offers an effective solution to the practical challenges facing VUV NLO materials. The team's findings were recently published in *Nature*.

The team's key achievement is the development of techniques for growing centimeter-scale, high-quality ABF crystals and for processing these highly anisotropic crystals into devices. Notably, ABF combines a set of properties that are essential for VUV NLO materials but usually work against one another: excellent VUV transparency, a strong NLO coefficient, and birefringence large enough for VUV phase-matching. It also meets stringent practical criteria: crystals large enough to fabricate devices cut at specific phase-matching angles, stable physical and chemical properties, a high laser-induced damage threshold, and good processability. This breakthrough resolves a long-standing challenge in the field, since no previous crystal had met all of these criteria simultaneously.