Topological materials could usher in a new age of electronics, but scientists are still discovering surprising aspects of their quantum nature.

At first glance, some scientific research can seem, well, impractical. When physicists began exploring the strange, subatomic world of quantum mechanics a century ago, they weren’t trying to build better medical tools or high-speed internet. They were simply curious about how the universe worked at its most fundamental level.

Yet without that “curiosity-driven” research—often called basic science—the modern world would look unrecognizable.

“Basic science drives the really big discoveries,” says Steve Kahn, UC Berkeley’s dean of mathematical and physical sciences. “Those paradigm changes are what really drive innovation.”

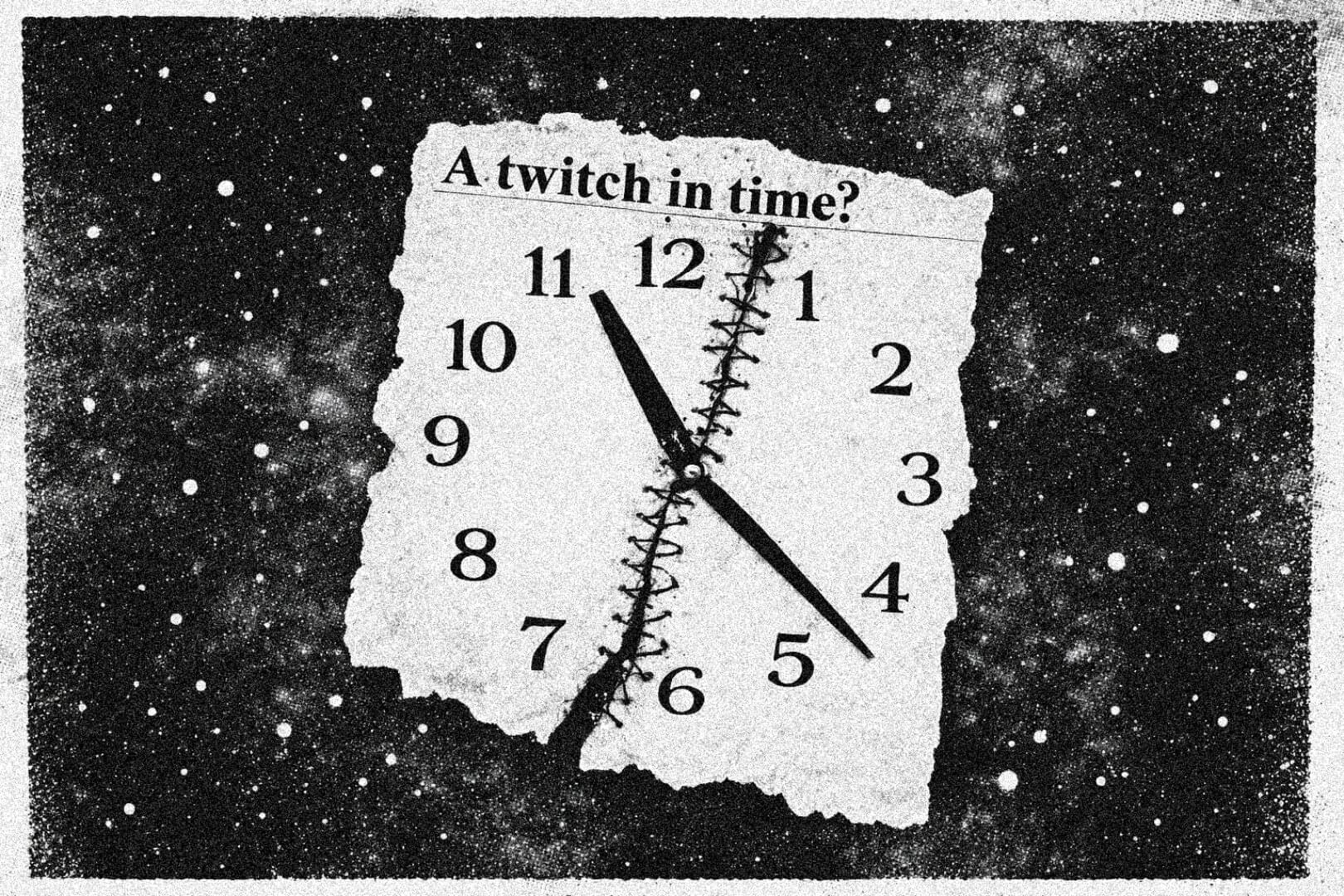

Quantum mechanics is rich with paradoxes and contradictions. It describes a microscopic world in which particles exist in a superposition of states—being in multiple places and configurations all at once, defined mathematically by what physicists call a “wavefunction.” But this runs counter to our everyday experience of objects that are either here or there, never both at the same time.

Typically, physicists manage this conflict by arguing that, when a quantum system comes into contact with a measuring device or an experimental observer, the system’s wavefunction “collapses” into a single, definite state. Now, with support from the Foundational Questions Institute, FQxI, an international team of physicists has shown that a family of unconventional solutions to this measurement problem—called “quantum collapse models”—has far-reaching implications for the nature of time and for clock precision.

They published their results, which suggest a new way to distinguish these rival models from standard quantum theory, in Physical Review Research in November 2025.

A hundred years ago, quantum mechanics was a radical theory that baffled even the brightest minds. Today, it’s the backbone of technologies that shape our lives, from lasers and microchips to quantum computers and secure communications.

In a sweeping new perspective published in Science, Dr. Marlan Scully, a university distinguished professor at Texas A&M University, traces the journey of quantum mechanics from its quirky beginnings to its role in solving some of science’s toughest challenges.

“Quantum mechanics started as a way to explain the behavior of tiny particles,” said Scully, who is also affiliated with Princeton University. “Now it’s driving innovations that were unimaginable just a generation ago.”

A new unified theory connects two fundamental domains of modern quantum physics. It joins two opposing views of how a single exotic particle behaves in a many-body system: as a mobile or as a static impurity among a large number of fermions, a so-called Fermi sea.

This new theoretical framework was developed at the Institute for Theoretical Physics of Heidelberg University. It describes the emergence of what are known as quasiparticles and furnishes a connection between two different quantum states that, according to the Heidelberg researchers, will have far-reaching implications for current quantum matter experiments.

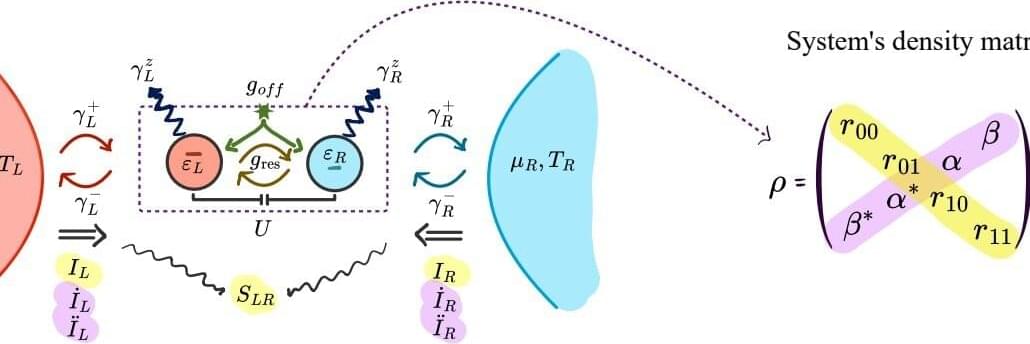

A team from UNIGE shows that it is possible to determine the state of a quantum system from indirect measurements when it is coupled to its environment.

What is the state of a quantum system? Answering this question is essential for exploiting quantum properties and developing new technologies. In practice, this characterization generally relies on direct measurements, which require extremely well-controlled systems because the systems' sensitivity to external disturbances can distort the results. This constraint limits the applicability of direct measurements to specific experimental contexts.

A team from the University of Geneva (UNIGE) presents an alternative approach, tailored to open quantum systems, in which the interaction with the environment is turned into an advantage rather than an obstacle. Published in Physical Review Letters with the “Editor’s Suggestion” label, this work brings quantum technologies a step closer to real-world conditions.

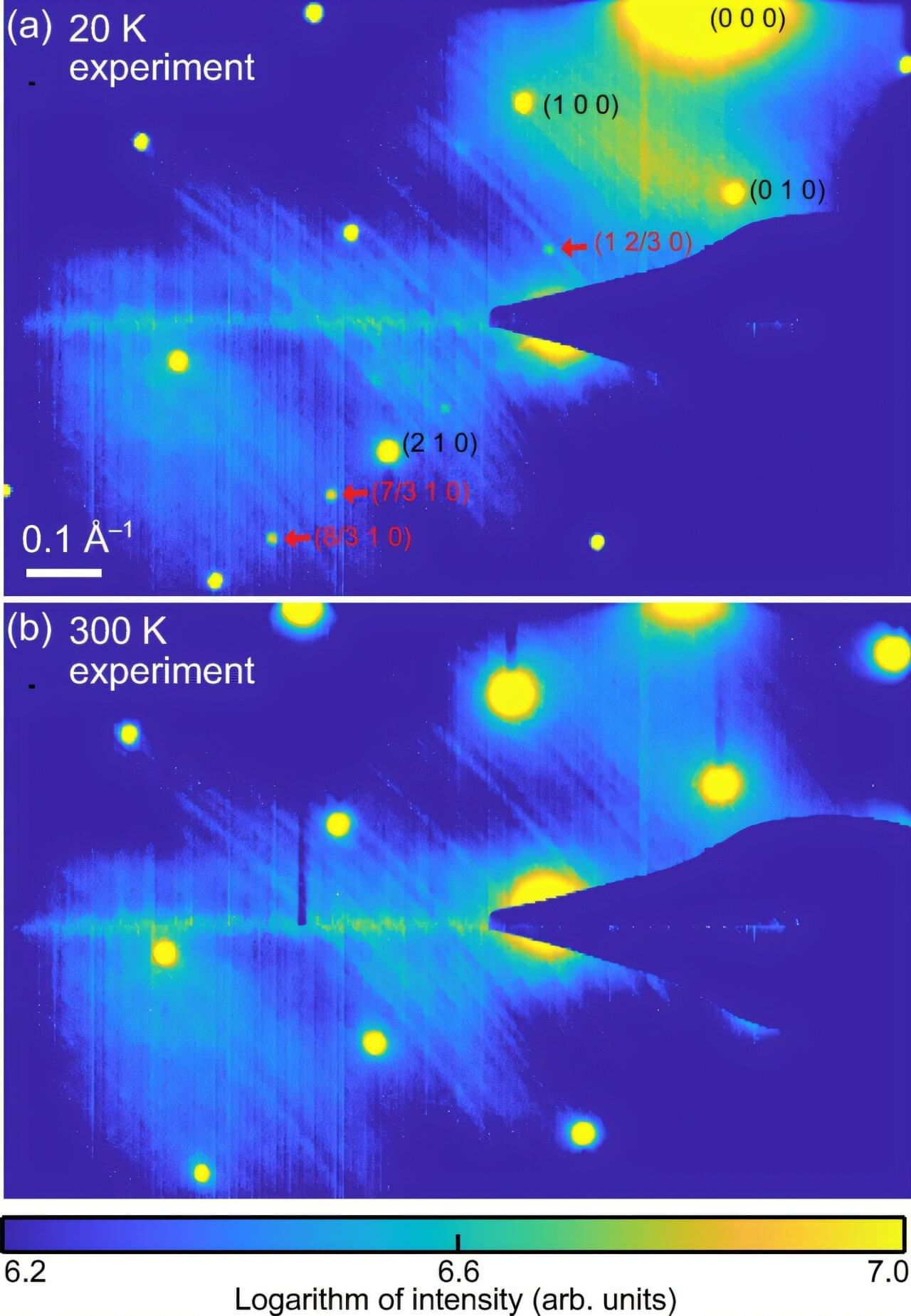

The mystery of quantum phenomena inside materials—such as superconductivity, where electric current flows without energy loss—lies in when electrons move together and when they break apart. KAIST researchers have succeeded in directly observing the moments when electrons form and dissolve ordered patterns.

Research teams led by Professors Yongsoo Yang, SungBin Lee, Heejun Yang, and Yeongkwan Kim of the Department of Physics, in an international collaboration with Stanford University, have become the first in the world to spatially visualize the formation and disappearance of charge density waves (CDWs) inside quantum materials.

The research is published in Physical Review Letters.