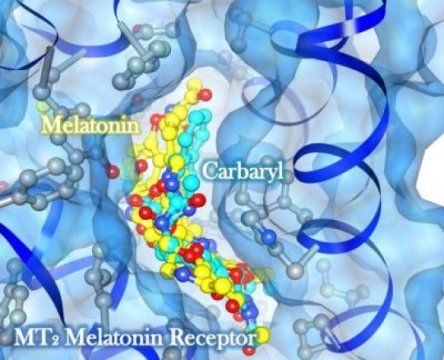

Synthetic chemicals commonly found in insecticides and garden products bind to the receptors that govern our biological clocks, University at Buffalo researchers have found. The research suggests that exposure to these insecticides adversely affects melatonin receptor signaling, creating a higher risk for metabolic diseases such as diabetes.

Published online on Dec. 27 in Chemical Research in Toxicology, the research combined a big data approach, using computer modeling on millions of chemicals, with standard wet-laboratory experiments. It was funded by a grant from the National Institute of Environmental Health Sciences, part of the National Institutes of Health.

Disruptions in human circadian rhythms are known to put people at higher risk for diabetes and other metabolic diseases, but the mechanism involved is not well understood.