A new AI algorithm can create the most advanced deepfakes yet, complete with emotions and gestures, after just a few minutes of training.


How an algorithm may decide your career

Want a job with a successful multinational? You will face lots of competition. Two years ago Goldman Sachs received a quarter of a million applications from students and graduates. Those are not just daunting odds for jobhunters; they are a practical problem for companies. If a team of five Goldman human-resources staff, working 12 hours every day, including weekends, spent five minutes on each application, they would take nearly a year to complete the task of sifting through the pile.
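The "nearly a year" figure follows directly from the numbers in the paragraph: five people at 144 applications each per day gives 720 per day. This short Python sketch uses only the figures quoted above (250,000 applications, five staff, 12-hour days, five minutes per application); the function name `days_to_screen` is illustrative.

```python
# Back-of-the-envelope check of the screening workload described above.
APPLICATIONS = 250_000        # "a quarter of a million applications"
STAFF = 5                     # team of five HR staff
HOURS_PER_DAY = 12            # 12 hours every day, weekends included
MINUTES_PER_APPLICATION = 5   # five minutes on each application


def days_to_screen(applications, staff, hours_per_day, minutes_each):
    """Return the number of days needed for the team to read every application."""
    per_person_per_day = hours_per_day * 60 / minutes_each  # 144 applications
    team_per_day = per_person_per_day * staff               # 720 applications
    return applications / team_per_day


days = days_to_screen(APPLICATIONS, STAFF, HOURS_PER_DAY, MINUTES_PER_APPLICATION)
print(f"{days:.0f} days")  # → 347 days
```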

Little wonder that most large firms use a computer program, or algorithm, when it comes to screening candidates seeking junior jobs. And that means applicants would benefit from knowing exactly what the algorithms are looking for.

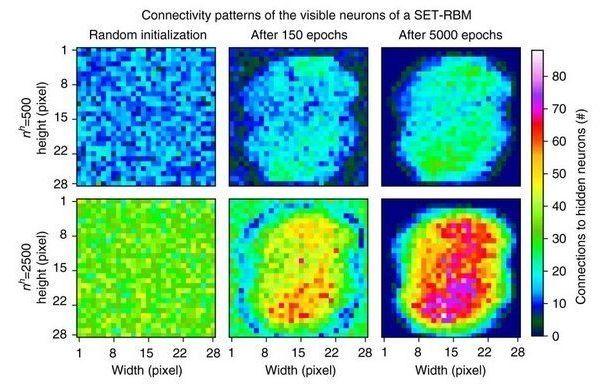

New AI method increases the power of artificial neural networks

An international team of scientists from Eindhoven University of Technology, the University of Texas at Austin and the University of Derby has developed a new method that, they say, quadratically accelerates artificial intelligence (AI) training algorithms. According to the team, this gives full AI capability to inexpensive computers and could, within one to two years, allow supercomputers to use artificial neural networks that quadratically exceed the capabilities of today's networks. The scientists presented their method on June 19 in the journal Nature Communications.

Artificial neural networks (ANNs) are at the very heart of the AI revolution that is shaping every aspect of society and technology. But the ANNs we have been able to handle so far are nowhere near capable of solving very complex problems. The very latest supercomputers would struggle with a 16-million-neuron network (just about the size of a frog brain), while a powerful desktop computer would take more than a dozen days to train a mere 100,000-neuron network.
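One common intuition behind "quadratic" claims like these: in a fully connected layer linking n neurons to n neurons, the number of weights grows as n², whereas a sparse layer that keeps only a fixed number of connections per neuron grows roughly linearly. The sketch below compares the two counts. The sparsity budget `epsilon` (average connections per neuron) and both function names are hypothetical choices for illustration, not parameters taken from the Nature Communications paper.

```python
def dense_connections(n_in: int, n_out: int) -> int:
    """Weights in a fully connected layer: every input links to every output."""
    return n_in * n_out


def sparse_connections(n_in: int, n_out: int, epsilon: int = 20) -> int:
    """Weights in a hypothetical sparse layer keeping about `epsilon`
    connections per neuron, so the count grows linearly with layer width.
    Capped at the dense count for very small layers."""
    return min(epsilon * (n_in + n_out), n_in * n_out)


for n in (1_000, 100_000, 1_000_000):
    dense = dense_connections(n, n)
    sparse = sparse_connections(n, n)
    # The ratio itself grows linearly with n, so the gap keeps widening.
    print(f"n={n:>10,}: dense={dense:.2e}  sparse={sparse:.2e}  ratio={dense / sparse:,.0f}x")
```

Doubling the layer width doubles the sparse count but quadruples the dense one, which is why savings of this kind become more dramatic as networks grow.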

Caltech’s new machine learning algorithm predicts IQ from fMRI

Scientists at the California Institute of Technology can now assess a person’s intelligence in moments with nothing more than a brain scan and an AI algorithm, university officials announced this summer.

Caltech researchers led by Ralph Adolphs, PhD, a professor of psychology, neuroscience and biology and chair of the Caltech Brain Imaging Center, said in a recent study that they, alongside colleagues at Cedars-Sinai Medical Center and the University of Salerno, were able to predict IQ in hundreds of participants from fMRI scans of resting-state brain activity. The work is pending publication in the journal Philosophical Transactions of the Royal Society.

Adolphs and his team collected data from nearly 900 men and women for their research, all of whom were part of the National Institutes of Health (NIH)-funded Human Connectome Project. The researchers trained their machine learning algorithm on the complexities of the human brain by feeding it the brain scans and intelligence scores of these participants, which took very little effort on the participants' end.
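The training step described above is ordinary supervised learning: pair each person's scan-derived features with a measured score, fit a model, then judge it on people it has not seen. The minimal sketch below uses a single made-up feature, a one-variable least-squares fit and leave-one-out cross-validation. The synthetic data, the function names and the choice of model are all assumptions for illustration, not the Caltech team's pipeline.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Least-squares slope and intercept for y ≈ slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def leave_one_out_predictions(xs, ys):
    """Predict each person's score from a model trained on everyone else."""
    preds = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        slope, intercept = fit_line(train_x, train_y)
        preds.append(slope * xs[i] + intercept)
    return preds


def pearson(a, b):
    """Correlation between predicted and observed scores."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


# Synthetic stand-in data: one hypothetical "connectivity" feature per person,
# with scores that depend on it weakly plus noise.
random.seed(0)
features = [random.gauss(0, 1) for _ in range(200)]
scores = [100 + 5 * f + random.gauss(0, 12) for f in features]

preds = leave_one_out_predictions(features, scores)
print(f"held-out correlation r = {pearson(preds, scores):.2f}")
```

Scoring only on held-out people matters: a model judged on the same people it was trained on will look more accurate than it really is.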

Billionaire Ray Dalio: A.I. is widening the wealth gap, ‘national emergency should be declared’

It’s amusing that these people know where this is headed but aren’t interested enough to stop it.

The co-chief investment officer and co-chairman of Bridgewater Associates shared his thoughts in a Facebook post on Thursday.

Dalio says he was responding to a question about whether machine intelligence would put so many people out of work that the government would have to support them with a cash handout, a concept known as universal basic income.

In his post, Dalio wrote: “My view is that algorithmic/automated decision making is a two-edged sword that is improving total productivity but is also eliminating jobs, leading to big wealth and opportunity gaps and populism, and creating a national emergency.”

Most highly paid programmers know Python. You can learn it via an online course for just $44

Contrary to what Silicon Valley portrays, you’ll need more than drive and intelligence to land a high-paying job in the tech world. You’ll need to be well versed in one of the most popular and fastest growing programming languages: Python.


Python made its public debut in 1991, and since then it has been refined by some of the brightest programmers in the industry. That has resulted in its current status as a multifaceted yet beautifully simple language with a wide variety of applications, from interfacing with SQL databases to building websites.
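As a small illustration of the SQL use case mentioned above, the sketch below uses Python's built-in `sqlite3` module with an in-memory database, so nothing is written to disk. The table name and its rows are invented for the example.

```python
import sqlite3

# In-memory database: it disappears when the connection closes.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (title TEXT, year INTEGER)")

# Parameterized inserts ("?" placeholders) avoid building SQL strings by hand.
conn.executemany(
    "INSERT INTO books (title, year) VALUES (?, ?)",
    [("Superintelligence", 2014), ("The Master Algorithm", 2015), ("Life 3.0", 2017)],
)
conn.commit()

rows = conn.execute(
    "SELECT title FROM books WHERE year >= ? ORDER BY year",
    (2015,),
).fetchall()
print(rows)  # → [('The Master Algorithm',), ('Life 3.0',)]
conn.close()
```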

‘Breakthrough’ algorithm exponentially faster than any previous one

What if a large class of algorithms used today—from the algorithms that help us avoid traffic to the algorithms that identify new drug molecules—worked exponentially faster?

Computer scientists at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed a completely new kind of algorithm, one that exponentially speeds up computation by dramatically reducing the number of parallel steps required to reach a solution.
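The Harvard algorithm itself is not described here, but the idea of cutting the number of *parallel steps* can be illustrated with a much simpler, well-known example. Summing n numbers one after another takes n − 1 dependent steps, while combining them pairwise in a tree takes about log₂ n rounds, because all additions within a round are independent and could run at the same time. The sketch below counts those rounds. It is only an analogy for the notion of parallel depth, not the SEAS method.

```python
def sequential_sum(values):
    """Add values one at a time; each step depends on the previous one."""
    total, steps = values[0], 0
    for v in values[1:]:
        total += v
        steps += 1
    return total, steps


def tree_sum(values):
    """Add neighbouring pairs in rounds; additions within a round are
    independent, so each round could run in parallel."""
    level, rounds = list(values), 0
    while len(level) > 1:
        # Pair up elements; an odd element out is carried to the next round.
        paired = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            paired.append(level[-1])
        level, rounds = paired, rounds + 1
    return level[0], rounds


data = list(range(1, 1025))   # 1,024 numbers
print(sequential_sum(data))   # → (524800, 1023)
print(tree_sum(data))         # → (524800, 10)
```

Going from 1,023 dependent steps to 10 rounds is an exponential reduction in depth (n versus log₂ n), even though the total number of additions is unchanged.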

The researchers will present their novel approach at two upcoming conferences: the ACM Symposium on Theory of Computing (STOC), June 25–29, and the International Conference on Machine Learning (ICML), July 10–15.

Ubiquitous Computing (The Future of Computing)

Recommended Books ➤

📖 Life 3.0 — http://azon.ly/ij9u

📖 The Master Algorithm — http://azon.ly/excm

📖 Superintelligence — http://azon.ly/v8uf

This video is the twelfth and final in a multi-part series discussing computing. In this video, we’ll be discussing the future of computing: more specifically, the evolution of the field of computing, extrapolating forward from the topics we’ve discussed so far in this series!

[0:31–5:50] Starting off, we’ll discuss the three primary eras in the evolution of computing since its inception: the tabulating, programming and cognitive eras.

Afterwards, we’ll discuss infinite computing, a paradigm that incorporates cloud computing and the principles of heterogeneous architecture, and which is accelerating the transition to cognitive computing.

Finally, to wrap up, we’ll discuss the future of computing: ubiquitous computing, fueled by the rise of abundant, affordable and smart computing devices, in which computing is done using any device, in any location and in any format.

How your brain decides between knowledge and ignorance

We have a ‘thirst for knowledge’ but sometimes ‘ignorance is bliss’, so how do we choose between these two mind states at any given time?

UCL psychologists have discovered that our brains use the same algorithm and neural architecture to evaluate the opportunity to gain information as they do to evaluate rewards like food or money.

Funded by the Wellcome Trust, the research, published in the Proceedings of the National Academy of Sciences, also finds that people will pay both to obtain advance knowledge of an upcoming good event and to remain ignorant of an upcoming bad one.

What Is Optical Computing (Computing At The Speed of Light)

This video is the eighth in a multi-part series discussing computing and the first discussing non-classical computing. In this video, we’ll be discussing what optical computing is and the impact it will have on the field of computing.

[0:27–6:03] Starting off, we’ll discuss what optical (photonic) computing is: more specifically, how this paradigm shift differs from typical classical (electron-based) computers, and the benefits it will bring to computational performance and efficiency!

[6:03–10:25] Following that, we’ll look at current optical computing initiatives, including optical co-processors, optical RAM, optoelectronic devices, silicon photonics and more!
