Despite the simplicity of their visual system, fruit flies are able to reliably distinguish between individuals based on sight alone. This is a task that even humans who spend their whole lives studying Drosophila melanogaster struggle with. Researchers have now built a neural network that mimics the fruit fly’s visual system and can distinguish and re-identify flies. This may allow the thousands of labs worldwide that use fruit flies as a model organism to do more longitudinal work, looking at how individual flies change over time. It also provides evidence that the humble fruit fly’s vision is clearer than previously thought.

In an interdisciplinary project, researchers at the University of Guelph and the University of Toronto Mississauga combined expertise in fruit fly biology with machine learning to build a biologically based algorithm that churns through low-resolution videos of fruit flies. Their aim was to test whether it is physically possible for a system with such constraints to accomplish such a difficult task.

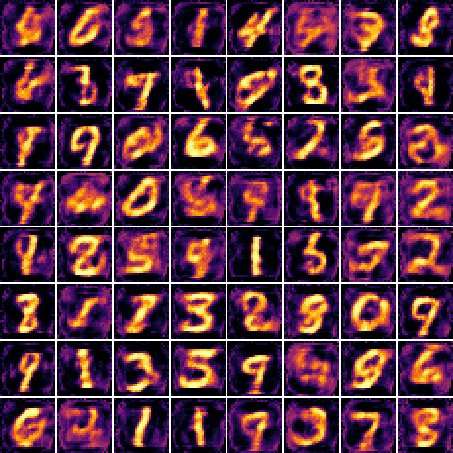

Fruit flies have small compound eyes that take in a limited amount of visual information, an estimated 29 units squared (Fig. 1A). The traditional view has been that once a fruit fly has processed the image, it can distinguish only very broad features (Fig. 1B). But the recent discovery that fruit flies can boost their effective resolution with subtle biological tricks (Fig. 1C) has led researchers to believe that vision could contribute significantly to the social lives of flies. This, combined with the discovery that the structure of their visual system looks a lot like a Deep Convolutional Network (DCN), led the team to ask: “Can we model a fly brain that can identify individuals?”
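
To get a feel for how little information that is, the sketch below shrinks a video frame to a fly-sized input. It reads the estimated 29 units squared as a 29 × 29 grid, which is an assumption, and it uses simple block averaging, which is an illustrative choice rather than the researchers' own preprocessing. The `downsample` function and the 116 × 116 test frame are hypothetical.

```python
def downsample(frame, size=29):
    """Average-pool a 2D grayscale frame (a list of rows) down to size x size.

    Illustrative only: size=29 assumes "29 units squared" means a 29 x 29 grid.
    """
    rows, cols = len(frame), len(frame[0])
    if rows < size or cols < size:
        raise ValueError("frame must be at least size x size")
    out = []
    for i in range(size):
        # Integer bin edges spread the rows evenly, even if rows % size != 0.
        r0, r1 = i * rows // size, (i + 1) * rows // size
        row = []
        for j in range(size):
            c0, c1 = j * cols // size, (j + 1) * cols // size
            block = [frame[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(block) / len(block))  # mean brightness of the block
        out.append(row)
    return out


# Hypothetical 116 x 116 grayscale frame with a diagonal brightness pattern.
frame = [[(r + c) % 256 for c in range(116)] for r in range(116)]
eye_view = downsample(frame)
print(len(eye_view), len(eye_view[0]))  # 29 29
```

Each output value stands in for one unit of the fly's view, so the 13,456 pixels of the example frame collapse to 841 numbers.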