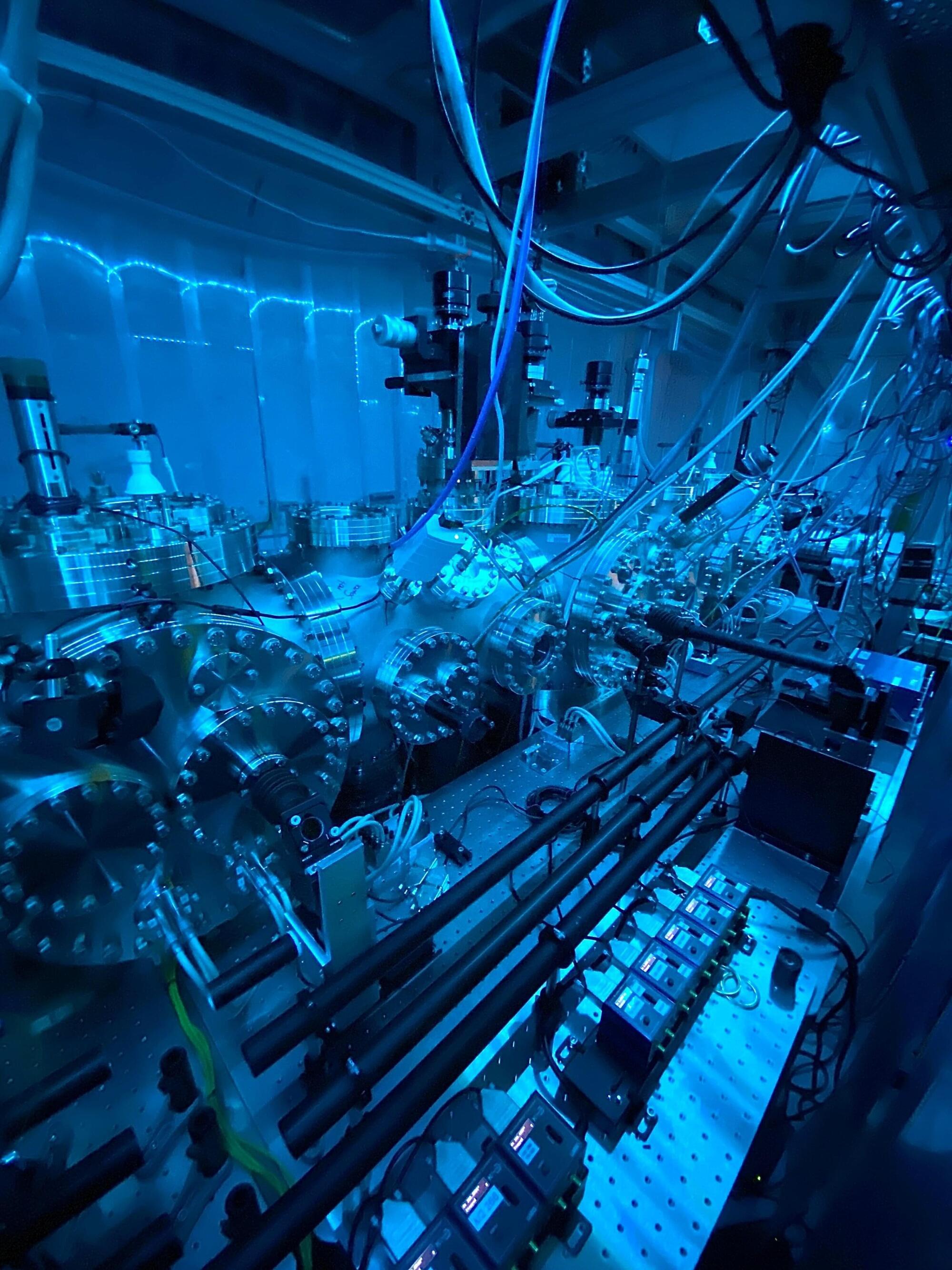

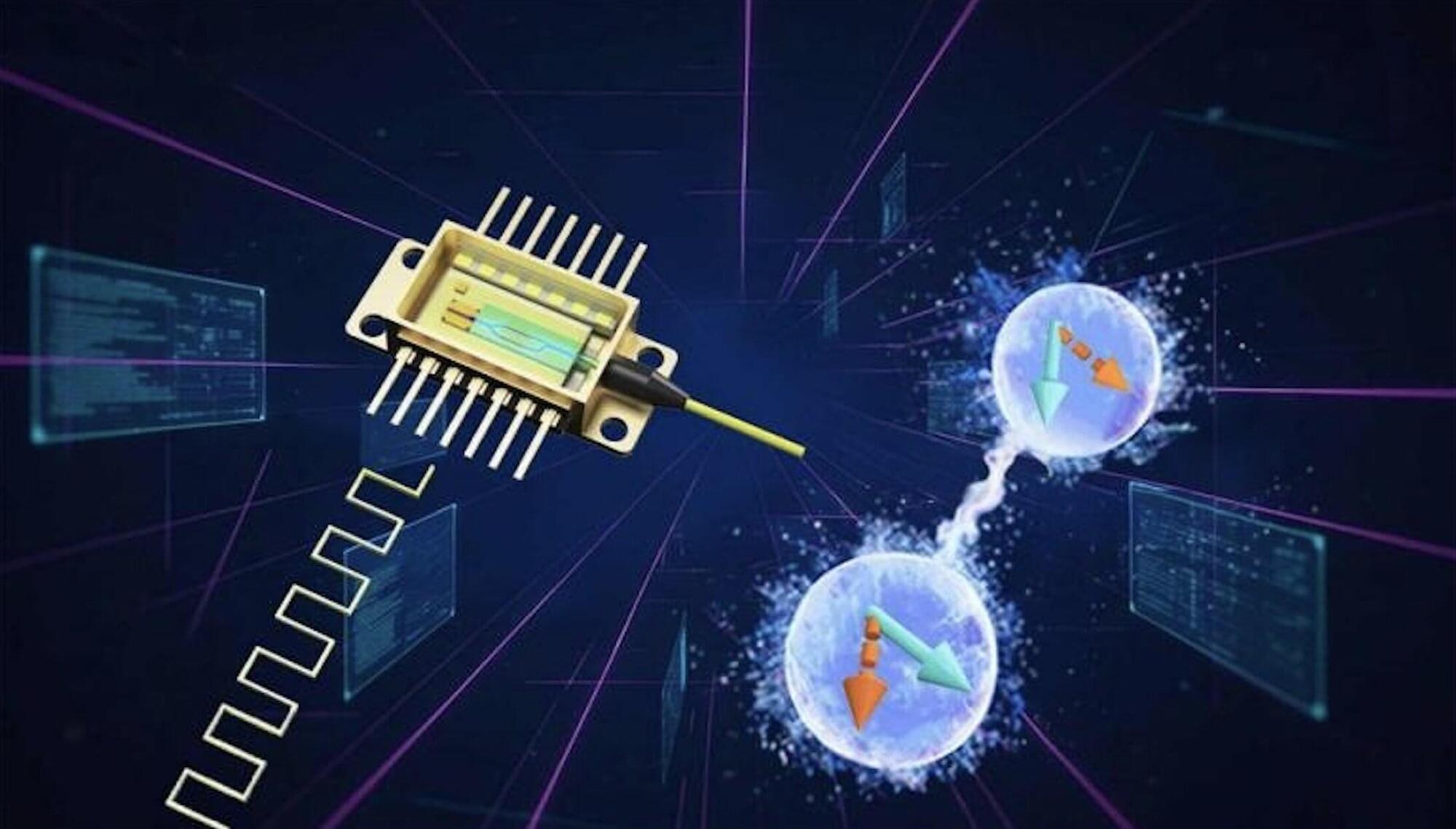

For many years, cesium atomic clocks have been reliably keeping time around the world. But the future belongs to even more accurate clocks: optical atomic clocks. Within a few years, they could change the definition of the second, the base unit of time in the International System of Units (SI). Which of the various optical clocks will serve as the basis for this is still completely open.

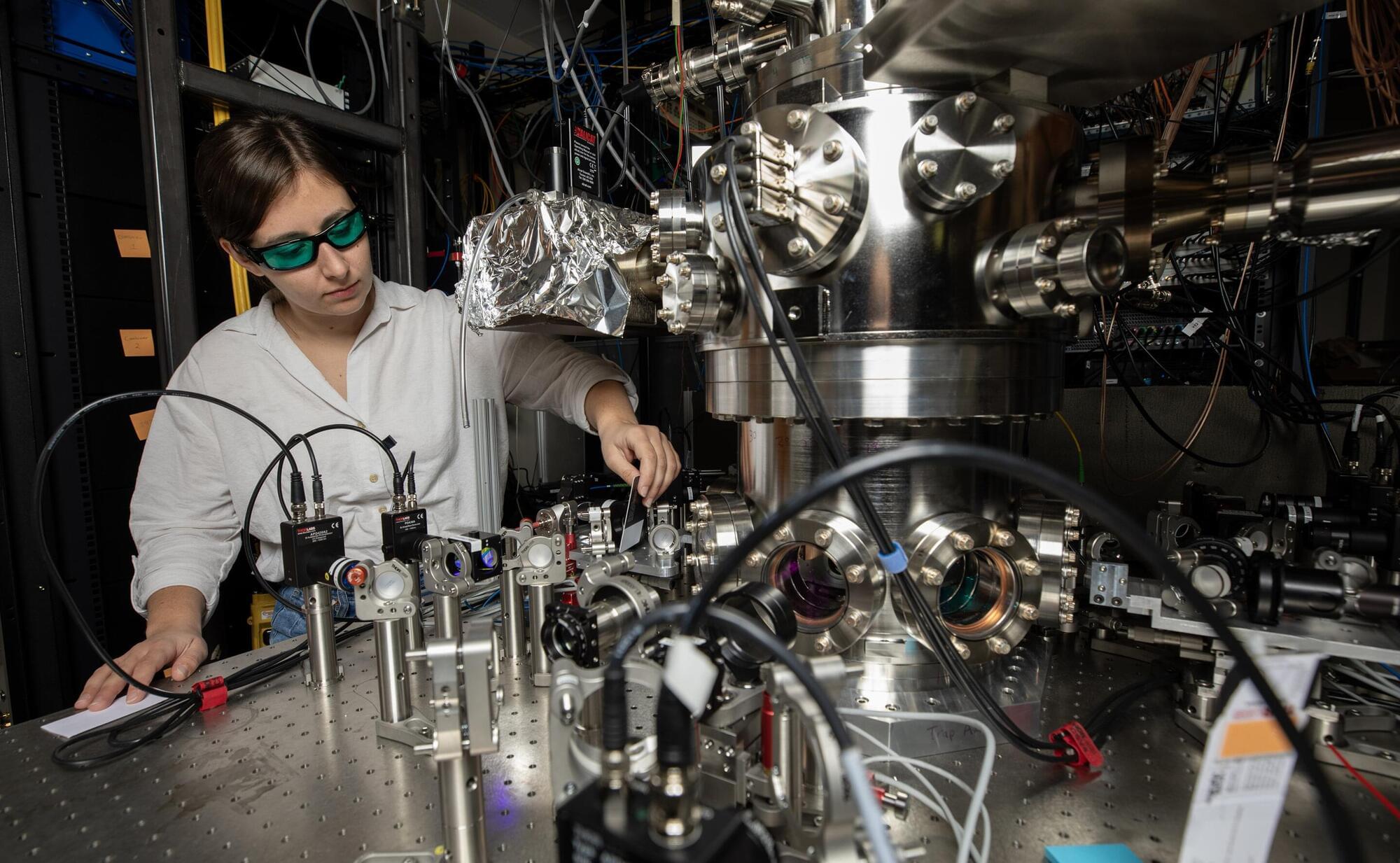

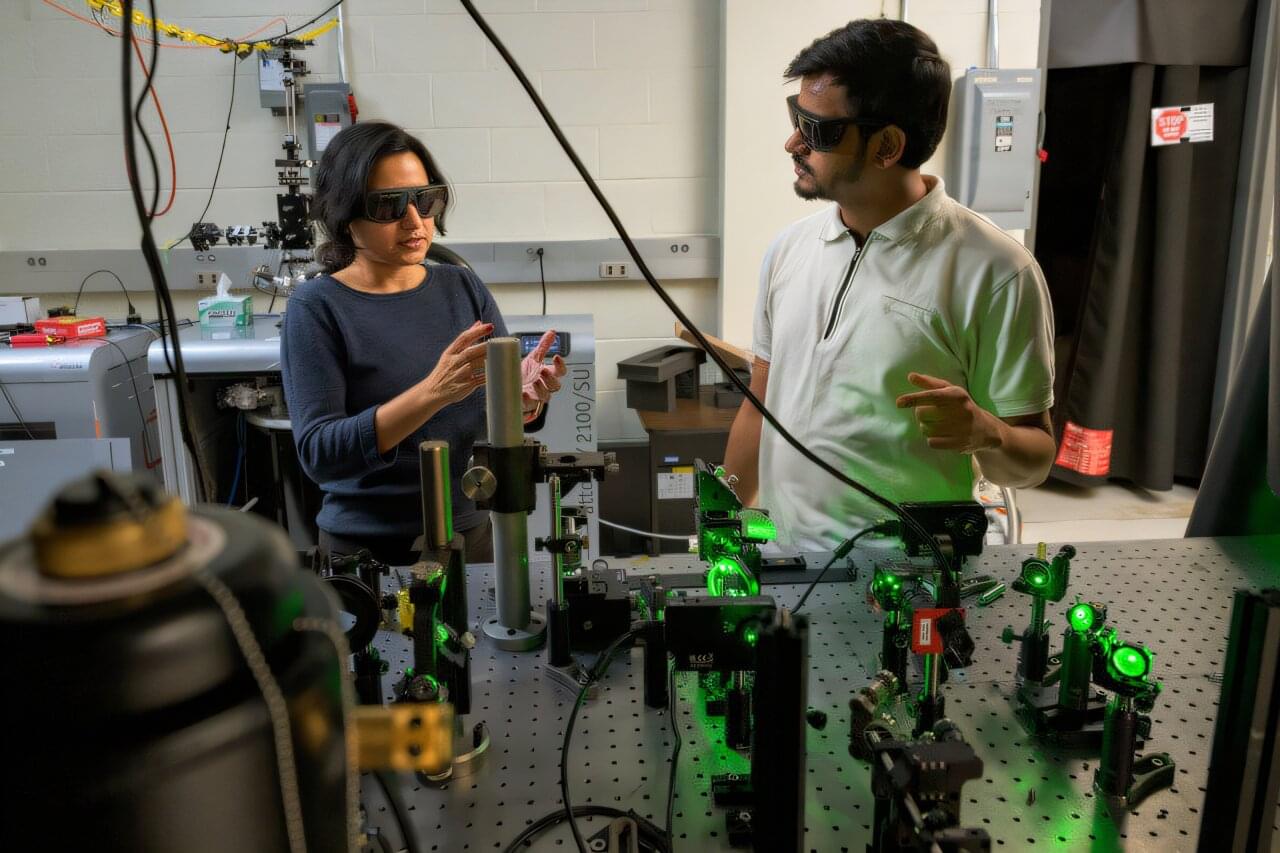

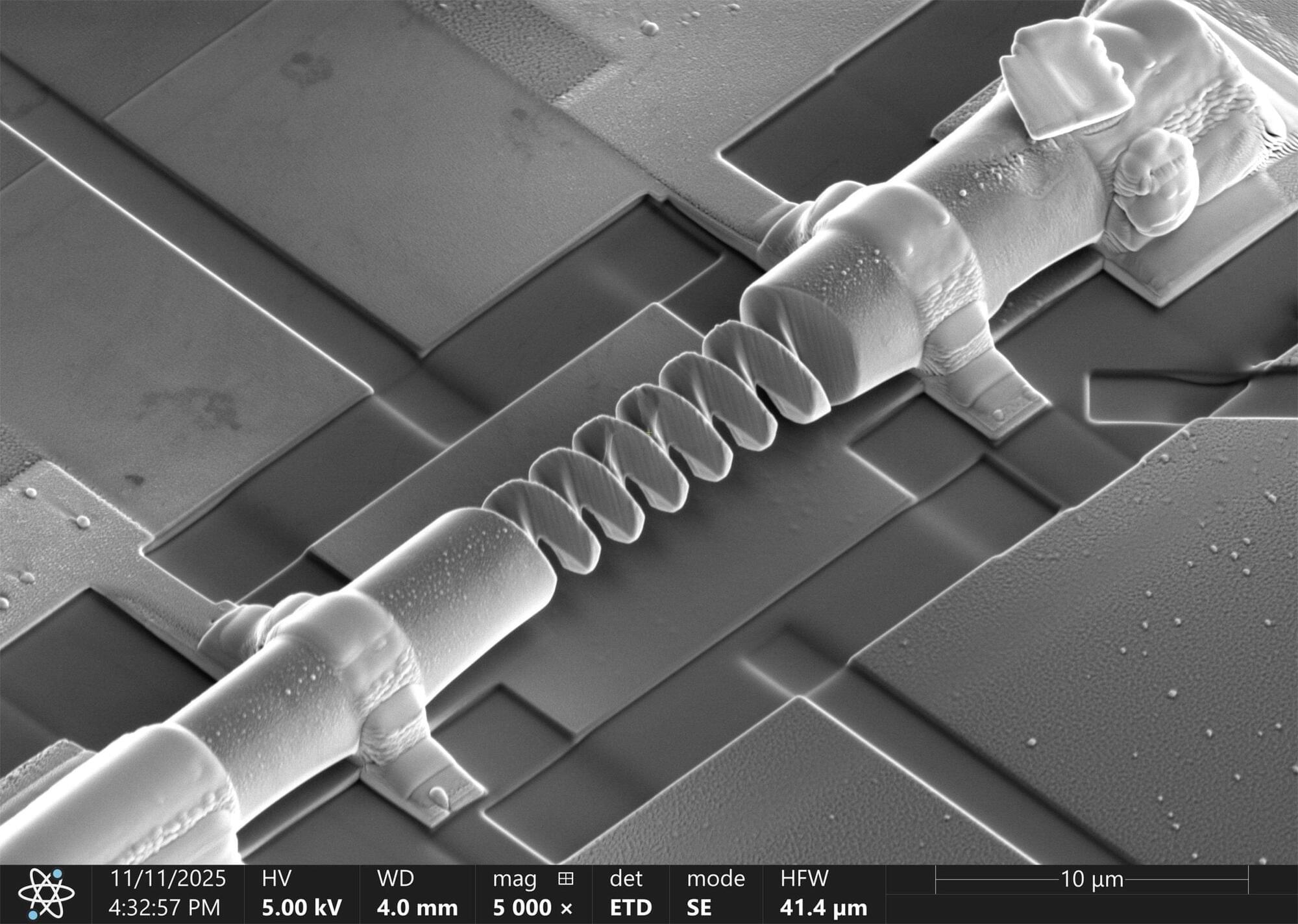

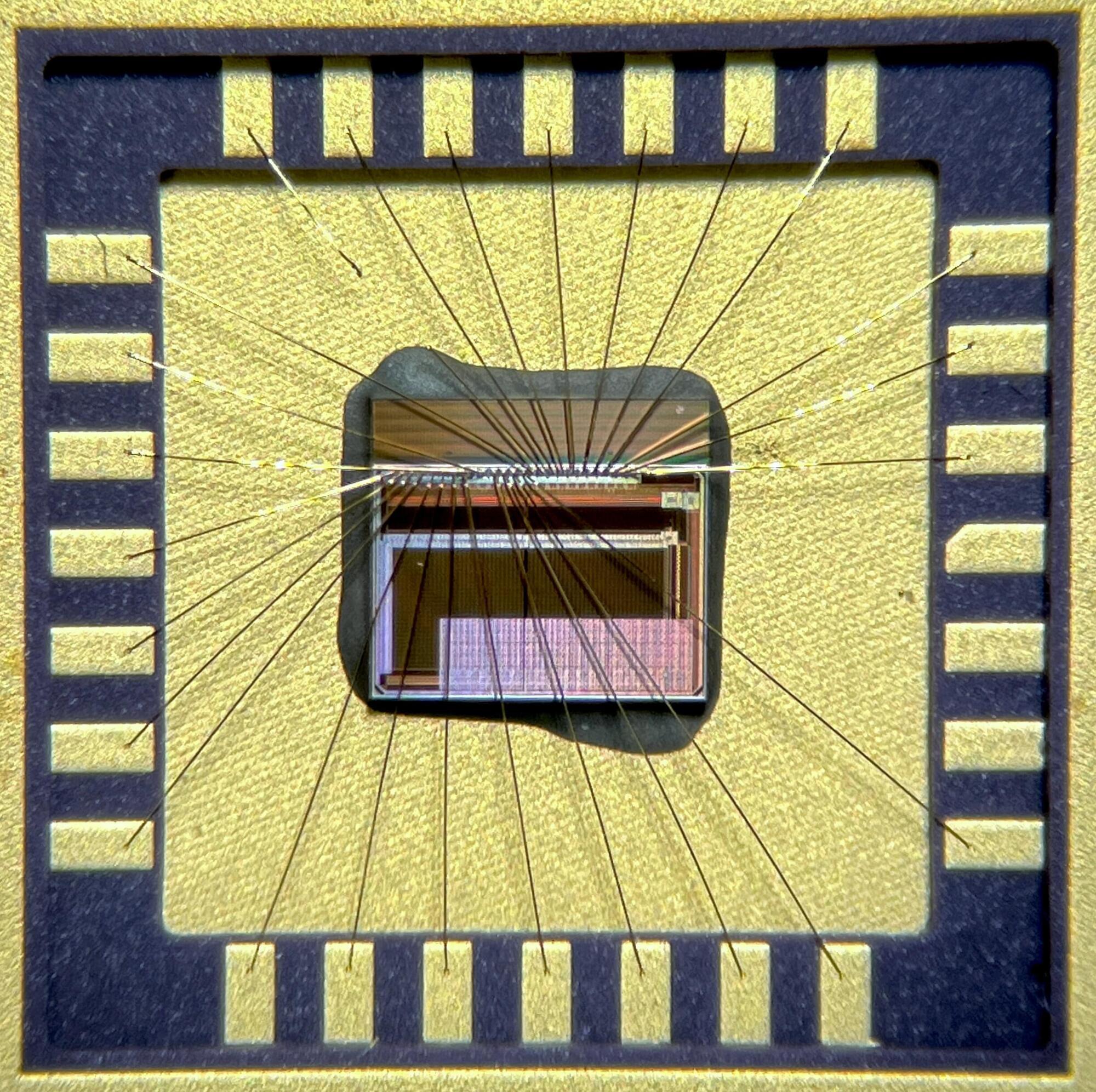

The Physikalisch-Technische Bundesanstalt (PTB), a leading institute in this field, has already realized a large number of optical clocks. Another type could now join them: an optical multi-ion clock with ytterbium-173 ions. It could combine the high accuracy of single-ion clocks with the improved stability gained by using several ions. This is the result of a collaboration between PTB and the Thai metrology institute NIMT.

The team led by Tanja Mehlstäubler reports on this in the current issue of the journal Physical Review Letters. The results are also of interest for quantum computing and, because they offer a new look inside the atom, for fundamental research.