Year 2025 portable dialysis machine.

where C is the molar concentration, R is the ideal gas constant, and T is the absolute temperature.
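The equation these symbols belong to did not survive extraction. The variables match the van 't Hoff relation for the osmotic pressure of an ideal dilute solution, π = CRT. Assuming that is the relation meant, the minimal sketch below evaluates it. The function name and the 300 mmol/L input are hypothetical illustrative choices, not values from this study.

```python
R = 8.314  # ideal gas constant, J mol^-1 K^-1


def osmotic_pressure_pa(c_mol_per_m3: float, t_kelvin: float) -> float:
    """van 't Hoff osmotic pressure, pi = C * R * T, for an ideal dilute solution.

    Assumption: C is the total concentration of osmotically active particles,
    given in mol/m^3 (1 mol/L = 1000 mol/m^3), so the result is in Pa.
    """
    return c_mol_per_m3 * R * t_kelvin


# Hypothetical example: 300 mmol/L (= 300 mol/m^3) at body temperature (37 C)
pi = osmotic_pressure_pa(300.0, 310.15)
print(f"{pi / 1e3:.1f} kPa")
```

For these inputs the result is about 774 kPa, roughly 7.6 atm, which shows why osmotic pressure is a significant term for membrane-based purification of physiological solutions.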

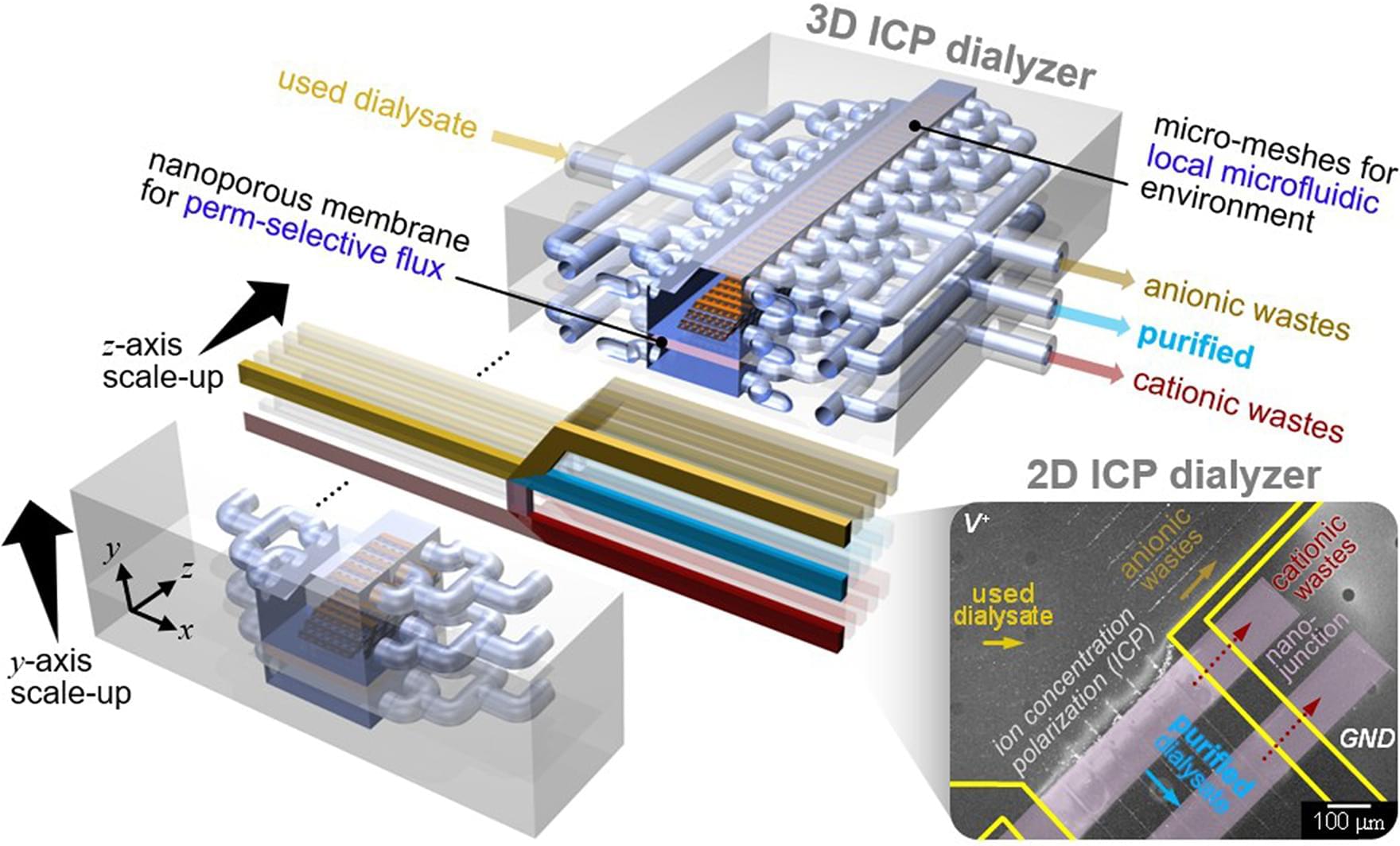

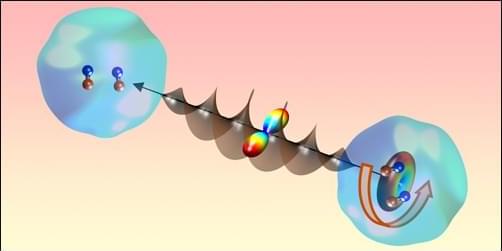

While ED uses both cation and anion exchange membranes to remove charged components, it cannot purify neutral species because they are not affected by the electric field (Fig. 1A). Its application as a dialyzer is therefore limited by its inability to simultaneously remove neutral urea and positively charged creatinine. Despite their merits, none of these techniques can simultaneously purify target species across a wide size range, from salt ions to biomolecular contaminants, in a single step. In contrast, a recently reported purification technology based on ion concentration polarization (ICP), a nanoelectrokinetic phenomenon [28, 29, 30, 31, 32], meets these criteria owing to its distinctive electrical filtration capability and scalability. Briefly, the permselectivity of a nanoporous membrane induces concentration polarization of the electrolyte on both sides of the membrane. For a cation-selective membrane, an ion depletion zone forms on the anodic side of the membrane [33, 34]. Charged species near this zone are rerouted along the steep concentration gradients, so the depletion zone serves as a key site for purifying a broad range of contaminants.
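Why an electric field alone cannot separate neutral species can be made concrete with the Nernst-Einstein relation, which gives the electromigration velocity of an ion of valence z and diffusion coefficient D as v = (zFD / RT)·E. The sketch below is illustrative only: the field strength is hypothetical, and the diffusion coefficients are approximate room-temperature values for dilute aqueous solution.

```python
F = 96485.0  # Faraday constant, C/mol
R = 8.314    # ideal gas constant, J/(mol K)


def drift_velocity(z: int, d_coeff: float, e_field: float, t_kelvin: float = 298.15) -> float:
    """Electromigration velocity v = (z F D / (R T)) * E (Nernst-Einstein relation).

    z: valence (sign included), d_coeff: diffusion coefficient in m^2/s,
    e_field: field strength in V/m. Returns a signed velocity in m/s.
    """
    mobility = z * F * d_coeff / (R * t_kelvin)  # electric mobility, m^2 V^-1 s^-1
    return mobility * e_field


E = 1000.0  # hypothetical field strength, V/m
print(drift_velocity(+1, 1.33e-9, E))  # Na+: migrates along the field
print(drift_velocity(-1, 2.03e-9, E))  # Cl-: migrates against the field
print(drift_velocity(0, 1.38e-9, E))   # urea (z = 0): no electromigration at all
```

Because the velocity is proportional to z, an uncharged molecule such as urea has zero electromigration regardless of field strength. Neutral species can still be carried by bulk (for example, electroosmotic) flow, which this sketch ignores, but that transport does not discriminate them from the solvent.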

In this study, we propose, for the first time, a scalable ICP-based dialysate regeneration device for portable PD. The device removes cationic components through the cation exchange membrane, anionic components by electrostatic repulsion, and neutral species through an electrochemical reaction at the electrode (Fig. 1B). When urea, an uncharged uremic toxin, undergoes direct oxidation at the electrode near the inlet, its concentration around the electrode decreases. The resulting gradient, with urea concentration falling toward the electrode, drives more urea to diffuse into the electrode vicinity, where it is in turn oxidized, so that removal continues. As a result, purified dialysate can be obtained continuously by extracting a stream from the ion depletion zone. The micro/nanofluidic dialysate regeneration platform was scaled up in two and three dimensions using a commercial 3D printer (Fig. 1C).
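The coupling between urea diffusion and its oxidation at the electrode can be illustrated with a one-dimensional diffusion model. This is a minimal sketch under stated assumptions, not the model used in this work. It assumes oxidation is diffusion-limited, so the urea concentration is held at zero at the electrode surface. It also assumes there is no convection and that urea, being uncharged, does not electromigrate. The function name, bulk concentration, diffusion coefficient, and diffusion-layer length are hypothetical illustrative choices.

```python
def urea_depletion_profile(c_bulk, d_coeff, length, n_points=51, t_end=10.0):
    """Solve dC/dt = D * d2C/dx2 on [0, length] with an explicit finite-difference scheme.

    x = 0 is the electrode surface, assumed to oxidize urea instantly (C = 0);
    x = length is held at the bulk concentration c_bulk.
    Returns the concentration profile and the urea flux into the electrode.
    """
    dx = length / (n_points - 1)
    dt = 0.4 * dx * dx / d_coeff  # r = D*dt/dx^2 = 0.4, below the 0.5 stability limit
    r = d_coeff * dt / dx**2
    c = [c_bulk] * n_points
    c[0] = 0.0  # electrode consumes urea at its surface
    t = 0.0
    while t < t_end:
        new = c[:]
        for i in range(1, n_points - 1):
            new[i] = c[i] + r * (c[i + 1] - 2.0 * c[i] + c[i - 1])
        new[0], new[-1] = 0.0, c_bulk  # re-impose both boundary conditions
        c = new
        t += dt
    flux = d_coeff * (c[1] - c[0]) / dx  # mol m^-2 s^-1 toward the electrode
    return c, flux


# Hypothetical inputs: 20 mM urea (20 mol/m^3), D ~ 1.4e-9 m^2/s, 100 um diffusion length
profile, flux = urea_depletion_profile(c_bulk=20.0, d_coeff=1.4e-9, length=100e-6)
print(f"surface C = {profile[0]:.2f}, mid C = {profile[25]:.2f}, bulk C = {profile[-1]:.2f} mol/m^3")
print(f"flux into electrode = {flux:.2e} mol m^-2 s^-1")
print(f"steady-state limit D*C/L = {1.4e-9 * 20.0 / 100e-6:.2e}")
```

The profile rises monotonically from zero at the electrode to the bulk value, matching the gradient described above. After a few diffusion times (L²/D ≈ 7 s for these inputs), it relaxes toward a linear steady state in which the flux approaches D·C_bulk/L. The explicit scheme is chosen for transparency rather than efficiency.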