Just like Taylor Swift’s wildly successful Eras Tour, Nvidia has taken center stage in its own wildly successful AI Era tour. From Wall Street to Main Street, everyone is talking about Nvidia, and rightfully so. By powering the latest innovations in AI, Nvidia achieved 126% revenue growth and 286% net income growth in the past fiscal year, an achievement most companies can only dream about, and became one of the world’s most valuable companies. All of this is a result of taking an existing core competency, its GPU expertise, and successfully applying it to an adjacent, yet still emerging, use case: artificial intelligence (AI).

Much of Nvidia’s success can be attributed to one of its founders and the only CEO the company has ever had, Jensen Huang. Mr. Huang was recently recognized as one of the world’s most accomplished engineers with his election to the National Academy of Engineering (NAE), a nonprofit organization with more than 2,000 peer-elected members from industry, academia, and government that “provides engineering leadership in service to the nation.” This is a huge career achievement, one of the highest professional distinctions possible for an engineer.

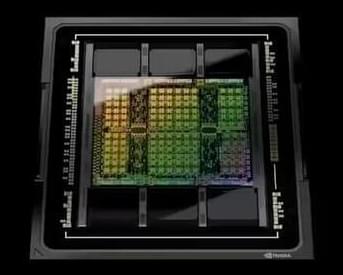

Mr. Huang likes to say that “Nvidia innovates at the speed of light.” To his credit, Mr. Huang has continued to drive this kind of innovation at Nvidia since its inception. Nvidia was one of many companies developing graphics in the early days of PC gaming and one of the few to survive. Nvidia pioneered the Graphics Processing Unit (GPU) and was the first company to promote the concept of using GPUs for general computing purposes, which became known as GPGPU compute. That work led to the development of the Compute Unified Device Architecture (CUDA) software framework, which is aimed at fully utilizing the massively parallel processing capabilities of Nvidia GPUs. With the advent of deep-learning techniques to train neural network models, Nvidia quickly adapted both its hardware and software solutions to enable exponential growth in processing capabilities, which in turn led to the traditional and generative AI innovations that are sweeping the world today.