I sat down with Dario Amodei in Bangalore. He built Claude, but he started as a biologist looking for a tool to cure disease. Today, he’s at the helm of an AI revolution that he compares to a tsunami that society is actively ignoring. We got into the heavy stuff: why Anthropic secretly withheld a working model before ChatGPT existed, whether AI is on the verge of consciousness, and whether outsourcing our thinking is going to make humans measurably stupider. Dario makes the case that coding is a dying skill, that critical thinking is our last real edge, and that the absurd concentration of power in AI right now is a massive problem, even though he’s one of the people holding it.

00:00 Introduction.

06:13 Scaling laws explained simply.

13:27 Trust, humility, and corporate motives.

22:44 Using Claude personally, AI knowing you.

31:03 Rich people criticizing their own system.

37:05 India’s role and IT partnerships.

44:15 Will AI surpass humans at everything.

50:17 Career advice for young Indians.

56:38 Open source vs closed AI models.

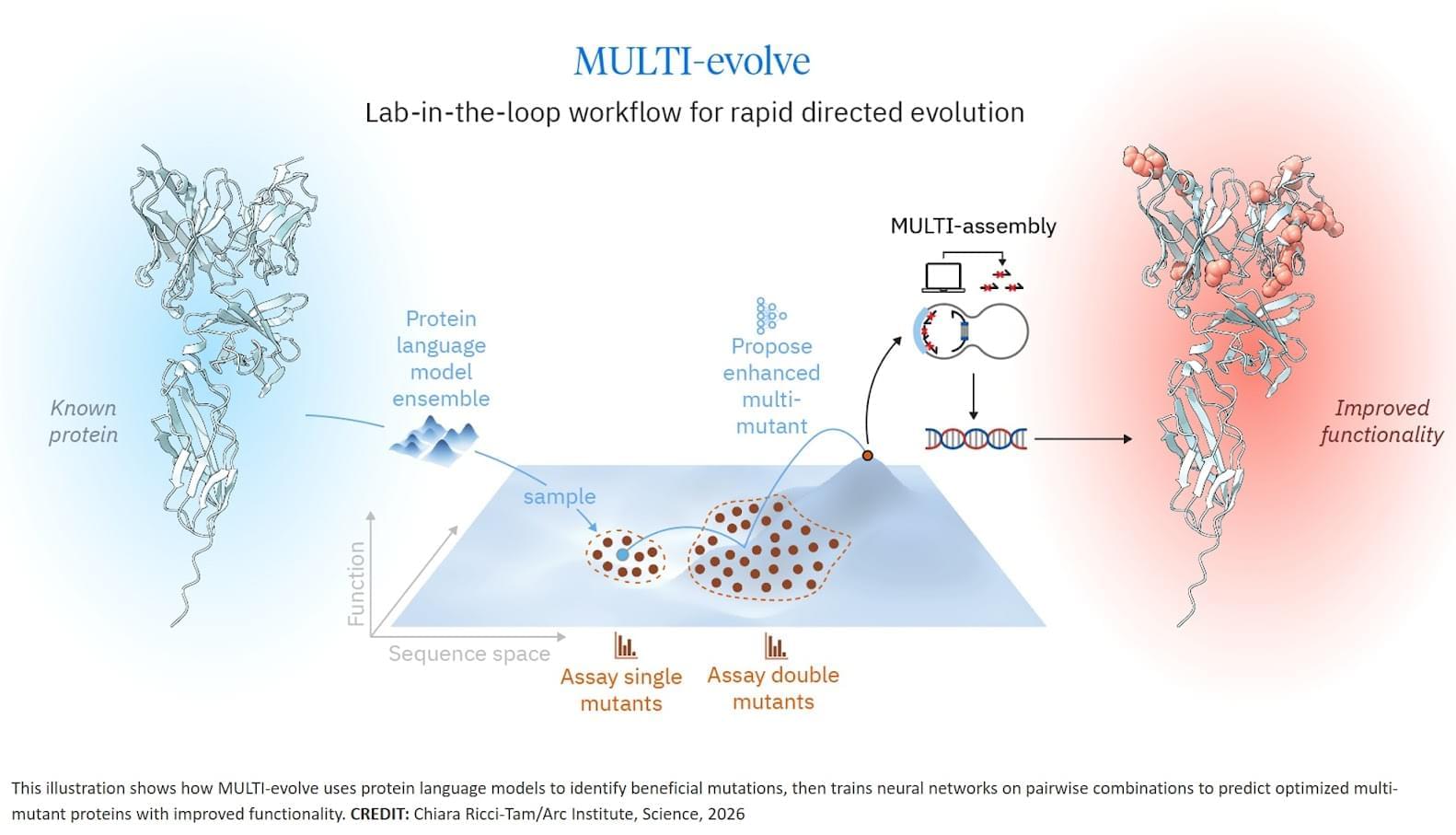

1:02:40 Biotech as the next big bet.

#NikhilKamath Co-founder of Zerodha and Gruhas.

Host of ‘WTF is’ & ‘People By WTF’ Podcast.

Twitter: https://twitter.com/nikhilkamathcio/

Instagram: / nikhilkamathcio.

LinkedIn: https://www.linkedin.com/in/nikhilkam… / nikhilkamathcio

Facebook: / nikhilkamathcio.

#Darioamodei

LinkedIn: / dario-amodei

X: https://twitter.com/DarioAmodei

Instagram: / dario.amodei

Watch ‘WTF is’ Podcast on Spotify: https://tinyurl.com/4nsm4ezn

Watch ‘People by WTF’ Podcast on Spotify: https://tinyurl.com/yme92c59

Watch ‘WTF Online’ on Spotify: https://tinyurl.com/4tjua4th

#WTFiswithnikhilkamath #PeopleByWTF #WTFOnline
