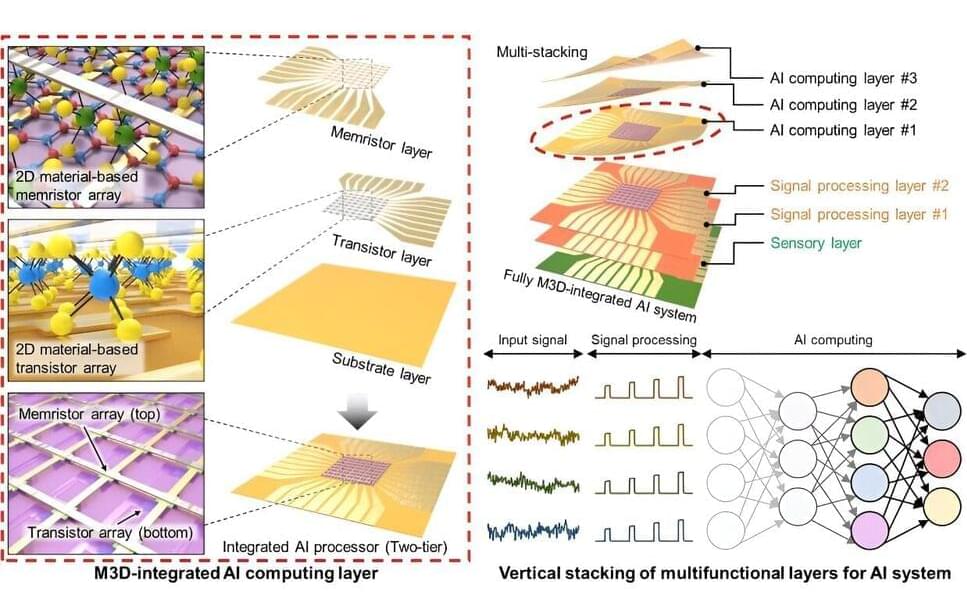

Multifunctional computer chips have evolved to do more with integrated sensors, processors, memory and other specialized components. However, as chips have expanded, the time required to move information between functional components has also grown.

“Think of it like building a house,” said Sang-Hoon Bae, an assistant professor of mechanical engineering and materials science at the McKelvey School of Engineering at Washington University in St. Louis. “You build out laterally and up vertically to get more function, more room to do more specialized activities, but then you have to spend more time moving or communicating between rooms.”

To address this challenge, Bae and a team of international collaborators, including researchers from the Massachusetts Institute of Technology, Yonsei University, Inha University, Georgia Institute of Technology and the University of Notre Dame, demonstrated monolithic 3D integration of layered 2D materials into novel processing hardware for artificial intelligence (AI) computing.