Devin comes with advanced software development capabilities, including coding, debugging, and problem-solving. Here’s what you need to know about it.

Coral.ai @raspberrypi

Raspberry Pi 4 👉 https://amzn.to/3SBCRW0

Coral AI USB Accelerator 👉 https://amzn.to/3SBGrzM

Raspberry Pi Camera V3 Module 👉 https…

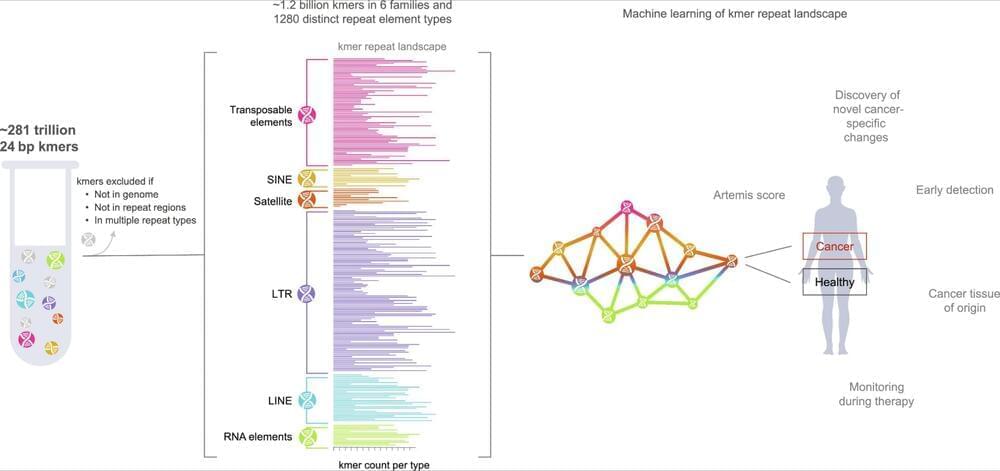

A machine learning pipeline named ARTEMIS captures how the landscape of repeat genomic sequences shifts in patients with cancer, and could facilitate earlier detection and monitoring of tumor progression.

ARTEMIS is a new approach to characterizing genome-wide repeat elements in cancer and cell-free DNA.

Apple acquired Canada-based company DarwinAI earlier this year to build out its AI team, reports Bloomberg. DarwinAI created AI technology for inspecting components during the manufacturing process, and it also had a focus on making smaller and more efficient AI systems.

DarwinAI’s website and social media accounts have been taken offline following Apple’s purchase. Dozens of former DarwinAI employees have now joined Apple’s artificial intelligence division. AI researcher Alexander Wong, who helped build DarwinAI, is now a director in Apple’s AI group.

Devin, SIMA, Figure 1, all in 24 hours. What does it mean, and are AI models taking the wheel? I’ll go through 5 relevant papers and 11 articles to get you all the relevant details, from what exactly Devin accomplished, and didn’t, to DeepMind’s new AGI-attempt-in-3D (SIMA) to just how far AI agents have come and what that means for the future of jobs. There’ll also be a guest star … discussing … me?

AI Insiders [Exclusive videos, Discord, Interviews and More]: / aiexplained.

Devin: https://www.cognition-labs.com/blog.

Devin YT: • AI trains an AI!

SWE-bench: https://arxiv.org/pdf/2310.06770.pdf.

Cognition Twitter: / with_replies.

Reality Check: / 1768056098995814836

Karpathy Tweet: / 1767598414945292695

Bloomberg: https://www.bloomberg.com/news/articl…

Chollet Prediction: / 1767935813646716976

https://magic.dev/

SIMA: https://deepmind.google/discover/blog…

SIMA Paper: https://storage.googleapis.com/deepmi…

MobileAgent: https://arxiv.org/pdf/2401.16158.pdf.

OpenAI Agent: https://www.theinformation.com/articl…

Red Dead Redemption AI: https://arxiv.org/pdf/2403.03186.pdf.

RT-X: https://deepmind.google/discover/blog…

Figure 1 Hz: / 1767928771875868677

MasterPlan: https://www.figure.ai/master-plan.

Unit Cost: https://www.cnbc.com/2024/02/29/robot…

MMMU: https://arxiv.org/pdf/2311.16502.pdf.

https://github.com/MMMU-Benchmark/MMMU

Jeff Clune Tweet: / 1768320487627579466

Semianalysis: https://www.semianalysis.com/p/ai-dat…

Huang AGI Quote: https://www.reuters.com/technology/nv…

Altman Quote: https://www.marketingaiinstitute.com/.…

US Govt Report: https://twitter.com/jeffclune?ref_src…

Last summer, Breakthrough Energy, Google Research, and American Airlines announced some promising results from a research collaboration, as first reported in the New York Times. They employed satellite imagery, weather data, software models, and AI prediction tools to steer pilots over or under areas where their planes would be likely to produce contrails. American Airlines used these tools in 70 test flights over six months, and subsequent satellite data indicated that they reduced the total length of contrails by 54%, relative to flights that weren’t rerouted.

There would, of course, be costs to implementing such a strategy. It generally requires more fuel to steer clear of these areas, which also means the flights would produce more greenhouse-gas emissions (more on that wrinkle in a moment).

More fuel also means greater expenses, and airlines aren’t likely to voluntarily implement such measures if they’re not relatively affordable.

Pilot season has officially begun for the world of humanoid robotics. Last year, Amazon began testing Agility’s Digit robots in select fulfillment centers, while this January, Figure announced a deal with BMW. Now Apptronik is getting in on the action, courtesy of a partnership with Mercedes-Benz.

According to the Austin-based robotics startup, “as part of the agreement Apptronik and Mercedes-Benz will collaborate on identifying applications for highly advanced robotics in Mercedes-Benz Manufacturing.” Specific figures have not been disclosed, as is customary for these sorts of deals. Generally, the actual number of systems included in a pilot is fairly small, understandably so given the early nature of the technology.

Even so, these deals are regarded as a win-win for both parties. Apptronik can demonstrate clear interest from a leading automotive name, while Mercedes signals to customers and shareholders alike that it’s looking to the future. What comes next is what really matters. Should the pilot go well, causing the carmaker to put in a big order, that would be a massive feather in Apptronik’s cap, and for the industry at large.

Apple has added another AI startup to its acquisition list with Canada-based DarwinAI, which specializes in vision-based tech to observe components during manufacturing to improve efficiency, Bloomberg reported.

While Apple and DarwinAI haven’t announced this deal, several members of the startup’s team joined Apple’s machine learning teams in January, as per their LinkedIn profiles.

DarwinAI had raised over $15 million in funding across various rounds from investors, including BDC Capital’s Deep Tech Venture Fund, Honeywell Ventures, Obvious Ventures and Inovia Capital. BDC Capital confirms on its website that it has received an exit from DarwinAI, whereas Obvious Ventures has updated its portfolio to reflect that the startup has been acquired.