Tech giants dominate research, but the line between a real breakthrough and a product showcase can be fuzzy. Some scientists have had enough.

Mark Rober’s Tesla crash story and video on self-driving cars face significant scrutiny for authenticity, bias, and misleading claims, raising doubts about his testing methods and the reliability of the technology he promotes.

Questions to inspire discussion.

Tesla Autopilot and Testing

🚗 Q: What was the main criticism of Mark Rober’s Tesla crash video?
A: The video was criticized for not using Full Self-Driving mode, even though it was shown in the thumbnail and can be activated the same way as Autopilot.

🔍 Q: How did Mark Rober respond to the criticism about not using Full Self-Driving mode?
A: Rober called it a distinction without a difference and said he was confident the results would be the same if he reran the experiment in Full Self-Driving mode.

🛑 Q: What might have caused Autopilot to disengage during the test?

Unveiled at CES 2025, Roborock’s innovative robot vacuum with an arm, the Saros Z70, is now available for pre-order as a bundle in the US store. According to the company, consumers can get the Saros Z70 for $1,899 bundled with another Roborock product. The device is expected to become available in early May.

Roborock previewed the Saros Z70 to BGR shortly before its official announcement at CES, and the company’s vision for the future of the robot vacuum segment is impressive. Roborock says the Saros Z70 features a foldable five-axis robotic arm that can deploy itself to clean previously obstructed areas and put away small items weighing under 300 g, such as socks, small towels, tissue paper, and sandals.

While I can understand the appeal of a robot vacuum going a step further (I think the ability to climb over obstacles, as on the latest Roborock Qrevo Curv and Saros 10R, is more interesting), it feels like a bit much just to avoid picking your dirty socks up off the floor, you know?

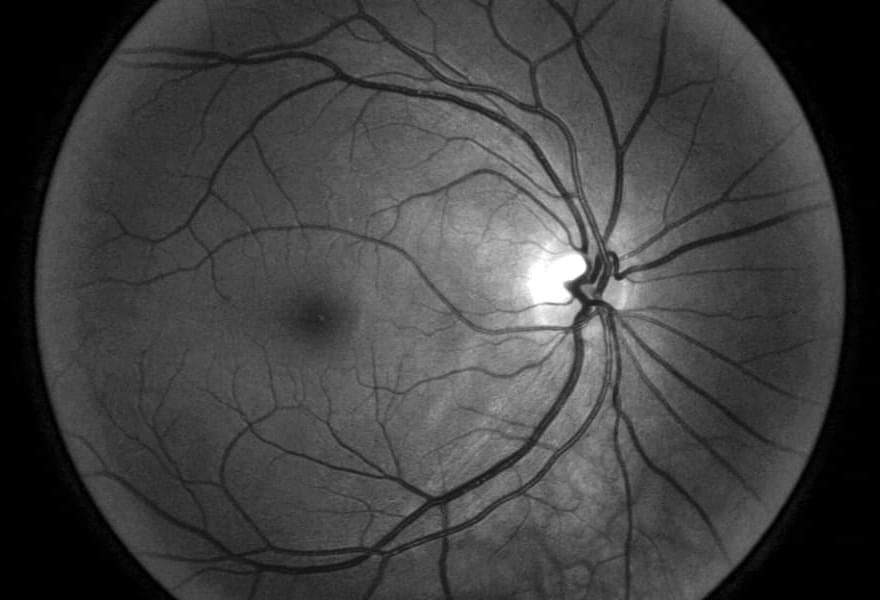

The healthcare industry is undergoing a significant shift toward digital health technology, driven by growing demand for real-time, continuous health monitoring and disease diagnostics [1, 2, 3]. The rising prevalence of chronic diseases such as diabetes, heart disease, and cancer, coupled with an aging population, has increased the need for remote and continuous health monitoring [4, 5, 6, 7]. This has led to the emergence of artificial intelligence (AI)-based wearable sensors that can collect, analyze, and transmit real-time health data to healthcare providers, allowing them to make efficient, data-driven decisions. Wearable sensors have become increasingly popular because they offer a non-invasive and convenient means of monitoring patient health. These sensors can track various health parameters, such as heart rate, blood pressure, oxygen saturation, skin temperature, physical activity levels, and sleep patterns; biochemical markers, such as glucose, cortisol, lactate, electrolytes, and pH; and environmental parameters [1, 8, 9, 10]. Wearable health technology, which spans first-generation devices such as fitness trackers and smartwatches as well as current wearable sensors, is a powerful tool for addressing healthcare challenges [2].

The data collected by wearable sensors can be analyzed using machine learning (ML) and AI algorithms to provide insights into an individual’s health status, enabling early detection of health issues and personalized healthcare [6, 11]. One of the most significant advantages of AI-based wearable health technology is that it promotes preventive healthcare, enabling individuals and healthcare providers to address symptomatic conditions proactively, before they become more severe [12, 13, 14, 15]. Wearable devices can also encourage healthy behavior by providing incentives, reminders, and feedback, for example prompting individuals to stay active, stay hydrated, and eat healthily, supported by measurements of hydration biomarkers and nutrients.
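
As a minimal illustration of the kind of analysis described above (not a method taken from the cited works), the sketch below flags heart-rate readings that deviate sharply from a rolling baseline using a z-score. The function name `flag_anomalies`, the window size, the threshold, and the sample readings are all illustrative assumptions; real clinical systems would rely on validated models.

```python
from collections import deque
from statistics import mean, pstdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the rolling baseline.

    A reading is flagged when its z-score relative to the previous `window`
    readings exceeds `threshold`. Both parameters are illustrative choices.
    """
    history = deque(maxlen=window)  # keeps only the most recent `window` readings
    flagged = []
    for i, bpm in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Guard against a perfectly flat baseline (sigma == 0).
            if sigma > 0 and abs(bpm - mu) / sigma > threshold:
                flagged.append(i)
        history.append(bpm)
    return flagged


# Hypothetical resting heart-rate samples in beats per minute.
samples = [72, 74, 71, 73, 72, 75, 73, 128, 74, 72]
print(flag_anomalies(samples))  # prints [7]
```

One design caveat: in this simple version the spike itself enters the baseline and inflates the standard deviation for the next few readings, which can mask a second anomaly; a more careful sketch might exclude flagged points from `history`.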

NVIDIA has just unveiled the Isaac GR00T N1, a foundation model that is revolutionizing humanoid robotics. This AI-driven system can learn tasks, make decisions, and adapt like never before!

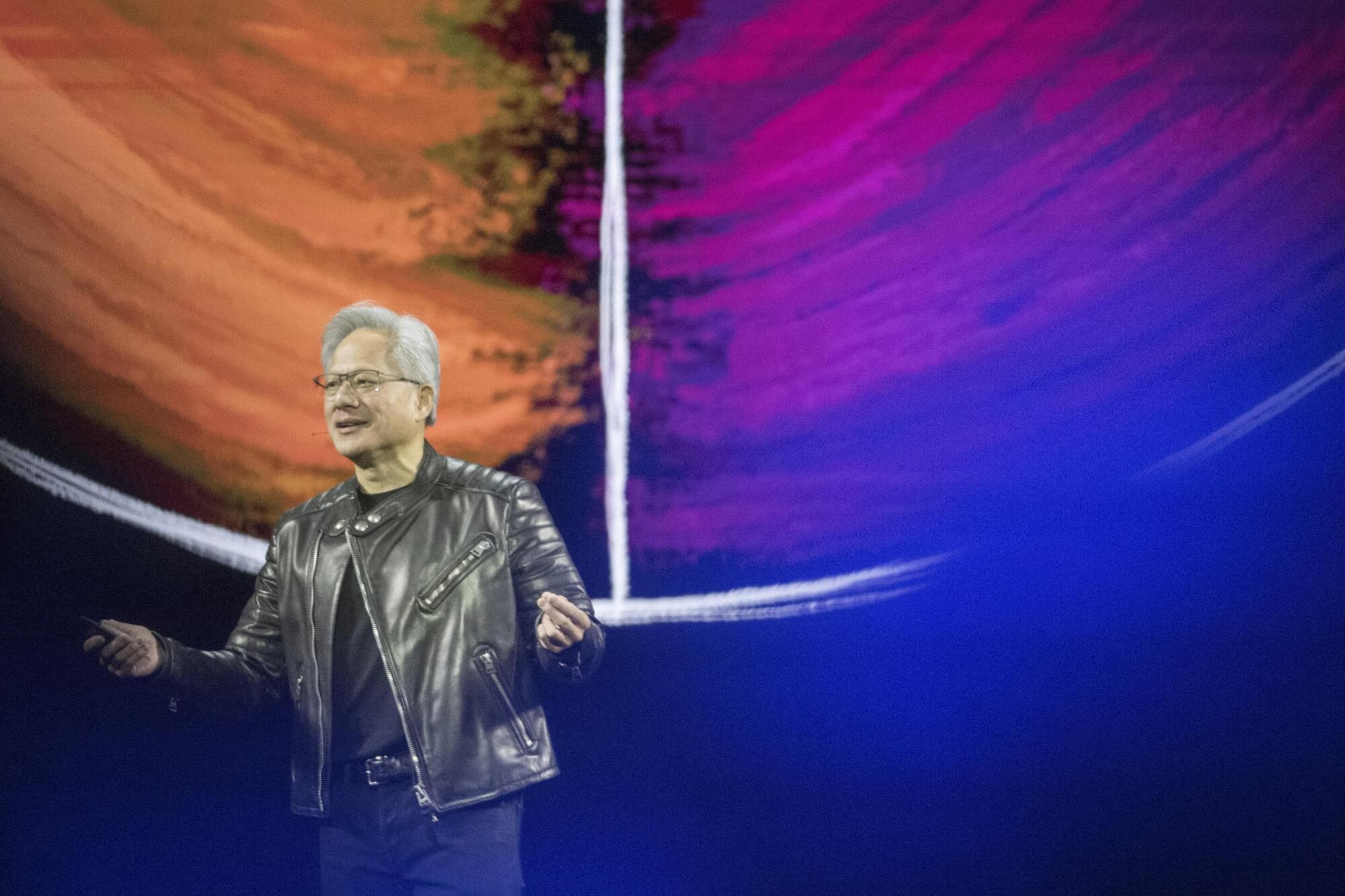

At GTC 2025, NVIDIA CEO Jensen Huang revealed the Isaac GR00T N1, a next-generation AI foundation model for humanoid robots, designed to help them learn skills with unprecedented efficiency. It uses a dual-system approach: one system for fast, reflexive actions and another for slower, strategic thinking. NVIDIA also introduced Newton, a physics engine developed in collaboration with Google DeepMind and Disney, aimed at enhancing robotic motion.
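
The dual-system idea can be sketched as a control loop in which a fast "System 1" controller acts on every tick while a slower "System 2" planner updates the goal only every few ticks. This is a toy illustration under our own assumptions: the class names `SlowPlanner` and `FastController`, the one-dimensional position task, the gain, and the replanning interval are hypothetical and do not reflect NVIDIA's actual GR00T N1 implementation or API.

```python
from dataclasses import dataclass


@dataclass
class SlowPlanner:
    """Hypothetical 'System 2': deliberates rarely and picks the next target."""
    waypoints: list
    index: int = 0

    def plan(self, position: float) -> float:
        # Advance past any waypoint the robot is already close to (strategic step).
        while (self.index < len(self.waypoints) - 1
               and abs(self.waypoints[self.index] - position) <= 0.5):
            self.index += 1
        return self.waypoints[self.index]


@dataclass
class FastController:
    """Hypothetical 'System 1': reacts on every tick toward the current target."""
    gain: float = 0.5

    def act(self, position: float, target: float) -> float:
        # Proportional step toward the target (reflexive action).
        return self.gain * (target - position)


def run(ticks: int = 20, plan_every: int = 5) -> float:
    planner = SlowPlanner(waypoints=[2.0, 5.0])
    controller = FastController()
    position, target = 0.0, 0.0
    for t in range(ticks):
        if t % plan_every == 0:  # slow loop: replan only occasionally
            target = planner.plan(position)
        position += controller.act(position, target)  # fast loop: every tick
    return position


print(round(run(), 3))  # prints 5.0
```

The split mirrors the description above: the controller never decides where to go, and the planner never issues low-level motion commands.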

Additionally, NVIDIA’s Isaac GR00T Blueprint enables large-scale training with synthetic data. In just 11 hours, the system generated over 780,000 training examples, drastically improving robot accuracy. These advancements could reshape industries by making humanoid robots more intelligent and useful in real-world applications.
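
For a sense of scale, simple division of the reported totals, assuming uniform throughput over the 11 hours, implies an average generation rate of at least roughly 71,000 examples per hour, or about 20 per second:

$$
\frac{780{,}000\ \text{examples}}{11\ \text{h}} \approx 70{,}909\ \text{examples/h},
\qquad
\frac{70{,}909\ \text{examples/h}}{3600\ \text{s/h}} \approx 19.7\ \text{examples/s}
$$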

This research note uses data from a simulation experiment to illustrate the very real effects of monolithic views of technology’s potential on decision-making within the Homeland Security and Emergency Management (HSEM) field. Specifically, a population of national security decision-makers from across the United States participated in an experimental study that examined their responses to encountering different kinds of AI agency in a crisis situation. The results illustrate wariness of overstepping and unwillingness to be assertive when AI tools are observed shaping key situational developments, something not apparent when AI is either absent or used as a limited aid to human analysis. These effects are mediated by levels of respondent training. Of great concern, however, these restraining effects disappear, and the impact of education in steering professionals toward prudent outcomes is minimized, for those individuals who profess to see AI as a fully viable replacement for their professional practice. These findings constitute proof of a “Great Machine” problem within professional HSEM practice. Willingness to import grand, singular assumptions about emerging technologies into operational decision-making clearly encourages ignorance of technological nuance. The result is a serious challenge for HSEM practice, one that requires more sophisticated solutions than simply raising awareness of AI.

Keywords: artificial intelligence; cybersecurity; experiments; decision-making.

Nvidia co-founder and CEO Jensen Huang kicked off the company’s artificial intelligence developer conference on Tuesday by telling a crowd of thousands that AI is going through “an inflection point.”

At GTC 2025—dubbed the “Super Bowl of AI”—Huang focused his keynote on the company’s advancements in AI and his predictions for how the industry will move over the next few years. Demand for GPUs from the top four cloud service providers is surging, he said, adding that he expects Nvidia’s data center infrastructure revenue to hit $1 trillion by 2028.

Huang’s highly anticipated announcement revealed more details about Nvidia’s next-generation graphics architectures: Blackwell Ultra and Vera Rubin, the latter named for the famous astronomer. Blackwell Ultra is slated for the second half of 2025, while its successor, the Rubin AI chip, is expected to launch in late 2026. Rubin Ultra will follow in 2027.