Words of the prophet.

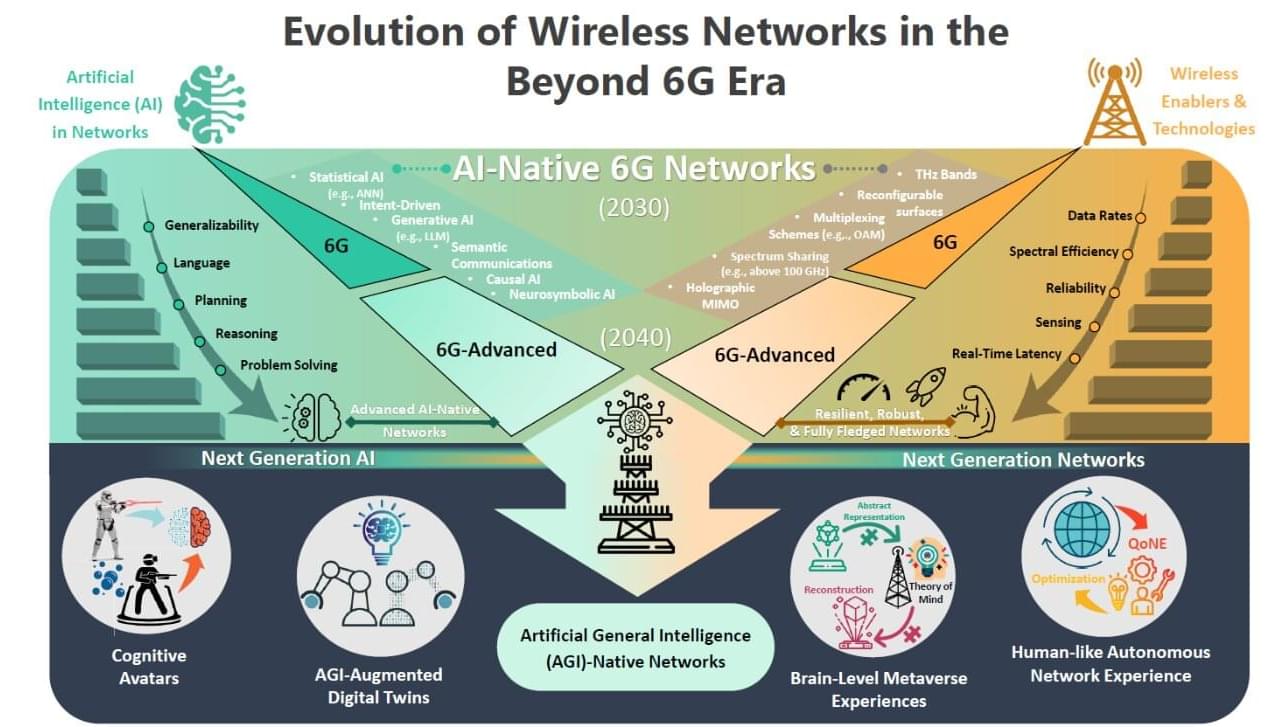

What happens when AI surpasses human intelligence and accelerates its own evolution beyond our control? This is the Singularity, the point at which technology reshapes the world in ways we can’t yet imagine.

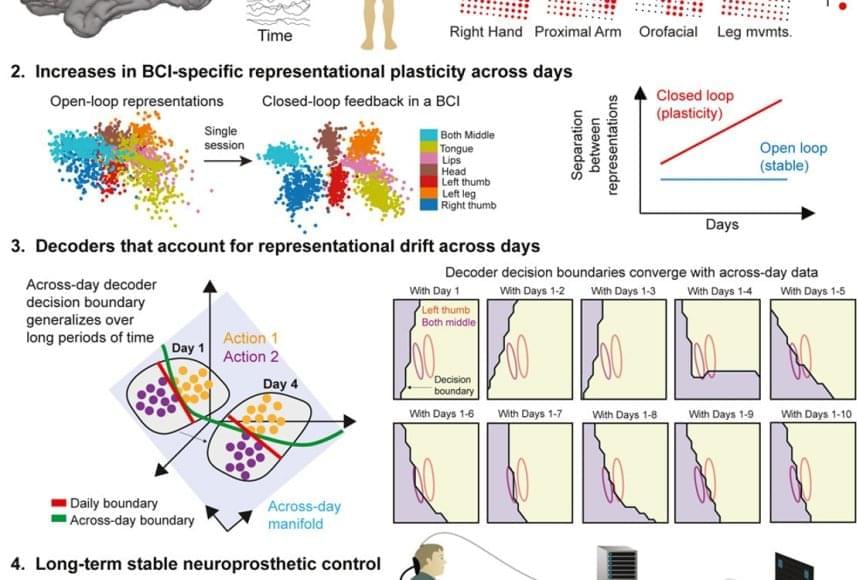

Futurist Ray Kurzweil predicts that by 2045, AI will reach this point, merging with human intelligence through Brain-Computer Interfaces (BCIs) and redefining the future of civilization. But as we move closer to this reality, we must ask: Will the Singularity be humanity’s greatest leap or its greatest risk?

Chapters.

00:00 - 00:48 Intro

00:48 - 01:51 Technological Singularity

01:51 - 05:09 Kurzweil’s Predictions and Accuracy

05:09 - 07:32 The Path to the Singularity

07:32 - 08:51 Brain-Computer Interfaces (BCIs)

08:51 - 12:14 The Singularity: What Happens Next?

12:14 - 14:14 The Concerns: Are We Ready?

14:14 - 15:11 The Countdown to 2045

The countdown has already begun. Are we prepared for what’s coming?

#RayKurzweil #Singularity #AI #FutureTech #ArtificialIntelligence #BrainComputerInterface