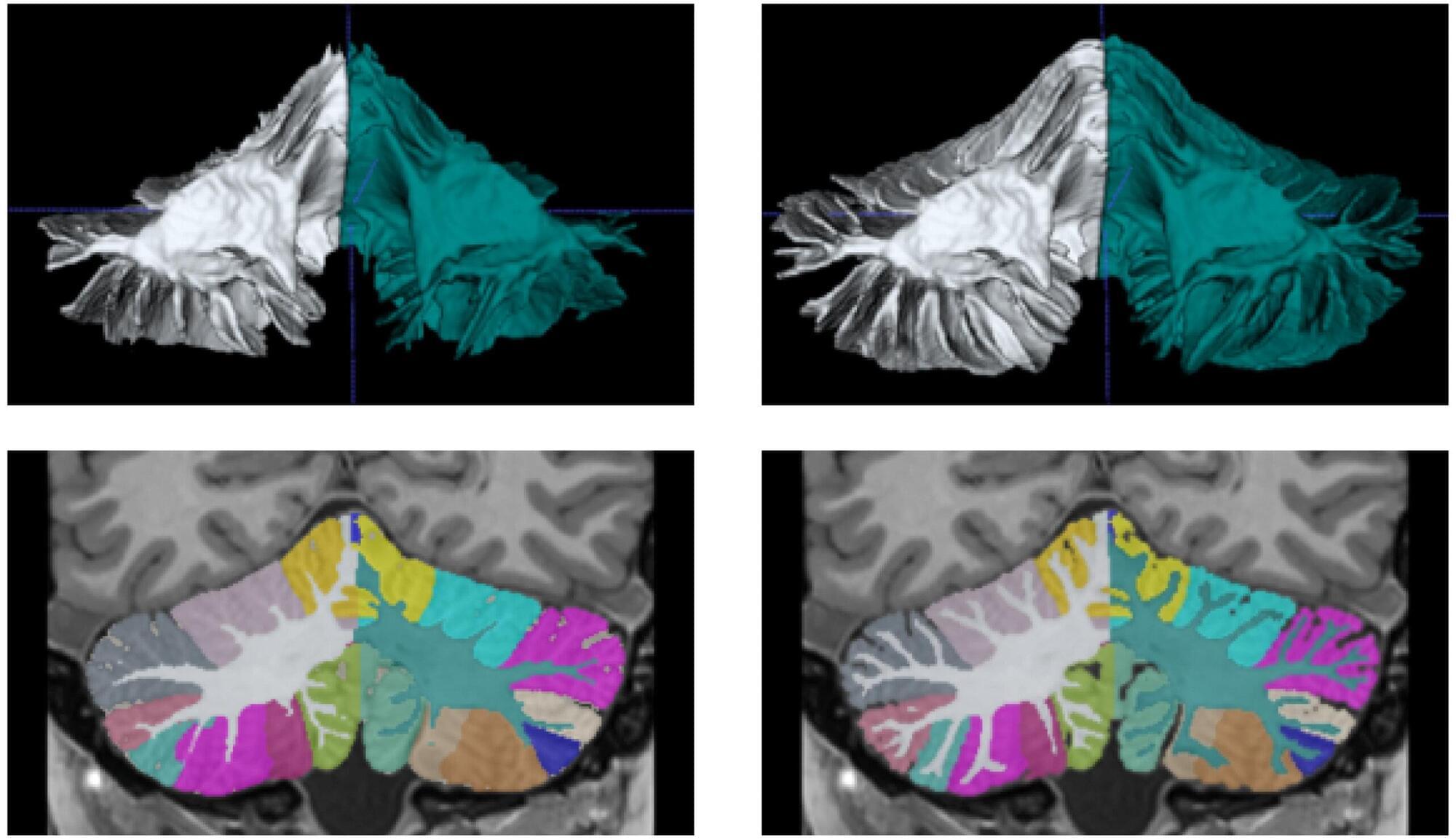

A team of researchers from the Universitat Politècnica de València (UPV) and the French National Center for Scientific Research (CNRS) has developed the world’s most advanced software for studying the human cerebellum in high-resolution magnetic resonance imaging (MRI) scans.

The software, called DeepCeres, will help researchers study and diagnose diseases such as amyotrophic lateral sclerosis (ALS), schizophrenia, autism and Alzheimer’s disease. The work of the Spanish and French researchers has been published in the journal NeuroImage.

Despite its small size compared to the rest of the brain, the cerebellum contains approximately 50% of all brain neurons and plays a fundamental role in cognitive, emotional and motor functions.