Human Augmentation Examples. What are examples of augmentations to the human body? Should we allow such augmentation methods and technologies? What are the dangers and risks involved? How can society benefit from these developments?

At the Brave New World conference 2020, I gave this webinar, titled ‘The Human Body. The Next Frontier’.

Please leave a comment if you like the video or if you have a question!

Content:

0:00 Start.

0:29 Introduction by moderator Jim Stolze.

1:05 My story.

2:54 Jetson Fallacy (by Professor Michael Bess).

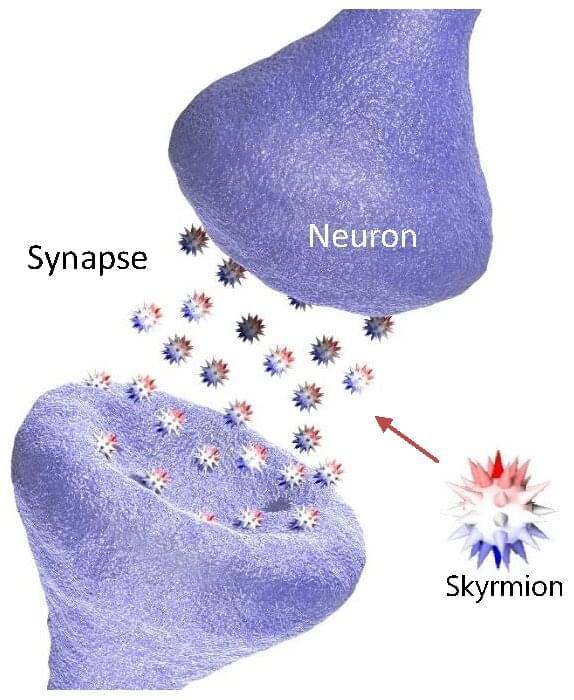

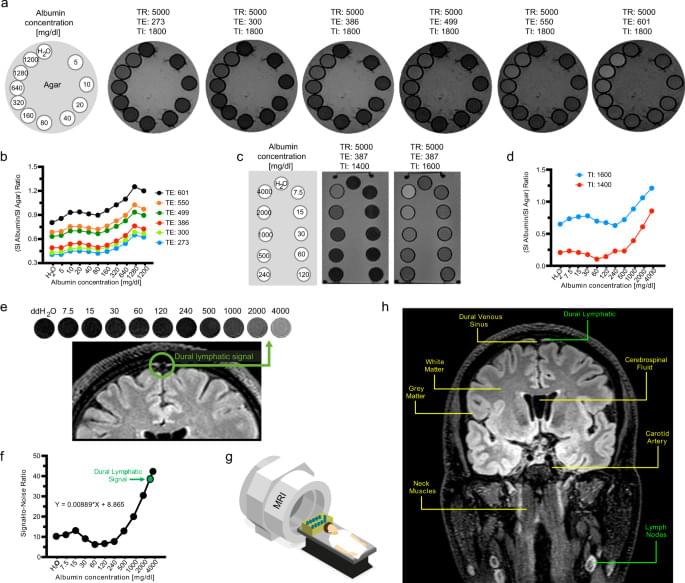

3:43 Examples: data, genetic modification, and neuroscience.

6:09 Benefits.

7:09 Risks and dangers.

8:28 Stakeholders: companies, countries, and military organizations.

11:54 Solutions: regulation, debate, and stories.

14:42 My conclusion.

15:03 End.

🙌 Hire me:

Keynote or webinar: https://peterjoosten.org.

🚀 Want to know more: