When Dr. Shiran Barber-Zucker joined the lab of Prof. Sarel Fleishman as a postdoctoral fellow, she chose to pursue an environmental dream: breaking down plastic waste into useful chemicals. Nature has clever ways of decomposing tough materials: Dead trees, for example, are recycled by white-rot fungi, whose enzymes degrade wood into nutrients that return to the soil. So why not coax the same enzymes into degrading man-made waste?

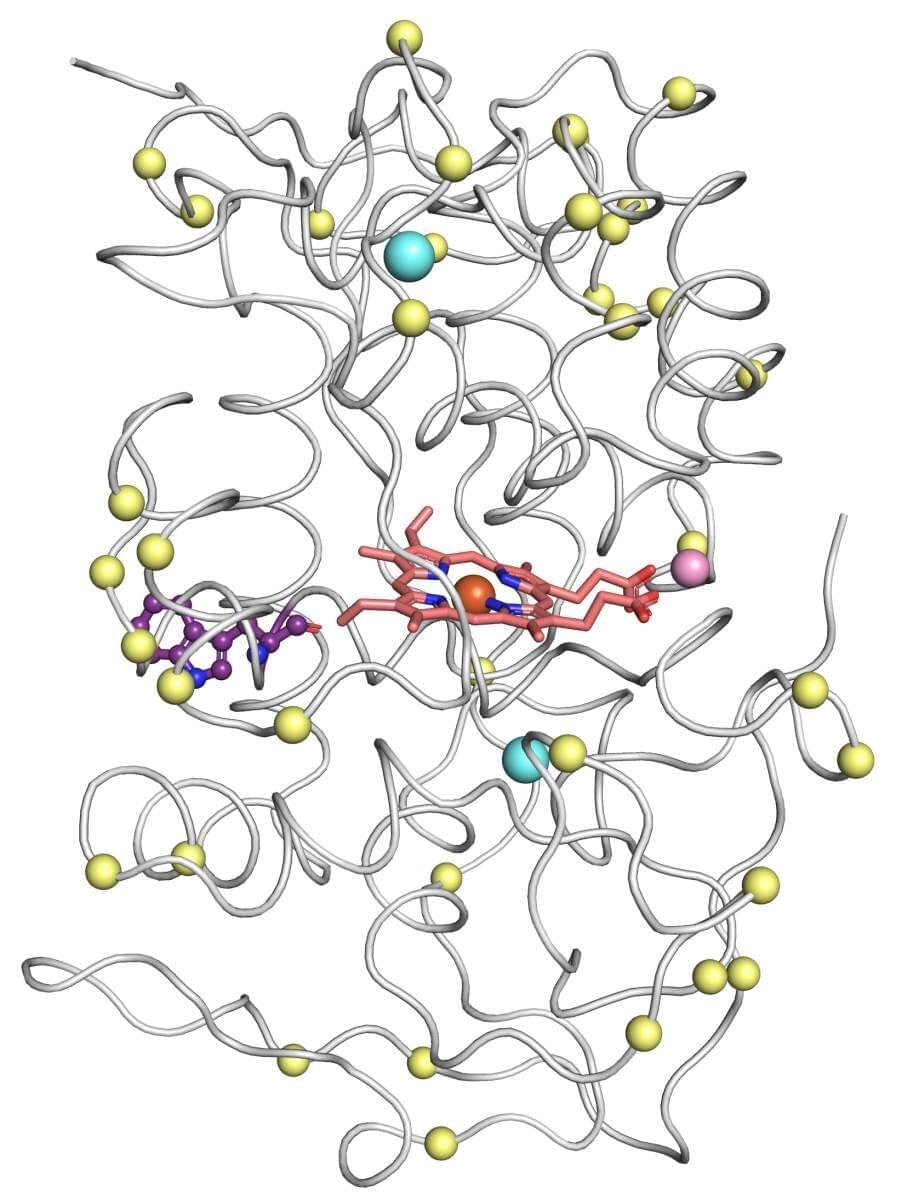

Barber-Zucker’s problem was that these enzymes, called versatile peroxidases, are notoriously unstable. “These natural enzymes are real prima donnas; they are extremely difficult to work with,” says Fleishman, of the Biomolecular Sciences Department at the Weizmann Institute of Science. Over the past few years, his lab has developed computational methods, now used by thousands of research teams around the world, to design enzymes and other proteins with enhanced stability and other desired properties. To apply these methods, however, researchers must know the protein’s precise molecular structure. This typically means that the protein must be stable enough to form crystals, which can be bombarded with X-rays to reveal the protein’s three-dimensional structure. The lab’s algorithms then tweak this structure to design an improved protein that doesn’t exist in nature.