Immune and stromal orchestration of the pre-metastatic niche👇

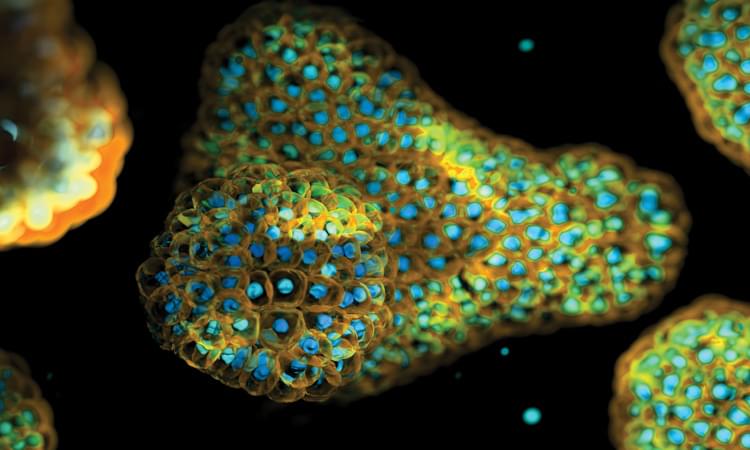

✅Priming distant organs before tumor cell arrival: Primary tumors actively condition distant organs by releasing cytokines and other soluble factors, as well as tumor-derived exosomes. These signals recruit monocytes and neutrophils and reprogram resident immune and stromal cells. Together, these changes initiate a pre-metastatic niche (PMN) that is permissive to future metastatic seeding.

✅Role of monocytes and macrophages: Recruited monocytes differentiate into inflammatory or immunosuppressive macrophages, depending on local cues. In organs such as the lung and liver, these macrophages drive extracellular matrix (ECM) remodeling and fibrotic matrix deposition, and they secrete growth factors. The result is a supportive scaffold for disseminated tumor cells (DTCs).

✅Neutrophils as niche architects: Neutrophils contribute to PMN formation by releasing matrix metalloproteinases (MMPs), inflammatory cytokines, and neutrophil extracellular traps (NETs). These mediators remodel tissue architecture, amplify inflammation, and support tumor cell survival and the reactivation of dormant cells.

✅Organ-specific niche specialization: Different organs develop distinct PMNs. In the lung, inflammatory macrophages and neutrophils drive ECM remodeling and leukotriene signaling. In the liver, fibrosis, granulins, and chemokine-driven immune cell recruitment create an immunosuppressive environment that favors metastatic colonization.

✅Fate of disseminated tumor cells: Once DTCs arrive, they face several possible outcomes. Some are eliminated by immune surveillance, others enter long-term dormancy, and a subset evades immunity to initiate metastatic outgrowth. ECM composition, immune pressure, and stromal signaling are key determinants of which fate a given DTC follows.

✅Dormancy and reawakening: Dormant DTCs can persist in a latent state for prolonged periods, sometimes years. Renewed ECM remodeling, inflammatory signaling, or immune suppression can trigger their reawakening, leading to renewed proliferation and metastatic progression.