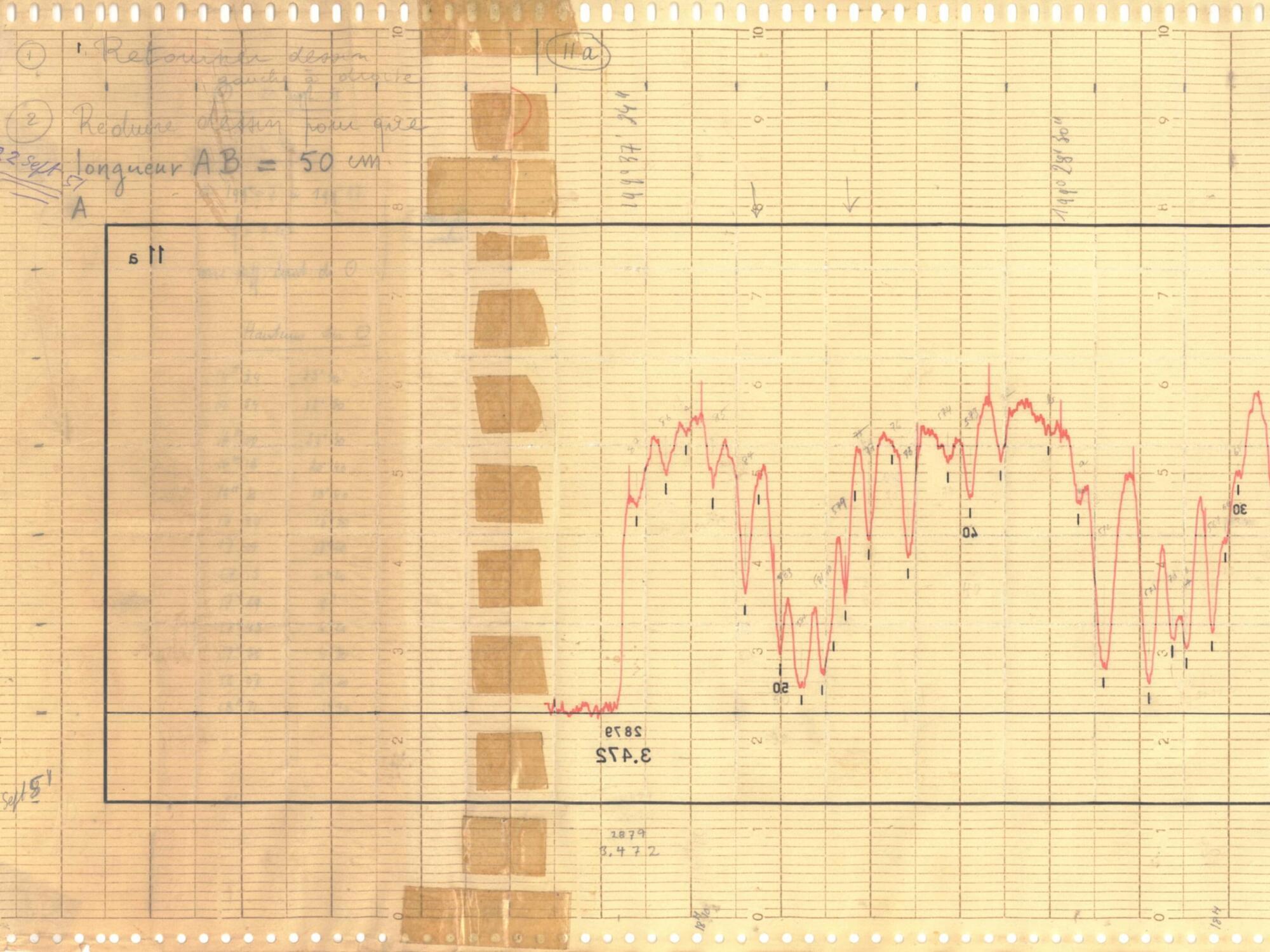

An international research team led by the University of Bremen has, for the first time, detected chlorofluorocarbons (CFCs) in Earth’s atmosphere in historical measurements dating from 1951, 20 years earlier than previously known. This surprising glimpse into the past was made possible by analyzing archived measurement data from the Jungfraujoch research station in the Swiss Alps. The study has now been published in Geophysical Research Letters.

“This discovery provides quantitative data for the concentration of a CFC for the year 1951,” explains Professor Justus Notholt from the Institute of Environmental Physics at the University of Bremen. “Without the archived measurements from the Jungfraujoch station, this unique look into the past would have been impossible.”