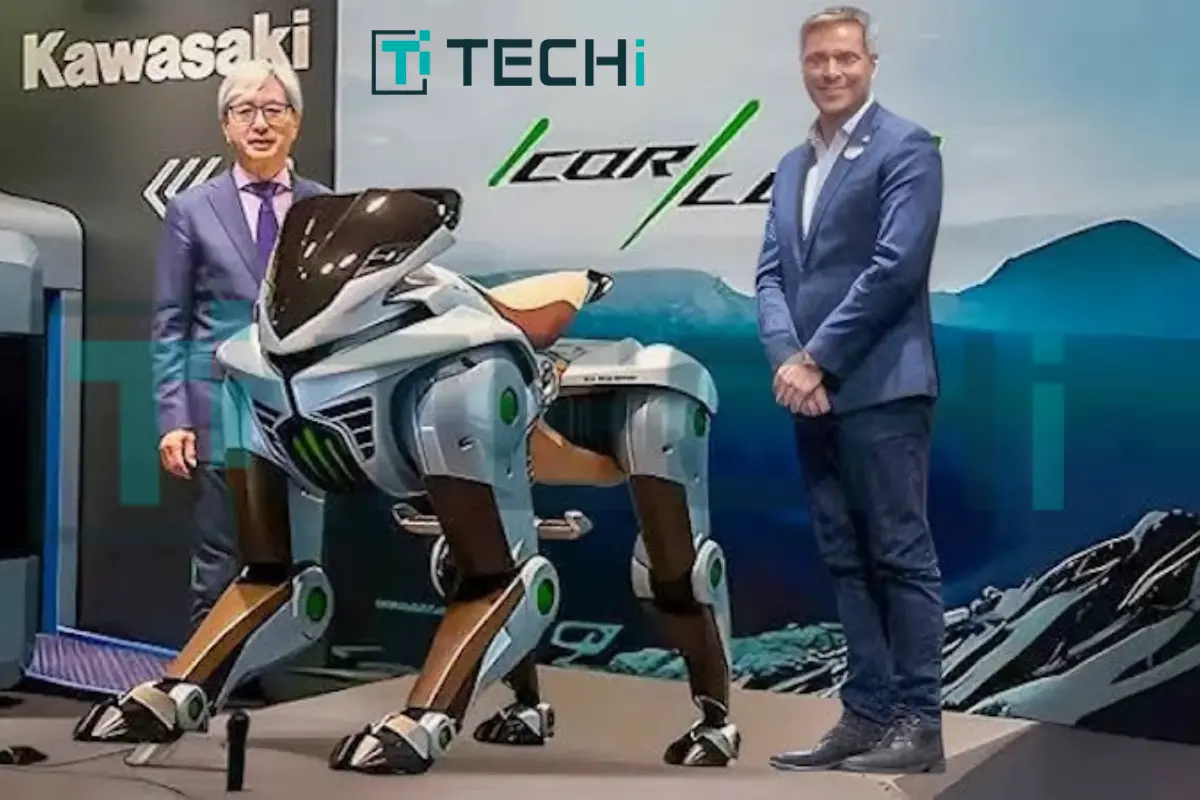

In a showcase of futuristic design and green innovation, Kawasaki Heavy Industries has unveiled the Kawasaki Corleo robot, a hydrogen-powered, four-legged robotic ride, at the Osaka-Kansai Expo 2025. The concept reimagines personal mobility by combining clean energy, robotics, and artificial intelligence in a rideable machine designed to walk, adapt, and navigate rugged terrain.

The Kawasaki Corleo robot walks on four independently powered legs, which gives it stability and agility on uneven terrain where wheels often struggle. Built with carbon fiber and metal, Corleo carries the design DNA of Kawasaki’s motorcycle lineage, with sleek contours, aerodynamic symmetry, and a headlight faceplate that gives it the look of a mechanical creature ready to roam.

At the heart of Corleo lies a 150cc hydrogen engine that generates electricity to drive its legs, positioning it as a cleaner alternative to gasoline-powered off-road vehicles. Instead of conventional throttle and steering controls, the robot reads shifts in the rider’s body weight to move forward, turn, or stop. A built-in heads-up display (HUD) provides live feedback on hydrogen levels, motion stability, and terrain tracking. This pairing of rider biomechanics with artificial intelligence could make the Kawasaki Corleo robot one of the most immersive robotic riding experiences conceived to date.