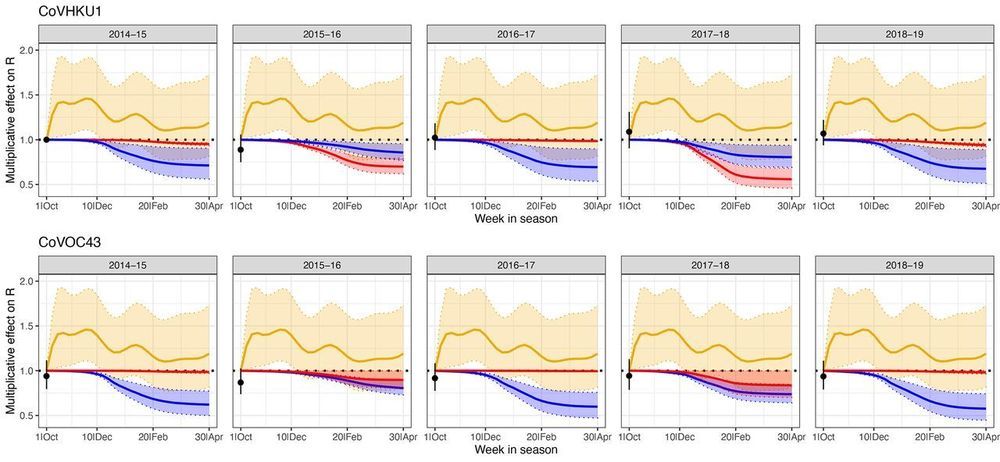

It is urgent to understand the future of severe acute respiratory syndrome–coronavirus 2 (SARS-CoV-2) transmission. We used estimates of seasonality, immunity, and cross-immunity for the betacoronaviruses OC43 and HKU1, derived from time series data from the United States, to inform a model of SARS-CoV-2 transmission. We projected that recurrent wintertime outbreaks of SARS-CoV-2 will probably occur after the initial, most severe pandemic wave. Absent other interventions, a key metric for the success of social distancing is whether critical care capacities are exceeded. To avoid this, prolonged or intermittent social distancing may be necessary into 2022. Additional interventions, including expanded critical care capacity and an effective therapeutic, would improve the success of intermittent distancing and hasten the acquisition of herd immunity. Longitudinal serological studies are urgently needed to determine the extent and duration of immunity to SARS-CoV-2. Even in the event of apparent elimination, SARS-CoV-2 surveillance should be maintained, because a resurgence in contagion could be possible as late as 2024.
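The interplay described above (seasonal transmission, waning immunity, and distancing that switches on and off) can be illustrated with a toy model. The sketch below is a deliberately simplified single-strain, seasonally forced SIRS model and is not the authors' model: it omits cross-immunity with OC43/HKU1, age structure, and any fitted parameters. All parameter values (`r0_max`, `r0_min`, `immunity_days`, `distancing_factor`, and the on/off prevalence thresholds used as a stand-in for critical care capacity) are hypothetical placeholders chosen only to show the mechanics.

```python
import math
from dataclasses import dataclass


@dataclass
class Params:
    # All values are hypothetical illustrations, not estimates from the paper.
    r0_max: float = 2.2            # peak (midwinter) basic reproduction number
    r0_min: float = 1.4            # trough (midsummer) basic reproduction number
    infectious_days: float = 5.0   # mean infectious period
    immunity_days: float = 365.0   # mean duration of immunity before waning
    distancing_factor: float = 0.6 # fractional cut in transmission while distancing
    on_threshold: float = 0.01     # prevalence that switches distancing on
    off_threshold: float = 0.002   # prevalence that switches distancing off


def r0_at(t_days: float, p: Params) -> float:
    """Seasonally forced R0: a cosine peaking at t = 0 (assumed midwinter)."""
    mean = (p.r0_max + p.r0_min) / 2
    amp = (p.r0_max - p.r0_min) / 2
    return mean + amp * math.cos(2 * math.pi * t_days / 365.0)


def simulate(p: Params, days: int = 5 * 365, steps_per_day: int = 10,
             i0: float = 1e-4):
    """Forward-Euler SIRS integration; returns one (t, S, I, R, distancing) tuple per day."""
    dt = 1.0 / steps_per_day
    s, i, r = 1.0 - i0, i0, 0.0
    gamma = 1.0 / p.infectious_days  # recovery rate
    omega = 1.0 / p.immunity_days    # rate at which recovered people lose immunity
    distancing = False
    out = []
    for k in range(days * steps_per_day):
        t = k * dt
        # Intermittent distancing with hysteresis: on above one threshold, off below another.
        if not distancing and i > p.on_threshold:
            distancing = True
        elif distancing and i < p.off_threshold:
            distancing = False
        if k % steps_per_day == 0:
            out.append((t, s, i, r, distancing))
        beta = r0_at(t, p) * gamma
        if distancing:
            beta *= 1.0 - p.distancing_factor
        new_inf = beta * s * i
        ds = -new_inf + omega * r
        di = new_inf - gamma * i
        dr = gamma * i - omega * r
        s, i, r = s + ds * dt, i + di * dt, r + dr * dt
    return out
```

For example, `traj = simulate(Params())` gives a daily trajectory; counting the days where the last field is `True` shows how long distancing is in force, and lowering `immunity_days` makes recurrent wintertime waves more pronounced. The on/off thresholds are separated so that distancing does not flip back and forth every time step.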

The ongoing severe acute respiratory syndrome–coronavirus 2 (SARS-CoV-2) pandemic has caused nearly 500,000 detected cases of coronavirus disease 2019 (COVID-19) illness and claimed over 20,000 lives worldwide as of 26 Mar 2020. Experience from China, Italy, and the United States demonstrates that COVID-19 can overwhelm even the healthcare capacities of well-resourced nations (2–4). With no pharmaceutical treatments available, interventions have focused on contact tracing, quarantine, and social distancing. The required intensity, duration, and urgency of these responses will depend both on how the initial pandemic wave unfolds and on the subsequent transmission dynamics of SARS-CoV-2. During the initial pandemic wave, many countries have adopted social distancing measures, and some, like China, are gradually lifting them after achieving adequate control of transmission.