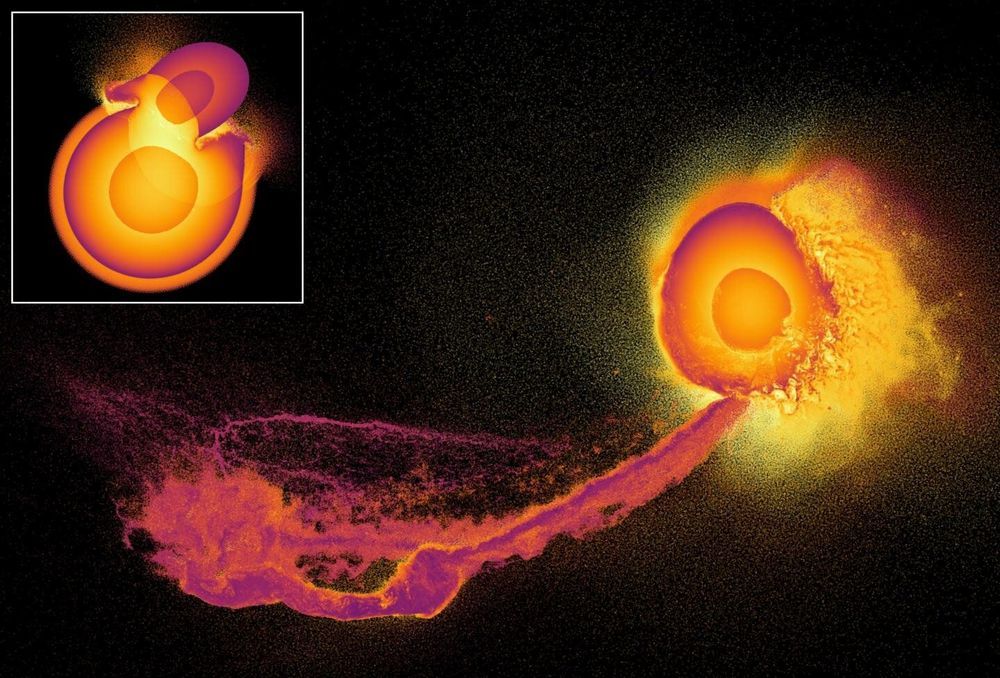

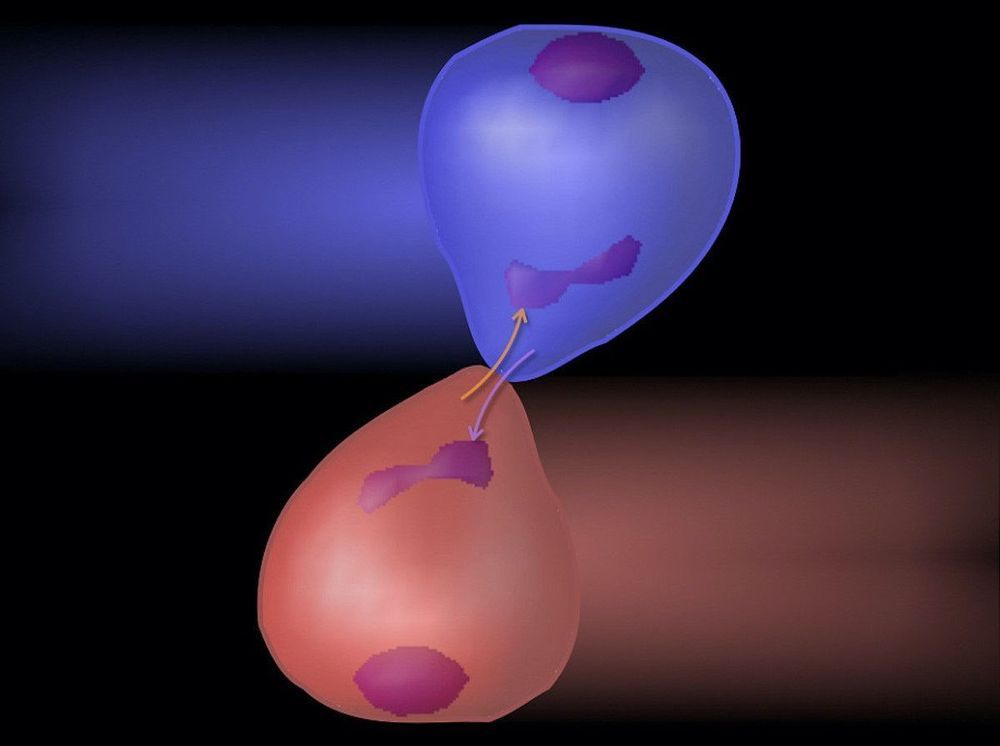

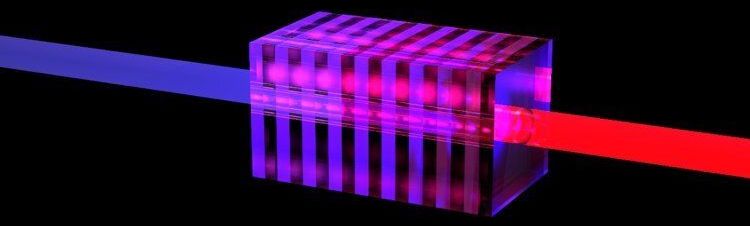

Fifty is a critical number for quantum computers that aim to solve problems classical supercomputers cannot. Demonstrating quantum supremacy requires at least 50 qubits. For quantum computers that work with light, this means having at least 50 photons. What’s more, these photons have to be perfect, or else their quantum capabilities degrade. This demand for perfection is what makes such a computer hard to realize. Hard, but not impossible, as scientists of the University of Twente have shown by proposing modifications of the crystal structure inside existing light sources. Their findings are published in Physical Review A.

Photons are promising candidates for quantum computing, which relies on entanglement, superposition and interference. These are properties of qubits as well. They make it possible to build a computer that operates in an entirely different way from one that calculates with standard bits representing ones and zeroes. For many years, researchers have predicted that quantum computers will be able to solve very complex problems, such as instantly calculating all vibrations in a complex molecule.

The first proof of quantum supremacy has already been delivered, using superconducting qubits and very complicated theoretical problems. A minimum of about 50 quantum building blocks is needed, whether they take the form of photons or qubits. Using photons may have advantages over qubits: they can operate at room temperature and they are more stable. There is one important condition: the photons have to be perfect in order to reach the critical number of 50. In their new paper, UT scientists have now demonstrated that this is feasible.