Aurora 21 will help the US keep pace with the other nations that own the fastest supercomputers. Scientists plan on using it to map the connectome of the human brain.

Quantum internet: the next global network is already being laid

Harun Šiljak, Trinity College Dublin

Google reported a remarkable breakthrough towards the end of 2019. The company claimed to have achieved something called quantum supremacy, using a new type of “quantum” computer to perform a benchmark test in 200 seconds. This was in stark contrast to the 10,000 years that would supposedly have been needed by a state-of-the-art conventional supercomputer to complete the same test.

Despite IBM’s claim that its supercomputer, with a little optimisation, could solve the task in a matter of days, Google’s announcement made it clear that we are entering a new era of incredible computational power.

Will Quantum Computing Supercharge Artificial Intelligence?

Another argument for government to bring AI into its quantum computing program is the fact that the United States is a world leader in the development of computer intelligence. Congress is close to passing the AI in Government Act, which would encourage all federal agencies to identify areas where artificial intelligences could be deployed. And government partners like Google are making some amazing strides in AI, even creating a computer intelligence that can easily pass a Turing test over the phone by seeming like a normal human, no matter who it’s talking with. It would probably be relatively easy for Google to merge some of its AI development with its quantum efforts.

The other aspect that makes merging quantum computing with AI so interesting is that the AI could probably help to reduce some of the so-called noise of the quantum results. I’ve always said that the way forward for quantum computing right now is by pairing a quantum machine with a traditional supercomputer. The quantum computer could return results like it always does, with the correct outcome muddled in with a lot of wrong answers, and then humans would program a traditional supercomputer to help eliminate the erroneous results. The problem with that approach is that it’s fairly labor intensive, and you still have the bottleneck of having to run results through a normal computing infrastructure. It would be a lot faster than giving the entire problem to the supercomputer because you are only fact-checking a limited number of results pared down by the quantum machine, but it would still have to work on each of them one at a time.

But imagine if we could simply train an AI to look at the data coming from the quantum machine, figure out what makes sense and what is probably wrong without human intervention. If that AI were driven by a quantum computer too, the results could be returned without any hardware-based delays. And if we also employed machine learning, then the AI could get better over time. The more problems being fed to it, the more accurate it would get.
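The pairing described above, where a quantum machine proposes candidates and a classical machine checks them one at a time, can be sketched roughly as follows. This is only an illustration under stated assumptions: the article names no specific problem, so factor checking stands in for it, the sample list is made up, and the names `verify_factor` and `filter_candidates` are hypothetical.

```python
from collections import Counter


def verify_factor(n: int, candidate: int) -> bool:
    """Classical check: is `candidate` a non-trivial factor of n?"""
    return 1 < candidate < n and n % candidate == 0


def filter_candidates(n: int, samples: list[int]) -> list[int]:
    """Keep the distinct candidates that pass the classical check,
    ordered from most to least frequently sampled."""
    counts = Counter(samples)
    return [c for c, _ in counts.most_common() if verify_factor(n, c)]


# Stand-in for noisy output from a quantum run (made-up values):
# a few correct answers mixed in with wrong ones.
samples = [3, 4, 5, 3, 7, 5, 8, 3, 1, 15]
survivors = filter_candidates(15, samples)
print(survivors)
```

Each candidate is still verified individually, which is the bottleneck the passage points out; the saving comes from checking a short list rather than searching the whole problem space.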

How Graphene Could Help Us Build Bigger and Better Quantum Computers

Quantum computers can solve problems in seconds that would take “ordinary” computers millennia, but their sensitivity to interference is majorly holding them back. Now, researchers claim they’ve created a component that drastically cuts down on error-inducing noise.

Quantum computers use quantum bits, or qubits, which can represent a one, a zero, or any combination of the two simultaneously. This is thanks to the quantum phenomenon known as superposition.

Another property, quantum entanglement, allows for qubits to be linked together, and changing the state of one qubit will also change the state of its entangled partner.

Thanks to these two properties, quantum computers of a few dozen qubits can outperform massive supercomputers in certain very specific tasks. But there are several issues holding quantum computers back from solving the world’s toughest problems, one of which is how prone qubits are to error.
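As a rough illustration of superposition and entanglement, a qubit state can be written as a list of amplitudes, with the probability of each measurement outcome given by the squared magnitude of its amplitude. The sketch below is a plain-Python toy, not how any real quantum hardware or library works, and the names `probabilities`, `plus` and `bell` are chosen here for illustration.

```python
import math


def probabilities(amplitudes):
    """Born rule: each outcome's probability is the squared
    magnitude of its amplitude."""
    return [abs(a) ** 2 for a in amplitudes]


# One qubit in an equal superposition of 0 and 1.
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]

# Two entangled qubits (a Bell state).
# Amplitudes are listed for the outcomes 00, 01, 10, 11.
bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]

print(probabilities(plus))  # roughly half and half
print(probabilities(bell))  # only 00 and 11 have non-zero probability
```

In the entangled state the outcomes 01 and 10 never occur, so measuring one qubit tells you what the other will read.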

New detector breakthrough pushes boundaries of quantum computing

Million-core neuromorphic supercomputer could simulate an entire mouse brain

Circa 2018

After 12 years of work, researchers at the University of Manchester in England have completed construction of a “SpiNNaker” (Spiking Neural Network Architecture) supercomputer. It can simulate the internal workings of up to a billion neurons through a whopping one million processing units.

The human brain contains approximately 100 billion neurons, exchanging signals through hundreds of trillions of synapses. While these numbers are imposing, a digital brain simulation needs far more than raw processing power: rather, what’s needed is a radical rethinking of the standard computer architecture on which most computers are built.

“Neurons in the brain typically have several thousand inputs; some up to quarter of a million,” Prof. Stephen Furber, who conceived and led the SpiNNaker project, told us. “So the issue is communication, not computation. High-performance computers are good at sending large chunks of data from one place to another very fast, but what neural modeling requires is sending very small chunks of data (representing a single spike) from one place to many others, which is quite a different communication model.”
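The communication pattern Furber describes, a very small message fanned out from one source to many destinations, can be sketched as a toy multicast router. This is an assumption-laden illustration, not SpiNNaker's actual routing hardware or software; the class `SpikeRouter` and its methods are hypothetical.

```python
from collections import defaultdict


class SpikeRouter:
    """Toy multicast router: one small spike packet fans out to many targets."""

    def __init__(self):
        self.routes = defaultdict(set)   # source neuron id -> target neuron ids
        self.inbox = defaultdict(list)   # target neuron id -> source ids received

    def connect(self, source, targets):
        """Register the neurons that listen to `source`."""
        self.routes[source].update(targets)

    def fire(self, source):
        """Deliver a spike that carries only the source id.
        Returns how many targets received it."""
        targets = self.routes.get(source, ())
        for target in targets:
            self.inbox[target].append(source)
        return len(targets)


router = SpikeRouter()
router.connect(0, range(1, 1001))   # neuron 0 feeds 1,000 others
delivered = router.fire(0)
print(delivered)
```

The payload here is a single integer, while the cost lies in the number of deliveries, which is why the quote frames the problem as communication rather than computation.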

Nvidia will power world’s fastest AI supercomputer, to be located in Europe

Nvidia is going to be powering the world’s fastest AI supercomputer, a new system dubbed “Leonardo” that’s being built by the Italian multi-university consortium CINECA, a global supercomputing leader. The Leonardo system will offer as much as 10 exaflops of FP16 AI performance, and be made up of more than 14,000 Nvidia Ampere-based GPUs once completed.

Leonardo will be one of four new supercomputers supported by a cross-European effort to advance high-performance computing capabilities in the region, which will eventually offer advanced AI capabilities for processing applications across both science and industry. Nvidia will also be supplying its Mellanox HDR InfiniBand networks to the project in order to enable performance across the clusters with low-latency broadband connections.

The other computers in the cluster include MeluXina in Luxembourg and Vega in Slovenia, as well as a new supercooling unit coming online in the Czech Republic. The pan-European consortium also plans four more supercomputers for Bulgaria, Finland, Portugal and Spain, though those will follow later, and specifics around their performance and locations aren’t yet available.

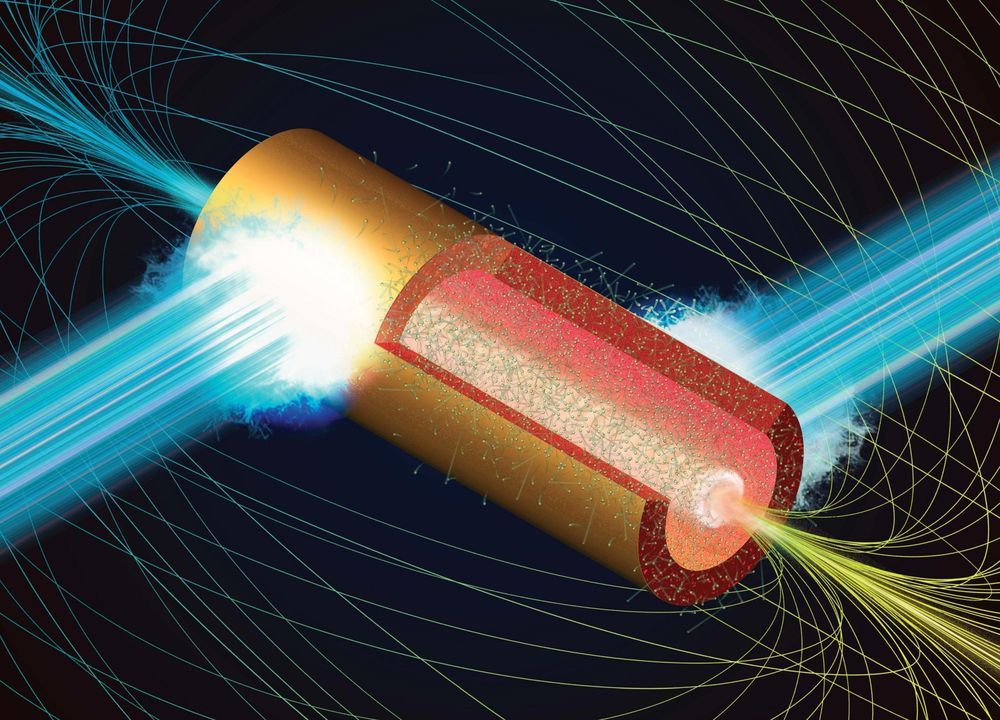

Generating Megatesla Magnetic Fields on Earth Using Intense-Laser-Driven Microtube Implosions

A team of researchers led by Osaka University discovers “microtube implosion,” a novel mechanism that demonstrates the generation of megatesla-order magnetic fields.

Magnetic fields are used in various areas of modern physics and engineering, with practical applications ranging from doorbells to maglev trains. Since Nikola Tesla’s discoveries in the 19th century, researchers have strived to realize strong magnetic fields in laboratories for fundamental studies and diverse applications, but the magnetic fields of familiar examples are relatively weak. Geomagnetism is 0.3−0.5 gauss (G), and magnetic resonance imaging (MRI) used in hospitals is about 1 tesla (T = 10⁴ G). By contrast, future magnetic fusion and maglev trains will require magnetic fields on the kilotesla (kT = 10⁷ G) order. To date, the highest magnetic fields experimentally observed are on the kT order.

Recently, scientists at Osaka University discovered a novel mechanism called a “microtube implosion,” and demonstrated the generation of megatesla (MT = 10¹⁰ G) order magnetic fields via particle simulations using a supercomputer. Astonishingly, this is three orders of magnitude higher than what has ever been achieved in a laboratory. Such high magnetic fields are expected only in celestial bodies like neutron stars and black holes.
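The unit relationships quoted above can be checked with a few lines of arithmetic. The sketch below takes 1 tesla as 10,000 gauss and uses round values of 1 T, 1 kT and 1 MT for the MRI, kilotesla and megatesla scales; the function and variable names are chosen here for illustration.

```python
import math

GAUSS_PER_TESLA = 1e4  # 1 tesla = 10,000 gauss


def tesla_to_gauss(tesla: float) -> float:
    """Convert a field strength from tesla to gauss."""
    return tesla * GAUSS_PER_TESLA


# Round reference values in tesla for each scale mentioned in the text.
fields_tesla = {"MRI": 1.0, "kilotesla": 1e3, "megatesla": 1e6}

for name, tesla in fields_tesla.items():
    print(f"{name}: {tesla_to_gauss(tesla):.0e} G")

# Gap between the megatesla and kilotesla scales, in orders of magnitude.
orders = math.log10(fields_tesla["megatesla"] / fields_tesla["kilotesla"])
print(orders)
```

The ratio between the megatesla and kilotesla scales is a factor of a thousand, which matches the "three orders of magnitude" stated in the article.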