From Cerebras Systems’ AI supercomputer to OpenAI’s language model GPT-3, these are the companies pushing machine learning to the edge.

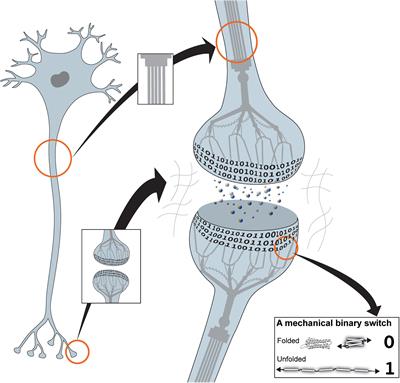

One of the major unsolved mysteries of biological science is where, and in what form, information is stored in the brain. I propose that memory is stored in the brain in a mechanically encoded binary format written into the conformations of proteins found in the cell-extracellular matrix (ECM) adhesions that organise each and every synapse. The MeshCODE framework outlined here represents a unifying theory of data storage in animals, providing read-write storage of both dynamic and persistent information in a binary format. Mechanosensitive proteins that contain force-dependent switches can store information persistently, and this information can be written or updated using small changes in mechanical force. These mechanosensitive proteins, such as talin, scaffold each synapse, creating a meshwork of switches that together form a code, the so-called MeshCODE. Large signalling complexes assemble on these scaffolds as a function of the switch patterns, and these complexes would both stabilise the patterns and coordinate synaptic regulators to dynamically tune synaptic activity. Synaptic transmission and action potential spike trains would operate the cytoskeletal machinery to write and update the synaptic MeshCODEs, thereby propagating this coding throughout the organism. Based on established biophysical principles, such a mechanical basis for memory would provide a physical location for data storage in the brain: the binary patterns encoded in the information-storing mechanosensitive molecules of the synaptic scaffolds, together with the complexes that form on them, would represent the physical location of engrams. Furthermore, converting sensory and temporal inputs into a binary format and storing them in that format would constitute an addressable read-write memory system, supporting the view of the mind as an organic supercomputer.

I would like to propose here a unifying theory of rewritable data storage in animals. This theory is based on the realisation that mechanosensitive proteins, which contain force-dependent binary switches, can store information persistently in a binary format, with the information stored in each molecule able to be written and/or updated via small changes in mechanical force. The protein talin contains 13 of these switches (Yao et al., 2016; Goult et al., 2018; Wang et al., 2019), and I argue here that talin is the memory molecule of animals. These mechanosensitive proteins scaffold each and every synapse (Kilinc, 2018; Lilja and Ivaska, 2018; Dourlen et al., 2019) and have mainly been considered structural. However, these synaptic scaffolds also represent a meshwork of binary switches that I propose form a code, the so-called MeshCODE.
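To make the storage capacity implied by "13 switches" concrete, the sketch below treats each talin switch as an independent two-state bit. That independence, and the labelling 0 = folded / 1 = unfolded, are assumptions for illustration only; the text does not specify either. Under those assumptions a single talin molecule can adopt 2^13 = 8192 distinct switch patterns, i.e. it holds 13 bits, and any pattern can be read as a binary number.

```python
# Hypothetical sketch: each force-dependent switch in one talin molecule is
# modelled as an independent two-state bit (0 = folded, 1 = unfolded).
# The switch count comes from the text; independence and the 0/1 labels
# are assumptions made for illustration.

N_SWITCHES = 13  # number of binary switches in talin, per the text


def state_count(n_switches: int) -> int:
    """Number of distinct folded/unfolded patterns for n independent switches."""
    return 2 ** n_switches


def pattern_to_int(pattern: tuple[int, ...]) -> int:
    """Read a switch pattern, most significant switch first, as a binary number."""
    value = 0
    for bit in pattern:
        value = (value << 1) | bit
    return value


print(state_count(N_SWITCHES))  # 8192 patterns, i.e. 13 bits per molecule

# A hypothetical pattern with switches 1, 4 and 13 unfolded.
example = (1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1)
print(pattern_to_int(example))  # 4609
```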

Canadian startup Xanadu says its quantum computer is cloud-accessible, Python-programmable, and ready to scale.

Quantum computers based on photons may have some advantages over electron-based machines, including the ability to operate at room temperature rather than at temperatures colder than deep space. Now scientists at quantum computing startup Xanadu say they can add one more advantage to the photon side of the ledger: their photonic quantum computer, they say, could scale up to rival or even beat the fastest classical supercomputers, at least at some tasks.

Whereas conventional computers switch transistors either on or off to symbolize data as ones and zeroes, quantum computers use quantum bits, or “qubits,” that, because of the bizarre nature of quantum physics, can exist in a state known as superposition, in which they can act as both 1 and 0. This essentially lets each qubit perform multiple calculations at once.
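Superposition can be sketched with the textbook single-qubit model. This is a generic illustration, not a description of how Xanadu's photonic hardware represents information. A qubit is stored as two complex amplitudes for the states |0⟩ and |1⟩. The standard Hadamard gate turns the definite state |0⟩ into an equal superposition, and the Born rule (probability = |amplitude|²) gives the odds of reading 0 or 1. A measurement still returns a single bit; the superposition shows up in those probabilities.

```python
import math

# A single qubit as a pair of complex amplitudes (alpha, beta) for |0> and |1>.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Born rule: the probability of measuring 0 or 1 is |amplitude|^2."""
    return tuple(abs(amp) ** 2 for amp in state)


plus = hadamard(ZERO)
print(probabilities(ZERO))  # (1.0, 0.0): a definite 0
print(probabilities(plus))  # approximately (0.5, 0.5): an equal superposition
```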

The more qubits are entangled (quantum-mechanically connected) with one another, the more calculations they can perform simultaneously. A quantum computer with enough qubits could in theory achieve a “quantum advantage,” enabling it to grapple with problems no classical computer could practically solve. For instance, a quantum computer with 300 mutually entangled qubits could theoretically perform more calculations in an instant than there are atoms in the visible universe.
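The 300-qubit comparison comes from the fact that the state of n entangled qubits is described by 2^n complex amplitudes. The sketch below checks the arithmetic against 10^80, a commonly cited order-of-magnitude estimate of the number of atoms in the observable universe. That figure is an assumed rough value, not a precise count. The comparison measures the size of the state space, not the number of answers a measurement can return.

```python
import math

# Commonly cited order-of-magnitude estimate; an assumption, not a precise count.
ATOMS_IN_UNIVERSE = 10 ** 80


def amplitude_count(n_qubits: int) -> int:
    """An n-qubit register is described by 2**n complex amplitudes."""
    return 2 ** n_qubits


n = 300
count = amplitude_count(n)
print(f"2^{n} ~ 10^{math.log10(count):.1f}")  # 2^300 ~ 10^90.3
print(count > ATOMS_IN_UNIVERSE)  # True

# Smallest register whose state space already exceeds the atom estimate.
smallest = next(k for k in range(1, 1000) if amplitude_count(k) > ATOMS_IN_UNIVERSE)
print(smallest)  # 266
```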

Pharma giants and computing titans increasingly partnering on quantum computing.

Theoretically, quantum computers can prove more powerful than any supercomputer. And recent moves from computer giants such as Google and pharmaceutical titans such as Roche now suggest drug discovery might prove to be quantum computing’s first killer app.

Energy researchers have been reaching for the stars for decades in their attempt to artificially recreate stellar fusion in a stable reactor. If successful, such a reactor would revolutionize the world’s energy supply overnight, providing low-radioactivity, zero-carbon, high-yield power, but to date such reactors have proved extraordinarily challenging to stabilize. Now, scientists are leveraging supercomputing power from two national labs to help fine-tune elements of fusion reactor designs for test runs.

In experimental fusion reactors, magnetic, donut-shaped devices called “tokamaks” are used to keep the plasma contained: in a sort of high-stakes game of Operation, if the plasma touches the sides of the reactor, the reaction falters and the reactor itself could be severely damaged. Meanwhile, a divertor funnels excess heat out of the vacuum vessel.

In France, scientists are building the world’s largest fusion reactor: a 500-megawatt experiment called ITER that is scheduled to begin trial operation in 2025. The researchers in this study wanted to estimate ITER’s heat-load width: that is, the width of the strip along the divertor that extraordinarily hot particles repeatedly bombard.
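Heat-load width matters because the same power spread over a narrower strip means a proportionally higher heat flux on the divertor. The sketch below is a deliberately crude estimate of that effect. It treats the heated region as a toroidal strip of circumference 2πR and width λ, so q = P / (2πRλ). It ignores magnetic flux expansion, target tilt and the split of power between divertor legs, all of which spread the load in a real machine. The power and radius values are hypothetical, ITER-scale placeholders, not figures from the study.

```python
import math


def strip_heat_flux(power_w: float, major_radius_m: float, width_m: float) -> float:
    """Crude average heat flux (W/m^2) if `power_w` lands on a toroidal strip
    of circumference 2*pi*R and width `width_m`. Ignores flux expansion,
    target tilt and the power split between divertor legs."""
    area_m2 = 2 * math.pi * major_radius_m * width_m
    return power_w / area_m2


# Hypothetical placeholder values, chosen only to show the scaling.
POWER_W = 100e6       # assumed power reaching the divertor region
MAJOR_RADIUS_M = 6.2  # assumed torus major radius

for width_mm in (1.0, 2.0, 5.0):
    q = strip_heat_flux(POWER_W, MAJOR_RADIUS_M, width_mm / 1000)
    print(f"width {width_mm:.0f} mm -> ~{q / 1e6:,.0f} MW/m^2 (crude estimate)")
```

The absolute numbers are not meaningful here. The point is the inverse scaling: halving the heat-load width doubles the heat flux the divertor must withstand.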

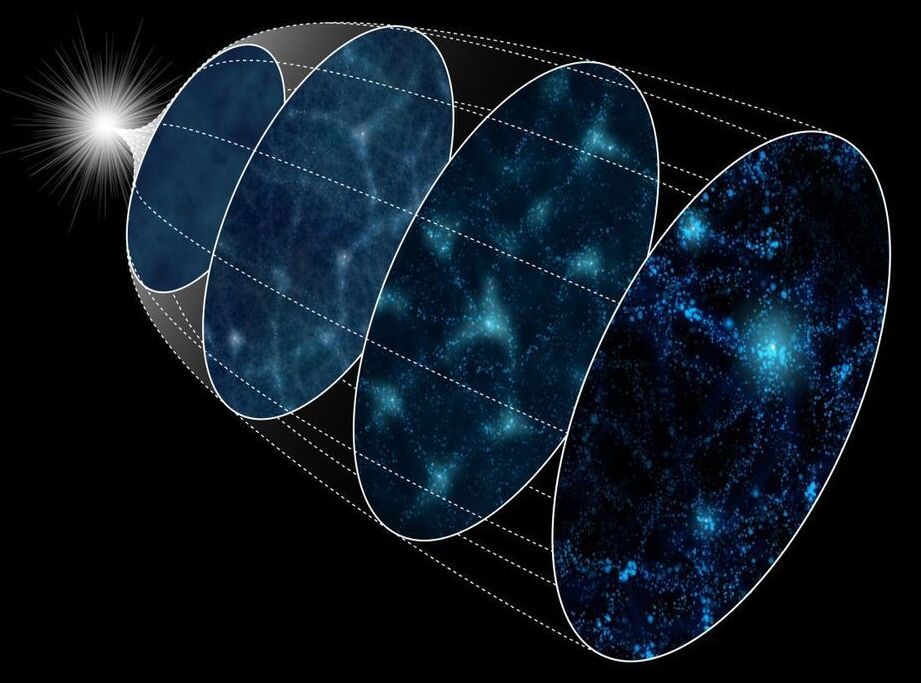

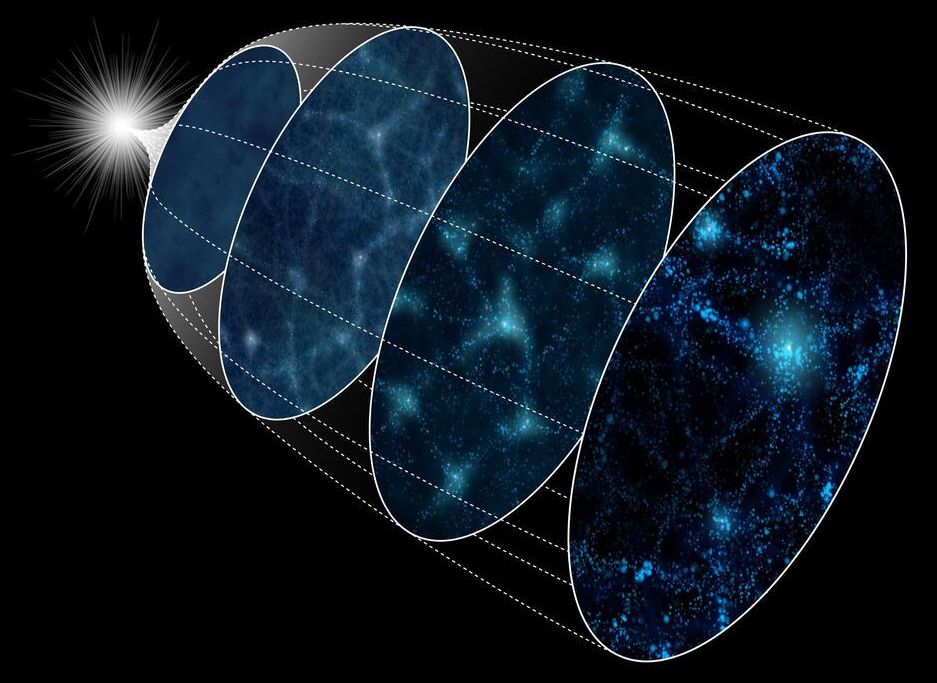

In the absence of a TARDIS or Doc Brown’s DeLorean, how can you go back in time to see what supposedly happened when the universe exploded into being?

Astronomers have tested a method for reconstructing the state of the early universe by applying it to 4000 simulated universes using the ATERUI II supercomputer at the National Astronomical Observatory of Japan (NAOJ). They found that, together with new observations, the method can set better constraints on inflation, one of the most enigmatic events in the history of the universe. The method can shorten the observation time required to distinguish between various inflation theories.