Artificial intelligence (AI) has reshaped many sectors, most notably through autonomous agents that can operate and make decisions independently. Powered by large language models (LLMs), these agents have greatly broadened the range of tasks that can be automated, from simple data processing to complex problem-solving. However, as their capabilities expand, so do the challenges of deploying and integrating them.

In this evolving landscape, a major hurdle has been managing LLM-based agents efficiently. The main issues are allocating computational resources, maintaining interaction context, and integrating agents with different capabilities and functions. Traditional approaches often create bottlenecks and leave resources underused, which limits the efficiency and effectiveness these intelligent systems could otherwise achieve.

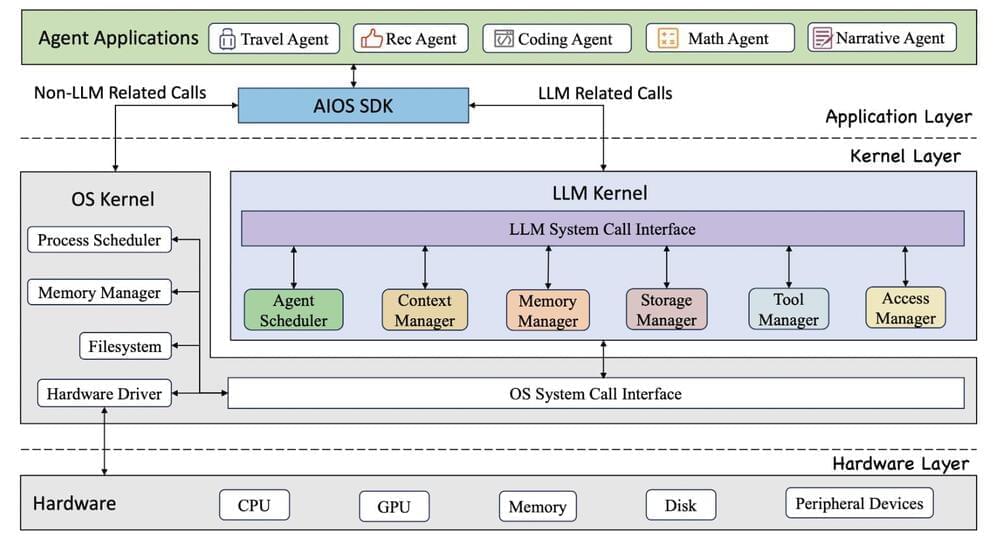

A research team from Rutgers University has developed AIOS (Agent-Integrated Operating System), a pioneering LLM agent operating system designed to streamline the deployment and operation of LLM-based agents. The system is engineered to improve resource allocation, run multiple agents concurrently, and maintain coherent context throughout agent interactions, with the aim of improving the overall performance and efficiency of agent operations.