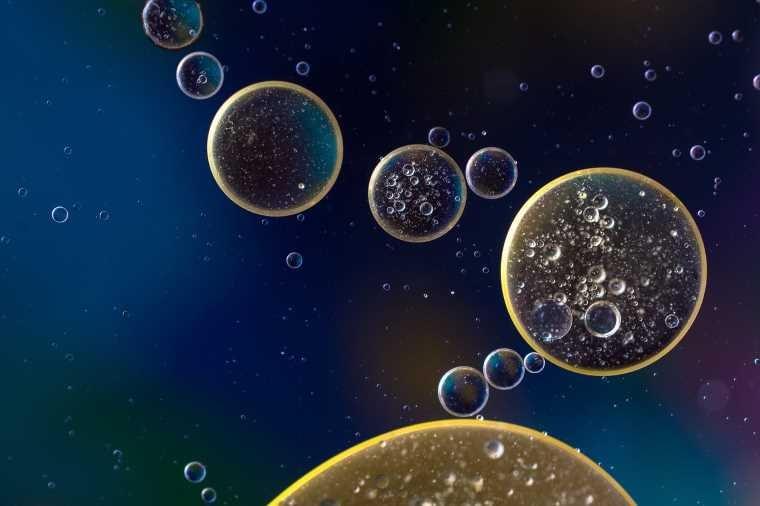

Harvard researchers say they have developed a programmable metafluid, which they call an ‘intelligent liquid’, with tunable springiness, adjustable optical properties, variable viscosity, and even the seemingly magical ability to switch between behaving as a Newtonian and a non-Newtonian fluid.

The team’s exact formula is still a secret as they explore potential commercial applications. However, the researchers believe their intelligent liquid could be used in anything from programmable robots to intelligent shock absorbers or even optical devices that can shift between transparent and opaque states.

“We are just scratching the surface of what is possible with this new class of fluid,” said Adel Djellouli, a Research Associate in Materials Science and Mechanical Engineering at Harvard’s John A. Paulson School of Engineering and Applied Sciences (SEAS) and the first author of the paper. “With this one platform, you could do so many different things in so many different fields.”

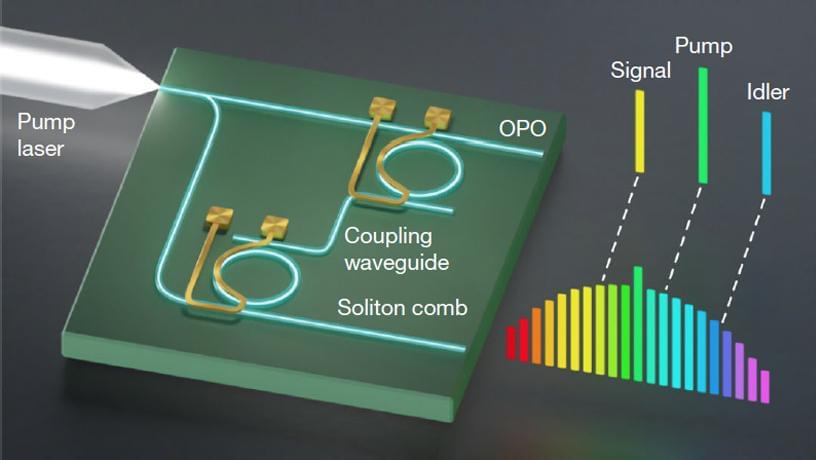

In a new Nature study, Columbia Engineering researchers have built a photonic chip that can produce high-quality, ultra-low-noise microwave signals using only a single laser. The compact device, a chip small enough to fit on the point of a sharp pencil, achieves the lowest microwave noise ever observed in an integrated photonics platform.

The achievement provides a promising pathway towards small-footprint ultra-low-noise microwave generation for applications such as high-speed communication, atomic clocks, and autonomous vehicles.

The challenge: electronic devices for global navigation, wireless communications, radar, and precision timing need stable microwave sources to serve as clocks and information carriers. A key to improving the performance of these devices is reducing the noise present on the microwave signal, that is, the random fluctuations in its phase.
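To make the idea of phase noise more concrete, here is a minimal Python sketch. It is an illustration only and is not taken from the Columbia study.

The sketch rests on these assumptions:

- It models a microwave tone's phase fluctuations as white Gaussian noise. Real oscillator noise varies with offset frequency.
- It converts the RMS phase deviation into RMS timing jitter with t = φ / (2πf).
- The helper names, the 10 GHz carrier and the noise levels are hypothetical values chosen for illustration.

```python
import math
import random


def rms_phase_jitter(phase_samples_rad):
    """Return the RMS deviation (radians) of phase samples about their mean."""
    n = len(phase_samples_rad)
    mean = sum(phase_samples_rad) / n
    return math.sqrt(sum((p - mean) ** 2 for p in phase_samples_rad) / n)


def timing_jitter_seconds(phase_rms_rad, carrier_hz):
    """Convert RMS phase deviation to RMS timing jitter: t = phi / (2*pi*f)."""
    return phase_rms_rad / (2 * math.pi * carrier_hz)


def simulate_phase_noise(n_samples, sigma_rad, seed=0):
    """Hypothetical model: white Gaussian phase noise with std dev sigma_rad."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma_rad) for _ in range(n_samples)]


if __name__ == "__main__":
    carrier = 10e9  # assumed 10 GHz microwave carrier (illustrative only)
    for sigma in (1e-2, 1e-3):  # hypothetical noisier vs. quieter source
        phases = simulate_phase_noise(100_000, sigma)
        phi_rms = rms_phase_jitter(phases)
        jitter = timing_jitter_seconds(phi_rms, carrier)
        print(f"sigma={sigma:g} rad -> phase rms={phi_rms:.2e} rad, "
              f"jitter={jitter:.2e} s")
```

In this simple model, timing jitter scales linearly with phase deviation at a fixed carrier frequency. A source with ten times less phase noise therefore shows roughly ten times less timing jitter. This is one way to see why quieter microwave sources matter for clocks and navigation.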

Thermonator is the first-ever flamethrower-wielding robot dog. This quadruped is coupled with the ARC Flamethrower… Learn more: https://tinyurl.com/Thermonator

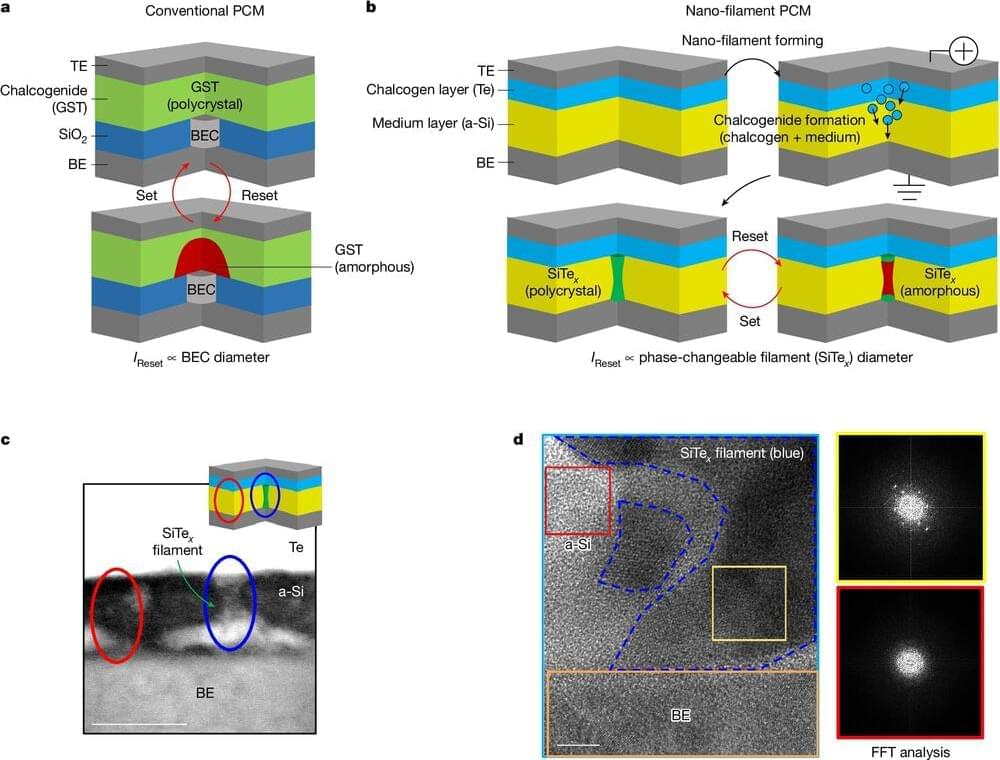

Globally, computation is booming at an unprecedented rate, fueled by the rise of artificial intelligence. As a result, the staggering energy demand of the world’s computing infrastructure has become a major concern, and developing far more energy-efficient computing devices is a leading challenge for the scientific community.

The DESI survey has announced the most precise measurements yet of our expanding universe. They are based on the baryon acoustic oscillation (BAO) signal in 6.1 million galaxies and quasars from the survey's first year of data, and they trace dark energy through cosmic time.

With 5,000 tiny robots in a mountaintop telescope, researchers can look 11 billion years into the past. The light from far-flung objects in space is just now reaching the Dark Energy Spectroscopic Instrument (DESI), enabling us to map our cosmos as it was in its youth and trace its growth to what we see today. Understanding how our universe has evolved is tied to how it ends, and to one of the biggest mysteries in physics: dark energy, the unknown ingredient causing our universe to expand faster and faster.

To study dark energy’s effects over the past 11 billion years, DESI has created the largest 3D map of our cosmos ever constructed, with the most precise measurements to date. This is the first time scientists have measured the expansion history of the young universe with a precision better than 1%, giving us our best view yet of how the universe evolved.