Over the past decade, academia and industry alike have recognized organic luminescent materials as promising components for lightweight, flexible and versatile optoelectronic devices such as OLED displays. However, finding sufficiently efficient materials remains a challenge.

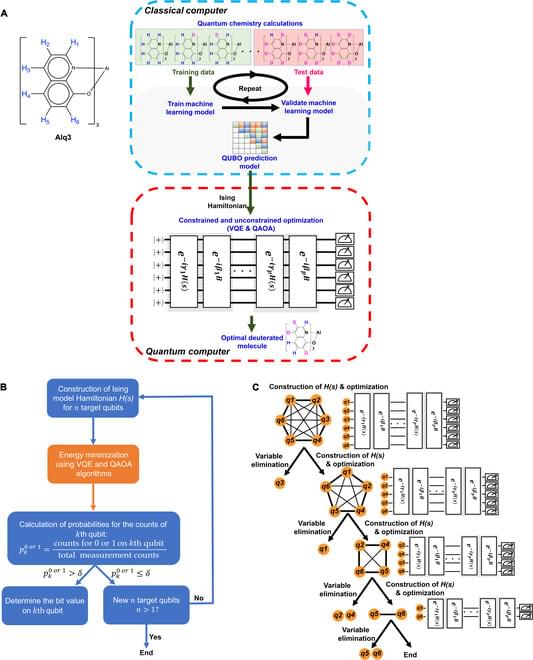

To address this challenge, a joint research team has developed a novel approach combining a machine learning model with quantum-classical computational molecular design to accelerate the discovery of efficient OLED emitters. This research was published May 17 in Intelligent Computing.

The optimal OLED emitter that the authors identified using this “hybrid quantum-classical procedure” is a deuterated derivative of Alq3 that is both extremely efficient at emitting light and synthesizable.