Cerebras held an AI Day, and in spite of the concurrently running GTC, there wasn’t an empty seat in the house.

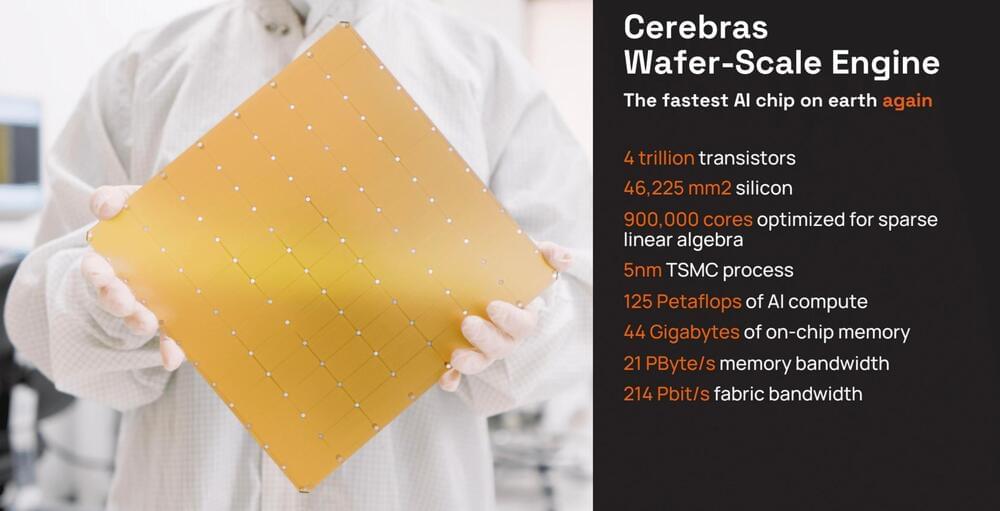

As we have noted, Cerebras Systems is one of the very few startups that is actually getting some serious traction in training AI, at least from a handful of clients. The company just introduced the third generation of its Wafer-Scale Engine, a monster of a chip that can outperform racks of GPUs, as well as a partnership with Qualcomm to provide custom training and Go-To-Market collaboration with the Edge AI leader. Here are a few takeaways from the AI Day event. There are lots of images from Cerebras, but they tell the story quite well! We will cover the challenges this bold startup still faces in the Conclusions at the end.

The new WSE-3, the third generation of wafer-scale engines, and the CS-3 system in which it runs are an engineering marvel. While Cerebras likes to compare the WSE-3 to a single GPU chip, that’s really not the point; the point is to simplify scaling. Why cut up a wafer of chips, package each with HBM, put the package on a board, connect to CPUs with a fabric, then tie them all back together with networking chips and cables? That’s a lot of complexity, and it leads to a lot of programming to distribute the workload via various forms of parallelism and then tie everything back together into a supercomputer. Cerebras thinks it has a better idea.