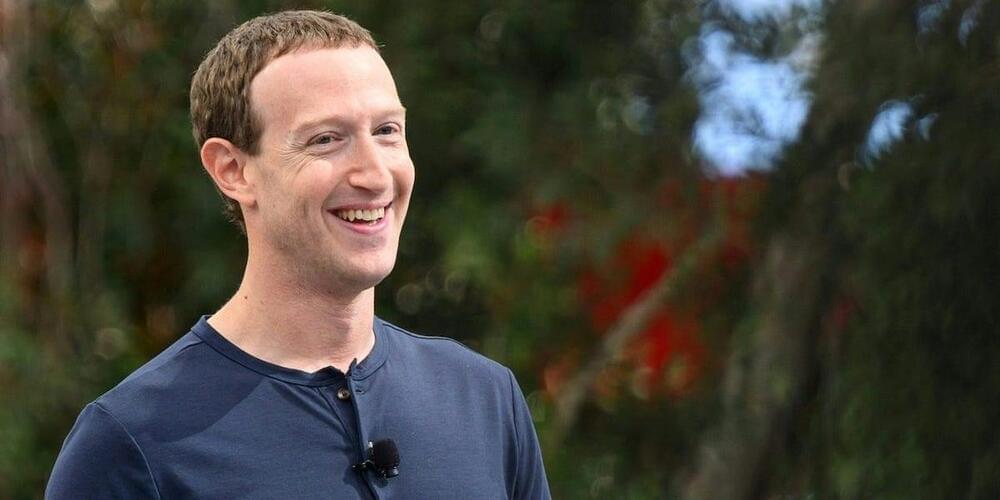

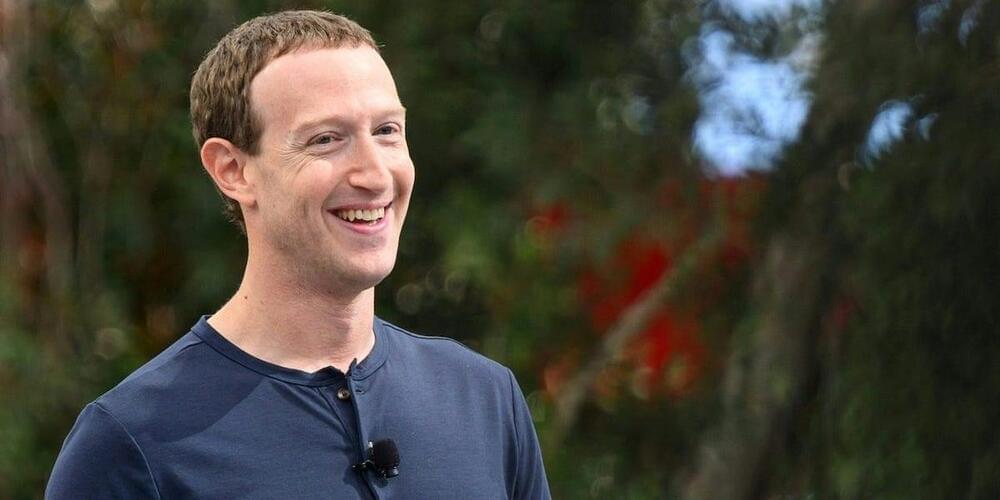

Meta CEO Mark Zuckerberg thinks ‘feedback loops,’ the process of AI learning from its own outputs, are more important than new data for developing AI.

Marshall Brain’s 2003 book Manna was well ahead of its time in foreseeing that, one way or another, we will eventually have to confront and address technological unemployment. In addition, Marshall is a passionate brainiac with a jovial personality, an impressive background and a unique perspective. And so I was very happy to meet him in person for an exclusive interview. [Special thanks to David Wood, without whose introduction this interview may not have happened!]

During our 82-minute conversation with Marshall Brain, we cover a variety of interesting topics, such as: his books The Second Intelligent Species and Manna; AI as the end game for humanity; using cockroaches as a metaphor; logic and ethics; simulating the human brain; the importance of language and visual processing for the creation of AI; marrying off Siri to Watson; technological unemployment, social welfare and perpetual vacation; capitalism, socialism and the need for systemic change …

As always you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support you can write a review on iTunes, make a direct donation or become a patron on Patreon.

MIT scientists have tackled key obstacles to bringing 2D magnetic materials into practical use, setting the stage for the next generation of energy-efficient computers.

Globally, computation is booming at an unprecedented rate, fueled by the boons of artificial intelligence. With this, the staggering energy demand of the world’s computing infrastructure has become a major concern, and the development of computing devices that are far more energy-efficient is a leading challenge for the scientific community.

Using magnetic materials to build computing devices such as memories and processors has emerged as a promising avenue for creating “beyond-CMOS” computers, which would use far less energy than traditional computers. Magnetization switching in magnets can be used in computation the same way that a transistor switches between open and closed to represent the 0s and 1s of binary code.

New research led by Charité – Universitätsmedizin Berlin and published in Science reveals that the wiring of nerve cells in the human neocortex differs significantly from that in mice. The study discovered that human neurons predominantly transmit signals in a unidirectional manner, whereas mouse neurons typically send signals in looping patterns. This structural difference may enhance the human brain’s ability to process information more efficiently and effectively. The findings hold potential implications for advancing artificial neural network technologies.

The neocortex, a critical structure for human intelligence, is less than five millimeters thick. There, in the outermost layer of the brain, 20 billion neurons process countless sensory perceptions, plan actions, and form the basis of our consciousness. How do these neurons process all this complex information? That largely depends on how they are “wired” to each other.

A research group from the Ulsan National Institute of Science and Technology (UNIST), led by Professor Jonwoo Jeong of the Department of Physics, has recently discovered a groundbreaking principle of motion at the microscopic scale. Their findings reveal that objects can achieve directed movement simply by periodically changing their sizes within a liquid crystal medium. This innovative discovery holds significant potential for numerous fields of research and could lead to the development of miniature robots in the future.

In their research, the team observed that air bubbles within the liquid crystal could move in one direction when their sizes changed periodically, in contrast to the symmetrical growth or contraction typically seen in air bubbles in other media. By introducing air bubbles, comparable in size to a human hair, into the liquid crystal and manipulating the pressure, the researchers were able to demonstrate this extraordinary phenomenon.

Scientists have demonstrated that facial recognition technology can predict a person’s political orientation with a surprising level of accuracy.

Researchers have demonstrated that facial recognition technology can predict political orientation from neutral expressions with notable accuracy, posing significant privacy concerns. This finding suggests our faces may reveal more personal information than previously understood.

Humanoid robots are robots that resemble and act like humans. Typically engineered to imitate authentic human expressions, interactions and movements, these robots are often outfitted with an array of cameras, sensors and, more recently, AI and machine learning technologies.

While more humanoid robots are being introduced into the world and making a positive impact in industries like logistics, manufacturing, healthcare and hospitality, their use is still limited, and development costs are high.

That said, the sector is expected to grow. The humanoid robot market was valued at $1.8 billion in 2023, according to research firm MarketsandMarkets, and is predicted to increase to more than $13 billion over the next five years. Fueling that growth and demand will be advanced humanoid robots with greater AI capabilities and human-like features that can take on more duties in the service industry, education and healthcare.
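To put that forecast in perspective, the two figures quoted above imply a compound annual growth rate. The short Python sketch below works it out under stated assumptions: the end value is taken as exactly $13 billion (the article says "more than"), the horizon as exactly five years, and the function name `implied_cagr` is purely illustrative. It is a back-of-the-envelope calculation, not a figure from the MarketsandMarkets report.

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Return the compound annual growth rate that turns start_value
    into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
# Assumption: "more than $13 billion" is treated as exactly 13.0,
# so the result is a lower bound on the implied rate.
start_2023 = 1.8
forecast = 13.0
horizon_years = 5

rate = implied_cagr(start_2023, forecast, horizon_years)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions the implied growth is a little under 50 percent per year, meaning the market would have to grow by roughly half again every year to reach the forecast.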

Elon Musk and Tesla are confident in their advancements in autonomous driving and robot taxis, with Musk emphasizing the importance of trusting his vision and long-term planning for the success of the company.

Questions to inspire discussion.

How confident is Elon Musk in Tesla’s advancements in autonomous driving?