
Category: robotics/AI – Page 745

China creates world’s first AI child — shows human emotion

Chinese scientists have unveiled what they are calling the world’s first artificial intelligence (AI) child.

Developed by the Beijing Institute for General Artificial Intelligence (BIGAI), the virtual AI avatar, named Tong Tong or “Little Girl,” was recently introduced for the first time in Beijing.

BIGAI sees Tong Tong as a giant step toward achieving an artificial general intelligence (AGI) agent, a machine that can think and reason like a human being.

Tech Exec Predicts Billion-Dollar AI Girlfriend Industry

When witnessing the sorry state of men addicted to AI girlfriends, one Miami tech exec saw dollar signs instead of red flags.

In a blog-length post on X-formerly-Twitter, former WeWork exec Greg Isenberg said that after meeting a young guy who claims to spend $10,000 a month on so-called “AI girlfriends,” or relationship-simulating chatbots, he realized that eventually, someone is going to capitalize upon that market the way Match Group has with dating apps.

“I thought he was kidding,” Isenberg wrote. “But, he’s a 24-year-old single guy who loves it.”

Ray Kurzweil & Geoff Hinton Debate the Future of AI | EP #95

In this episode, recorded during the 2024 Abundance360 Summit, Ray, Geoffrey, and Peter debate whether AI will become sentient, what constitutes consciousness, and whether AI should have rights.

Ray Kurzweil, an American inventor and futurist, is a pioneer in artificial intelligence. He has contributed significantly to OCR, text-to-speech, and speech recognition technologies. He is the author of numerous books on AI and the future of technology and has received the National Medal of Technology and Innovation, among other honors. At Google, Kurzweil focuses on machine learning and language processing, driving advancements in technology and human potential.

Geoffrey Hinton, often referred to as the “godfather of deep learning,” is a British-Canadian cognitive psychologist and computer scientist recognized for his pioneering work in artificial neural networks. His research on neural networks, deep learning, and machine learning has significantly impacted the development of algorithms that can perform complex tasks such as image and speech recognition.

Read Ray’s latest book, The Singularity Is Nearer: When We Merge with AI

Follow Geoffrey on X: https://twitter.com/geoffreyhinton.

Learn more about Abundance360: https://www.abundance360.com/summit.


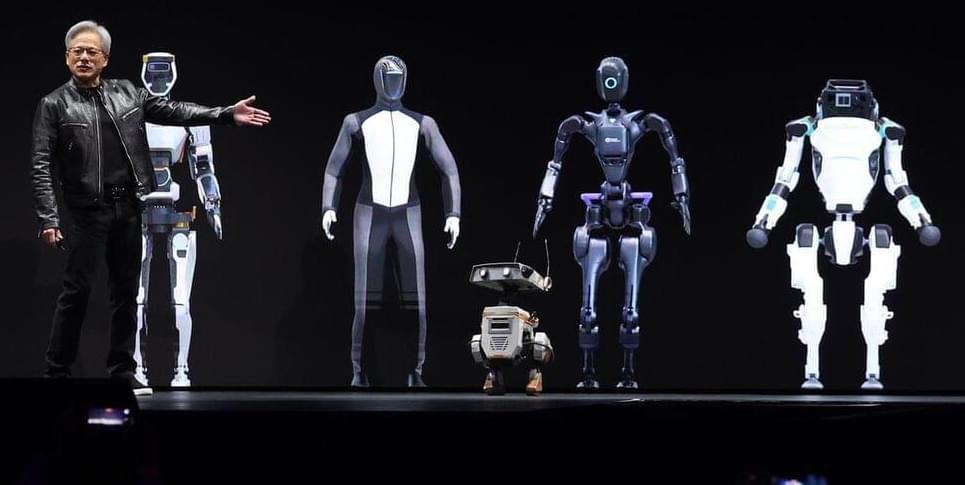

Amazon Grows To Over 750,000 Robots As World’s Second-Largest Private Employer Replaces Over 100,000 Humans

Amazon.com Inc. is rapidly advancing its use of robotics, deploying over 750,000 robots to work alongside its employees.

The world’s second-largest private employer employs 1.5 million people. While that’s a lot, it’s a decrease of over 100,000 employees from the 1.6 million workers it had in 2021. Meanwhile, the company had 520,000 robots in 2022 and 200,000 robots in 2019. While Amazon is bringing on hundreds of thousands of robots per year, the company is slowly decreasing its employee numbers.

The robots, including new models like Sequoia and Digit, are designed to perform repetitive tasks, thereby improving efficiency, safety and delivery speed for Amazon’s customers. Sequoia, for example, speeds up inventory management and order processing in fulfillment centers, while Digit, a bipedal robot developed in collaboration with Agility Robotics, handles tasks like moving empty tote boxes.