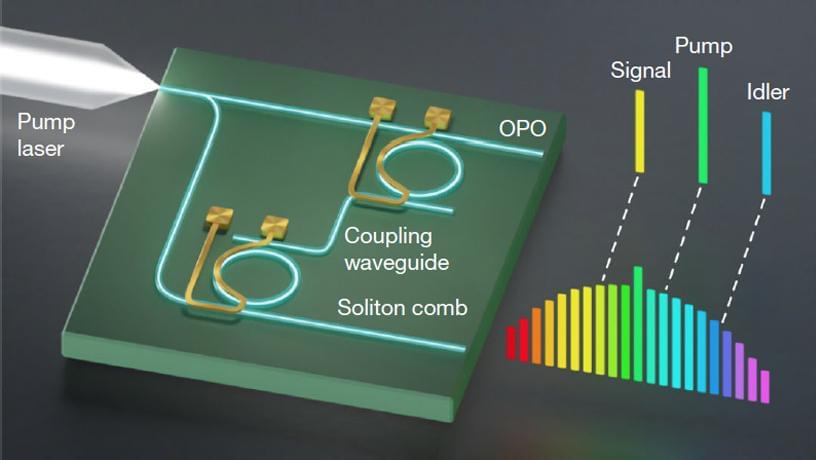

In a new Nature study, Columbia Engineering researchers have built a photonic chip that produces high-quality, ultra-low-noise microwave signals using only a single laser. The compact device, a chip so small it could fit on a sharp pencil point, achieves the lowest microwave noise ever observed in an integrated photonics platform.

The achievement provides a promising pathway towards small-footprint ultra-low-noise microwave generation for applications such as high-speed communication, atomic clocks, and autonomous vehicles.

The challenge

Electronic devices for global navigation, wireless communications, radar, and precision timing need stable microwave sources to serve as clocks and information carriers. A key to improving the performance of these devices is reducing the noise, or random fluctuations in phase, present on the microwave signal.