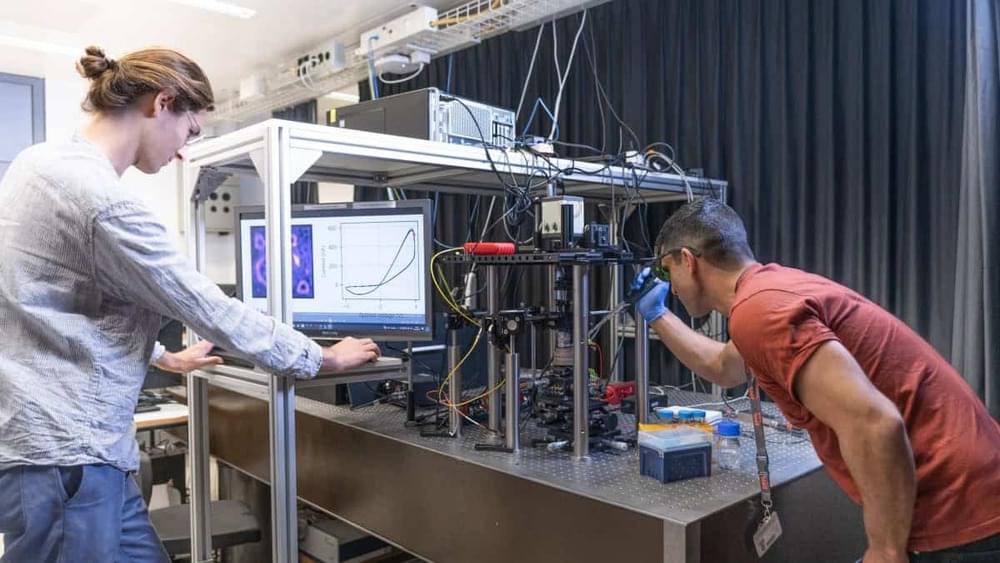

A memristor that uses changes in ion concentrations and mechanical deformations to store information has been developed by researchers at EPFL in Switzerland.

The research could lead to the development of electrolytic computers.

AI image generators that claim the ability to “undress” celebrities and random women are nothing new — but now, they’ve been spotted in monetized ads on Instagram.

As 404 Media reports, the ad library of Meta, the parent company of Facebook and Instagram, contained several paid posts promoting so-called “nudify” apps, which use AI to generate deepfaked nudes from photos of clothed people.

In one ad, a photo of Kim Kardashian was shown next to the words “undress any girl for free” and “try it.” In another, two AI-generated photos of a young-looking girl sit side by side — one with her wearing a long-sleeved shirt, another appearing to show her topless, with the words “any clothing delete” covering her breasts.

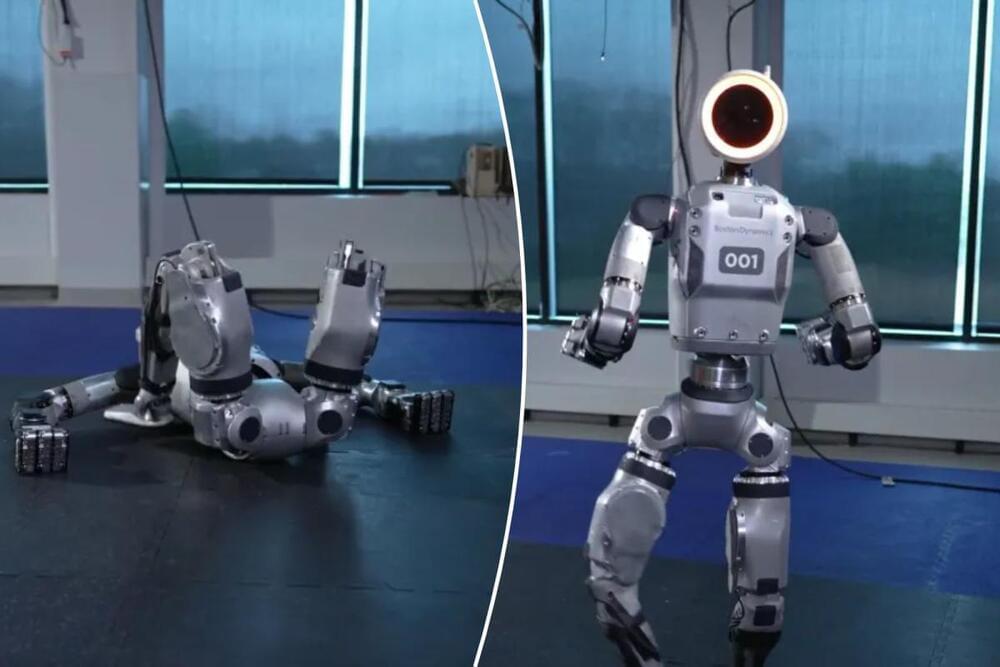

“This is the first look at a real product,” Boston Dynamics said. “But it certainly isn’t the last.”

“In the months and years ahead, we’re excited to show what the world’s most dynamic humanoid robot can really do – in the lab, in the factory, and in our lives,” the company also said.

Boston Dynamics partnered with the NYPD last year to roll out several crime-fighting robots to patrol Times Square and subway stations.

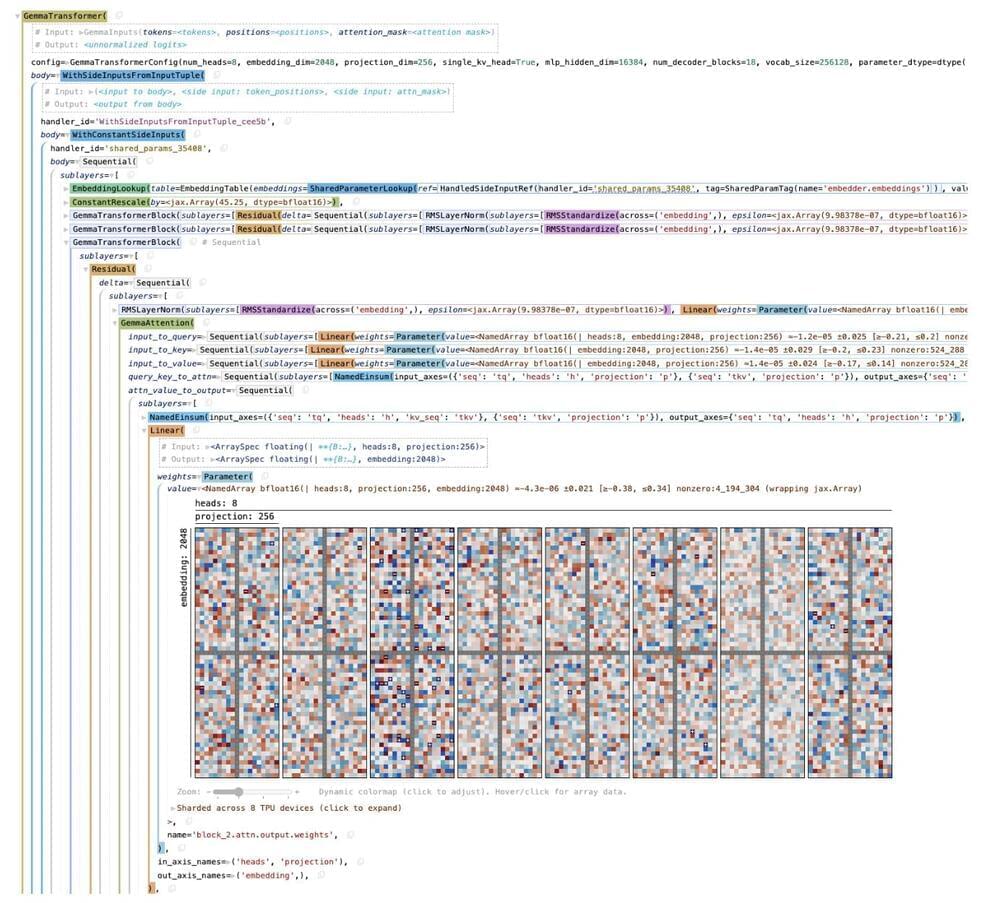

Google DeepMind has introduced Penzai, a JAX library for building, visualizing, and modifying neural networks. It is designed to integrate with Google Colab and the broader JAX ecosystem, with the goal of making AI models easier to inspect and manipulate.

Penzai takes an approach to neural network development that emphasizes transparency. Models are represented as legible pytrees, the nested container structures JAX uses to hold collections of arrays, which users can view and edit directly. This is especially useful once a model has been trained: inspecting its internals can shed light on how it operates, and targeted edits can steer it toward desired behavior.
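To make the pytree idea concrete, here is a minimal sketch in plain JAX. It does not use or reproduce Penzai's own API; it only shows what "a model as an editable pytree" means. A small, hypothetical two-layer network is stored as nested dictionaries of arrays, each parameter is listed by its path, and an edited copy is produced. The names `init_mlp`, `mlp`, and the `layer_i` keys are illustrative assumptions, not part of any library.

```python
import jax
import jax.numpy as jnp


def init_mlp(key, sizes):
    """Build a hypothetical MLP as a pytree: {"layer_i": {"w": ..., "b": ...}}."""
    params = {}
    keys = jax.random.split(key, len(sizes) - 1)
    for i, (k, n_in, n_out) in enumerate(zip(keys, sizes[:-1], sizes[1:])):
        params[f"layer_{i}"] = {
            "w": jax.random.normal(k, (n_in, n_out)) * 0.1,
            "b": jnp.zeros((n_out,)),
        }
    return params


def mlp(params, x):
    """Apply each layer in order, with ReLU between (not after) layers."""
    n = len(params)
    for i in range(n):
        layer = params[f"layer_{i}"]
        x = x @ layer["w"] + layer["b"]
        if i < n - 1:
            x = jax.nn.relu(x)
    return x


params = init_mlp(jax.random.PRNGKey(0), [4, 8, 2])

# "View": walk the pytree and print every leaf's path and shape.
leaves_with_paths, _ = jax.tree_util.tree_flatten_with_path(params)
for path, leaf in leaves_with_paths:
    print(jax.tree_util.keystr(path), leaf.shape)

# "Edit": build a new pytree in which the first layer's bias is set to 1.0.
# This is a functional update, so the original `params` is left unchanged.
edited = {
    **params,
    "layer_0": {**params["layer_0"], "b": jnp.ones_like(params["layer_0"]["b"])},
}

y_before = mlp(params, jnp.ones((3, 4)))
y_after = mlp(edited, jnp.ones((3, 4)))
```

Because JAX arrays are immutable, edits like this are usually written as functional updates that return a new structure. Keeping the original intact makes it straightforward to compare a model's outputs before and after a modification.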

By simplifying the process of modifying pre-trained neural networks, Penzai aims to make this kind of research accessible to more people. A wider range of researchers could then experiment with and build on existing models, which is important for advancing the field and discovering new applications for AI.