Watch Project Astra factorise a maths problem and even correct a graph, all shot on a prototype glasses device in a single take, in real time.

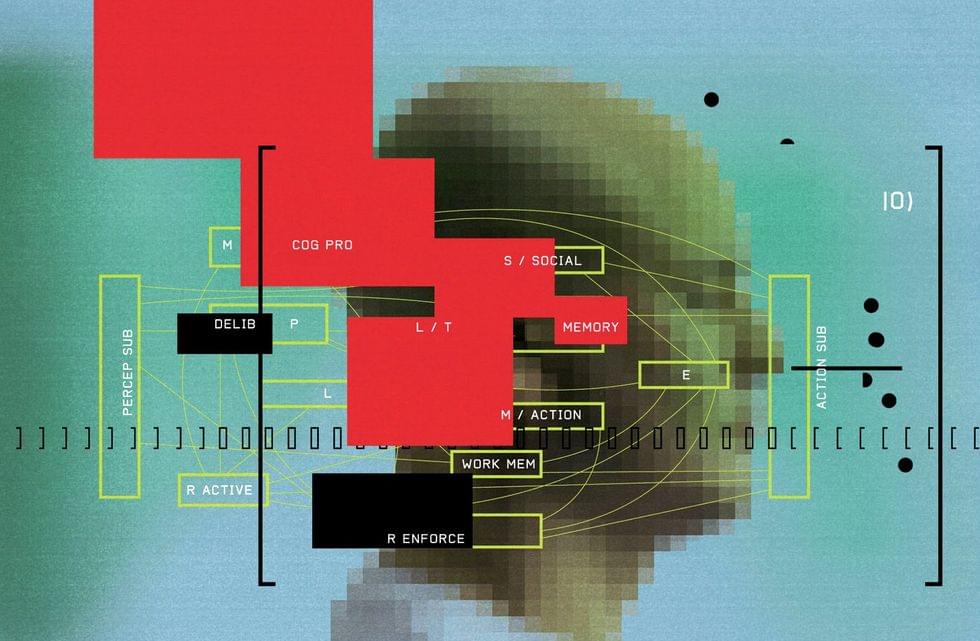

Project Astra is a prototype that explores the future of AI assistants. Building on our Gemini models, we’ve developed AI agents that can quickly process multimodal information, reason about the context you’re in, and respond to questions at a conversational pace, making interactions feel much more natural.

More about Project Astra: deepmind.google/project-astra