OpenAI has introduced GPT-4o, a model that powers a digital personal assistant able to interact using text and visuals 🤖

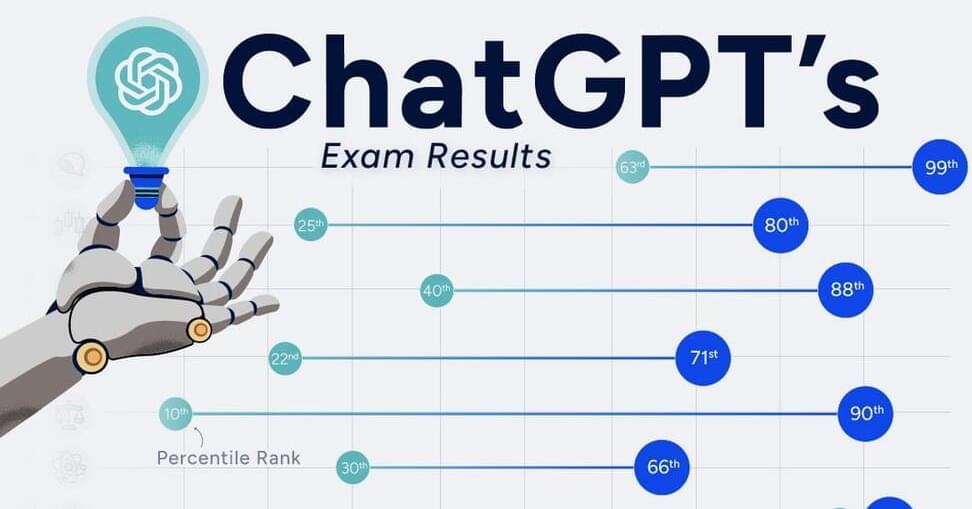

For comparison, here we look back at how GPT-4 performed on human exams a year ago.

From the archive:

A new Windows 11 feature that will remember what you did on your PC.

Microsoft has announced a new AI-powered feature for Windows 11 called ‘Recall,’ which records everything you do on your PC and lets you search through your past activity.

Recall works like a photographic memory for your PC, letting you find anything you’ve seen or done on your computer in an organized way using natural-language queries.

With Recall, you can scroll through your timeline to find content from any app, website, or document you have opened. The feature also uses these snapshots to suggest actions based on what it recognizes, making it easy to return to a specific email in Outlook or the right chat in Teams.

Joints are essential in robotics, especially in robots that try to emulate the motion of animals, humans included. Unlike the average automaton, animals are not outfitted with bearings and similar types of joints; instead they rely partly on ball joints and heavily on rolling contact joints (RCJs). Because RCJs are part of the skeletal structure itself, they are ideal for small, compact joints. [Breaking Taps] came to the same conclusion while designing the legs for a bird-like automaton.

RCJs do more than provide surfaces that contact each other while rotating: their shape also constrains how far a joint can move, both forward and backward and sideways. In biological joints, these contact surfaces are also covered with a constantly renewing layer that prevents direct bone-on-bone contact. RCJs are fairly common in robotics, for example in the humanoid DRACO 3 platform, as detailed in a 2023 research article by [Seung Hyeon Bang] and colleagues in Frontiers in Robotics and AI.

The other aspect of RCJs is that they have to be restrained with a compliant mechanism. In the video, [Breaking Taps] uses fishing line for this, but many other options are available. The ‘best option’ depends on how the joint is used and on the forces it will be subjected to. For further reading on kinematics in robotics and related fields, we covered the book Exact Constraint: Machine Design Using Kinematic Principles by [Douglass L. Blanding] a while ago.
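The rolling geometry of an RCJ can be made concrete with a small calculation. The sketch below assumes the simplest textbook case: two circular contact surfaces of equal radius `r` rolling on each other without slip (real RCJs, including the ones in the video, may use other profiles). In that case the line joining the two arc centres turns by only half the joint angle, so the upper link's centre travels on a circle of radius `2r` and the contact point stays halfway between the centres. The function names and the symmetric limit check are hypothetical illustrations, not taken from [Breaking Taps] or the DRACO 3 paper.

```python
import math

def rcj_pose(theta, r):
    """Equal-radius rolling contact joint, lower surface centred at the origin.

    theta: rotation of the upper link relative to the lower link (radians),
           positive when the upper link tilts toward +x.
    r:     radius of both rolling surfaces (assumed equal).
    Returns (upper_centre, contact_point, arc_rolled).
    """
    half = theta / 2.0  # the line of centres turns by only half the joint angle
    upper_centre = (2 * r * math.sin(half), 2 * r * math.cos(half))
    # With equal radii the contact point is the midpoint of the line of centres.
    contact_point = (r * math.sin(half), r * math.cos(half))
    # Arc length unrolled on each surface; equal on both because there is no slip.
    arc_rolled = r * abs(half)
    return upper_centre, contact_point, arc_rolled

def within_limits(theta, theta_max):
    """Hypothetical symmetric range-of-motion check, standing in for the joint's end stops."""
    return -theta_max <= theta <= theta_max

centre, contact, arc = rcj_pose(math.radians(60), r=10.0)
```

Because the instantaneous centre of rotation is the moving contact point rather than a fixed pin, nothing in the geometry itself holds the two surfaces together, which is why the compliant restraint is needed.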

ChatGPT-4 is excellent at physics work, aiding the user much as Wolfram Alpha has done.

Artificial intelligence (AI) technologies have long influenced education, and their impact has grown markedly since the launch of ChatGPT-3.5 at the end of November 2022. In physics education, recent research on how ChatGPT-3.5 solves physics problems found that its problem-solving abilities were only at the level of novice students, not enough to cause serious alarm in the field. However, ChatGPT-4 brought substantial improvements in reasoning and conciseness. How does this translate into performance on physics problems, and what impact might it have on education?

Brain-machine interfaces are devices that enable direct communication between the brain’s electrical activity and an external device, such as a computer or a robotic limb, allowing people to control machines with their thoughts.

In the fast-evolving landscape of technology, neuromorphic computing is emerging as a new and largely unexplored frontier. This approach to computation, inspired by the workings of the human brain, promises to tackle artificial intelligence (AI) and advanced data processing with much greater efficiency and agility.

Neuromorphic computing is, at its core, an effort to mirror the human brain’s architecture and functionality in computer hardware. It represents a significant shift from traditional computing, toward machines that not only compute but also learn and adapt in ways that resemble the human brain. The technology uses artificial neurons and synapses, forming networks that process information in a way loosely analogous to our cognitive processes. The ultimate goal is systems that handle sophisticated tasks with the agility and energy efficiency of the brain.
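A common building block in neuromorphic systems is the spiking neuron. As an illustration, the sketch below simulates a leaky integrate-and-fire (LIF) neuron, one widely used model (individual neuromorphic chips implement their own variants). The membrane potential `v` follows tau · dv/dt = -(v - v_rest) + R · I(t): it leaks toward a resting value, integrates the input current, and emits a spike and resets when it crosses a threshold. The function name and all parameter values are arbitrary, dimensionless assumptions chosen for readability, and the integration uses a simple forward-Euler step.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_reset=0.0, v_thresh=1.0, resistance=1.0):
    """Return the time-step indices at which a LIF neuron spikes.

    Forward-Euler integration of  tau * dv/dt = -(v - v_rest) + R * I(t).
    """
    v = v_rest
    spike_steps = []
    for step, current in enumerate(input_current):
        # Leak toward v_rest while integrating the input current.
        v += (dt / tau) * (-(v - v_rest) + resistance * current)
        if v >= v_thresh:          # threshold crossing: emit a spike
            spike_steps.append(step)
            v = v_reset            # reset the membrane potential
    return spike_steps

weak = simulate_lif([0.5] * 1000)   # R*I below threshold: never fires
strong = simulate_lif([2.0] * 200)  # R*I above threshold: fires periodically
```

With a constant input whose steady-state value R·I stays below the threshold, the neuron never fires; a stronger input makes it fire at regular intervals. Information is thus carried by when and how often spikes occur rather than by continuous values, which is part of what lets event-driven neuromorphic hardware stay idle, and save energy, when nothing is happening.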

Neuromorphic computing traces back to the late 20th century, rooted in the work of researchers who sought to bridge biological brain function and electronic computing. The concept gained momentum in the 1980s through the vision of Carver Mead, a Caltech scientist and engineer who proposed using analog circuits to mimic neural processes. Since then, fueled by advances in neuroscience and technology, the field has grown from a theoretical concept into working hardware with broad potential.

These businesses are building tech that could exceed the abilities of today’s AI.

The field of artificial intelligence is still young, yet several businesses are already working on technology that could become the foundation for AI’s future. These companies are developing quantum computing systems that, they say, could process in seconds workloads that would take a conventional computer decades.

Where a classical computer works on bits that are each either 0 or 1, a quantum machine manipulates qubits in superposition and uses interference, which for certain classes of problems can yield large speedups. Proponents argue this could push AI beyond the abilities of the most powerful supercomputers, helping with tasks such as driving cars and finding cures for diseases.
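To illustrate the idea of quantum parallelism, and its limits, the sketch below classically simulates a tiny qubit register in plain Python. Applying a Hadamard gate to each of n qubits puts the register in an equal superposition of all 2**n basis states, yet a measurement returns just one of them at random. Useful quantum speedups come from algorithms that use interference to make the right answer likely, which this toy does not attempt. The helper names are hypothetical, and the classical simulation itself needs memory that grows as 2**n, which is exactly the cost real quantum hardware is meant to avoid.

```python
import math
import random

# Hadamard gate as a 2x2 matrix.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply_gate(state, gate, target):
    """Apply a single-qubit gate to qubit `target` of a state vector.

    Bit `target` of each basis index holds that qubit's value (little-endian).
    """
    new_state = list(state)
    mask = 1 << target
    for i in range(len(state)):
        if (i & mask) == 0:            # pair |..0..> with its partner |..1..>
            j = i | mask
            a0, a1 = state[i], state[j]
            new_state[i] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state

def uniform_superposition(n_qubits):
    """Start in |00...0> and apply H to every qubit."""
    state = [0j] * (2 ** n_qubits)     # 2**n complex amplitudes
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_gate(state, H, q)
    return state

def measure(state, rng=random):
    """Sample one basis state with probability |amplitude|**2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=1)[0]

state = uniform_superposition(3)
outcome = measure(state)  # a single index in 0..7, not all eight results
```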

China’s first major sodium-ion battery energy storage station is now online, according to state-owned utility China Southern Power Grid Energy Storage.

The Fulin Sodium-ion Battery Energy Storage Station entered operation on May 11 in Nanning, the capital of the Guangxi Zhuang Autonomous Region in southern China. Its initial storage capacity is reported to be 10 megawatt-hours (MWh). Once fully built out, the station is expected to reach a total capacity of 100 MWh.

The state utility says the 10 MWh station uses 210 Ah sodium-ion battery cells that charge to 90% in just 12 minutes. The system comprises 22,000 cells.
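The reported figures allow a quick back-of-the-envelope check. Charging to 90% in 12 minutes corresponds to an average rate of 0.9 / 0.2 h = 4.5C, or about 945 A per 210 Ah cell, and 10 MWh spread over 22,000 cells works out to roughly 455 Wh per cell. The sketch below reproduces this arithmetic under stated assumptions: charging starts from empty at a constant average current, and the helper name is hypothetical. The utility did not state the cell voltage or whether 10 MWh is nameplate or usable capacity, so treat these numbers as rough estimates.

```python
# Figures reported by China Southern Power Grid Energy Storage.
STATION_CAPACITY_WH = 10e6   # 10 MWh initial capacity
CELL_COUNT = 22_000
CELL_CAPACITY_AH = 210

def average_c_rate(soc_fraction, minutes):
    """Average C-rate needed to add `soc_fraction` of capacity in `minutes`.

    Assumes constant current; real chargers usually taper near full charge.
    """
    return soc_fraction / (minutes / 60)

c_rate = average_c_rate(0.90, 12)                 # assumes charging starts from empty
avg_cell_current_a = c_rate * CELL_CAPACITY_AH    # amps per cell
energy_per_cell_wh = STATION_CAPACITY_WH / CELL_COUNT

print(f"average rate: {c_rate:.1f}C")
print(f"average cell current: {avg_cell_current_a:.0f} A")
print(f"implied energy per cell: {energy_per_cell_wh:.0f} Wh")
```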