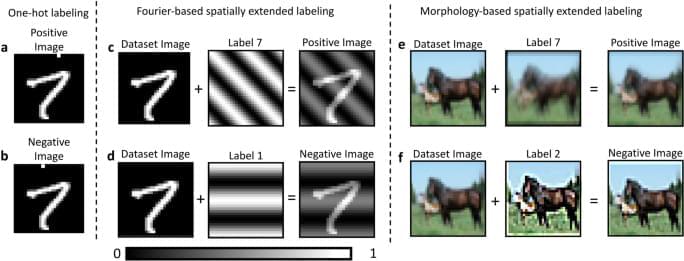

Scodellaro, R., Kulkarni, A., Alves, F. et al. Training convolutional neural networks with the Forward–Forward Algorithm. Sci Rep 15, 38461 (2025). https://doi.org/10.1038/s41598-025-26235-2

After years of fast expansion and billion-dollar bets, 2026 may mark the moment artificial intelligence confronts its actual utility. In their predictions for the next year, Stanford faculty across computer science, medicine, law, and economics converge on a striking theme: The era of AI evangelism is giving way to an era of AI evaluation. Whether it’s standardized benchmarks for legal reasoning, real-time dashboards tracking labor displacement, or clinical frameworks for vetting the flood of medical AI startups, the coming year demands rigor over hype. The question is no longer “Can AI do this?” but “How well, at what cost, and for whom?”

Learn more about what Stanford HAI faculty expect in the new year.

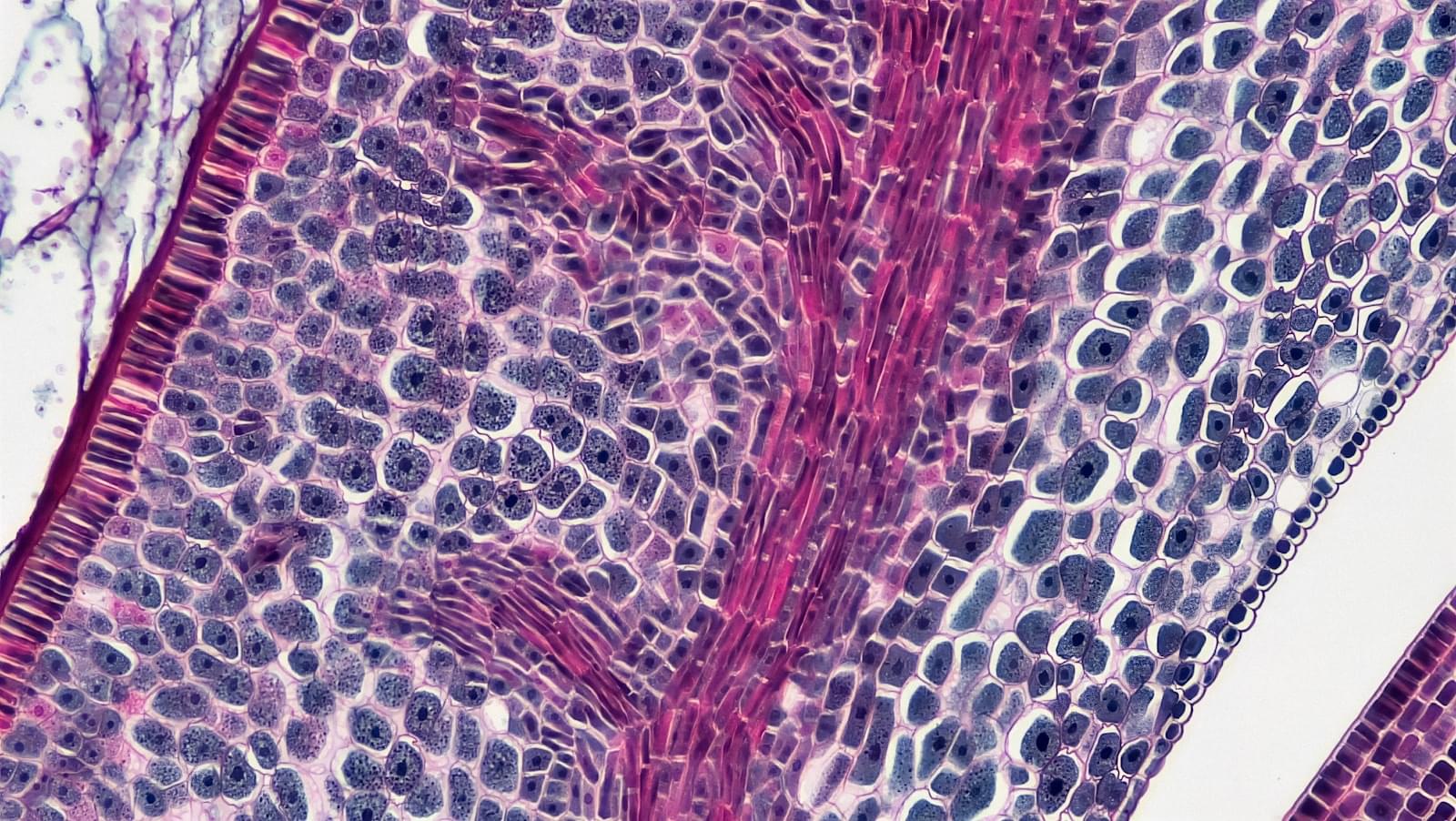

A research team affiliated with UNIST has introduced a technology that generates electricity from raindrops striking rooftops, offering a self-powered approach to automated drainage control and flood warning during heavy rainfall.

Led by Professor Young-Bin Park of the Department of Mechanical Engineering at UNIST, the team developed a droplet-based electricity generator (DEG) using carbon fiber-reinforced polymer (CFRP). This device, called the superhydrophobic fiber-reinforced polymer DEG (S-FRP-DEG), converts the impact of falling rain into electrical signals capable of operating stormwater management systems without an external power source. The findings are published in Advanced Functional Materials.

CFRP composites are lightweight yet durable, and they are used in applications such as aerospace and construction because of their strength and resistance to corrosion. These characteristics make them well suited for long-term outdoor installation on rooftops and other exposed urban structures.

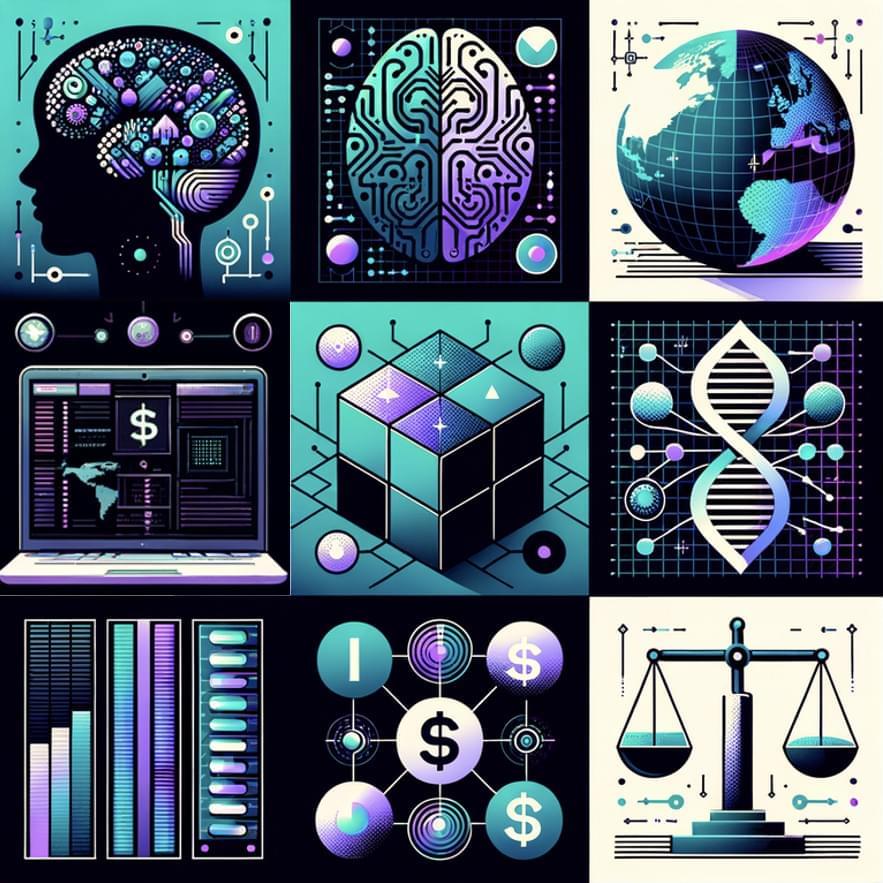

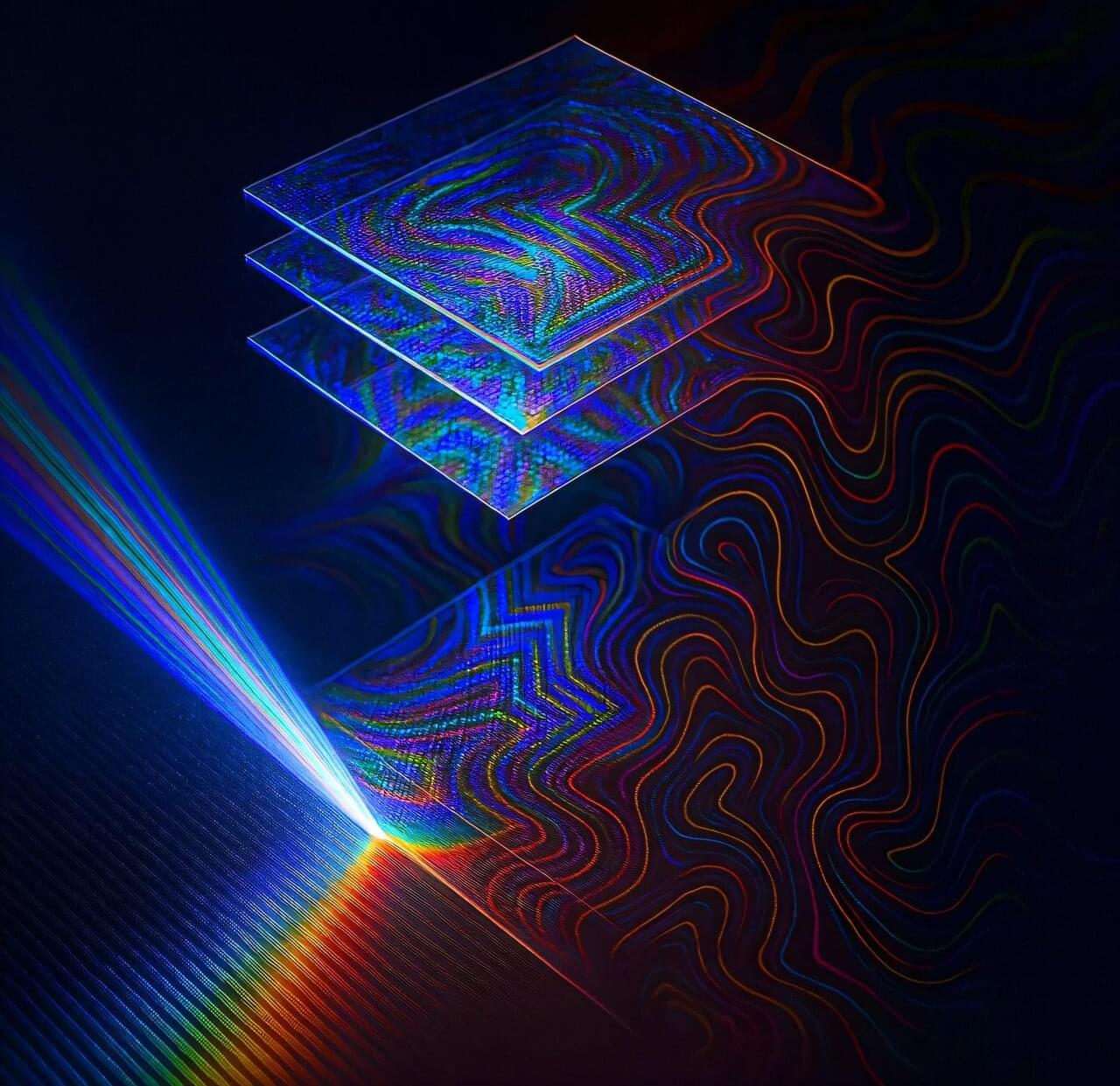

Optical computing has emerged as a powerful approach for high-speed and energy-efficient information processing. Diffractive optical networks, in particular, enable large-scale parallel computation through the use of passive structured phase masks and the propagation of light. However, one major challenge remains: systems trained in model-based simulations often fail to perform optimally in real experimental settings, where misalignments, noise, and model inaccuracies are difficult to capture.

In a new paper published in Light: Science & Applications, researchers at the University of California, Los Angeles (UCLA) introduce a model-free in situ training framework for diffractive optical processors, driven by proximal policy optimization (PPO), a reinforcement learning algorithm known for stability and sample efficiency. Rather than rely on a digital twin or the knowledge of an approximate physical model, the system learns directly from real optical measurements, optimizing its diffractive features on the hardware itself.

“Instead of trying to simulate complex optical behavior perfectly, we allow the device to learn from experience or experiments,” said Aydogan Ozcan, Chancellor’s Professor of Electrical and Computer Engineering at UCLA and the corresponding author of the study. “PPO makes this in situ process fast, stable, and scalable to realistic experimental conditions.”
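For readers unfamiliar with PPO, the heart of the algorithm is a clipped surrogate objective that limits how far each update can move the policy away from the one that collected the data, which is one reason it is regarded as stable. The snippet below is a minimal Python sketch of that general objective only. It is not the UCLA team's implementation, and the function name, argument names, and the default clipping value of 0.2 are illustrative assumptions rather than details from the paper.

```python
import math


def ppo_clipped_objective(new_logp, old_logp, advantages, eps=0.2):
    """Mean PPO clipped surrogate objective (a quantity to maximize).

    new_logp / old_logp: log-probabilities of the sampled actions under the
    updated policy and the data-collecting policy (hypothetical inputs).
    advantages: estimated advantage of each sampled action.
    eps: clipping range; 0.2 is a common default, assumed here.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        # Probability ratio between the updated and the old policy.
        ratio = math.exp(lp_new - lp_old)
        # Restrict the ratio to [1 - eps, 1 + eps].
        clipped = max(1.0 - eps, min(ratio, 1.0 + eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# A sampled action that became twice as likely and had a positive advantage:
# the gain is capped by the clip, so large policy jumps are not rewarded.
print(ppo_clipped_objective([math.log(2.0)], [0.0], [1.0]))
```

In an in situ setting like the one described, the "actions" would correspond to sampled settings of the diffractive features and the advantages would be derived from measured optical outputs. That mapping is an interpretation of the article, not a detail it spells out.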

Researchers at the University of California, Los Angeles (UCLA) have developed an optical computing framework that performs large-scale nonlinear computations using linear materials.

Reported in eLight, the study demonstrates that diffractive optical processors—thin, passive material structures composed of phase-only layers—can compute numerous nonlinear functions simultaneously, executed rapidly at extreme parallelism and spatial density, bound by the diffraction limit of light.

Nonlinear operations underpin nearly all modern information-processing tasks, from machine learning and pattern recognition to general-purpose computing. Yet, implementing such operations optically has remained a challenge, as most nonlinear optical effects are weak, power-hungry, or slow.

An AI model using deep transfer learning, an advanced form of machine learning, has predicted spoken language outcomes with 92% accuracy one to three years after patients received cochlear implants (implanted electronic hearing devices).

The research is published in the journal JAMA Otolaryngology–Head & Neck Surgery.

Although cochlear implantation is the only effective treatment to improve hearing and enable spoken language for children with severe to profound hearing loss, spoken language development after early implantation is more variable than in children born with typical hearing. If children who are likely to have more difficulty with spoken language are identified prior to implantation, intensified therapy can be offered earlier to improve their speech.

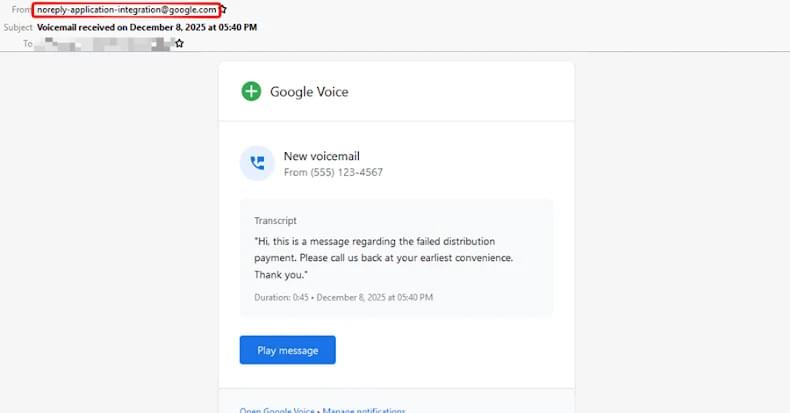

In response to the findings, Google has blocked the phishing efforts that abuse the email notification feature within Google Cloud Application Integration, adding that it’s taking more steps to prevent further misuse.

Check Point’s analysis has revealed that the campaign has primarily targeted the manufacturing, technology, financial, professional services, and retail sectors, although other industry verticals, including media, education, healthcare, energy, government, travel, and transportation, have also been singled out.

“These sectors commonly rely on automated notifications, shared documents, and permission-based workflows, making Google-branded alerts especially convincing,” it added. “This campaign highlights how attackers can misuse legitimate cloud automation and workflow features to distribute phishing at scale without traditional spoofing.”