Modern computer chips generate a lot of heat and consume large amounts of energy as a result. A promising way to reduce this energy demand may lie in the cold, as highlighted in a new Perspective article by an international research team coordinated by Qing-Tai Zhao from Forschungszentrum Jülich. According to the researchers, the energy savings could reach as much as 80%.

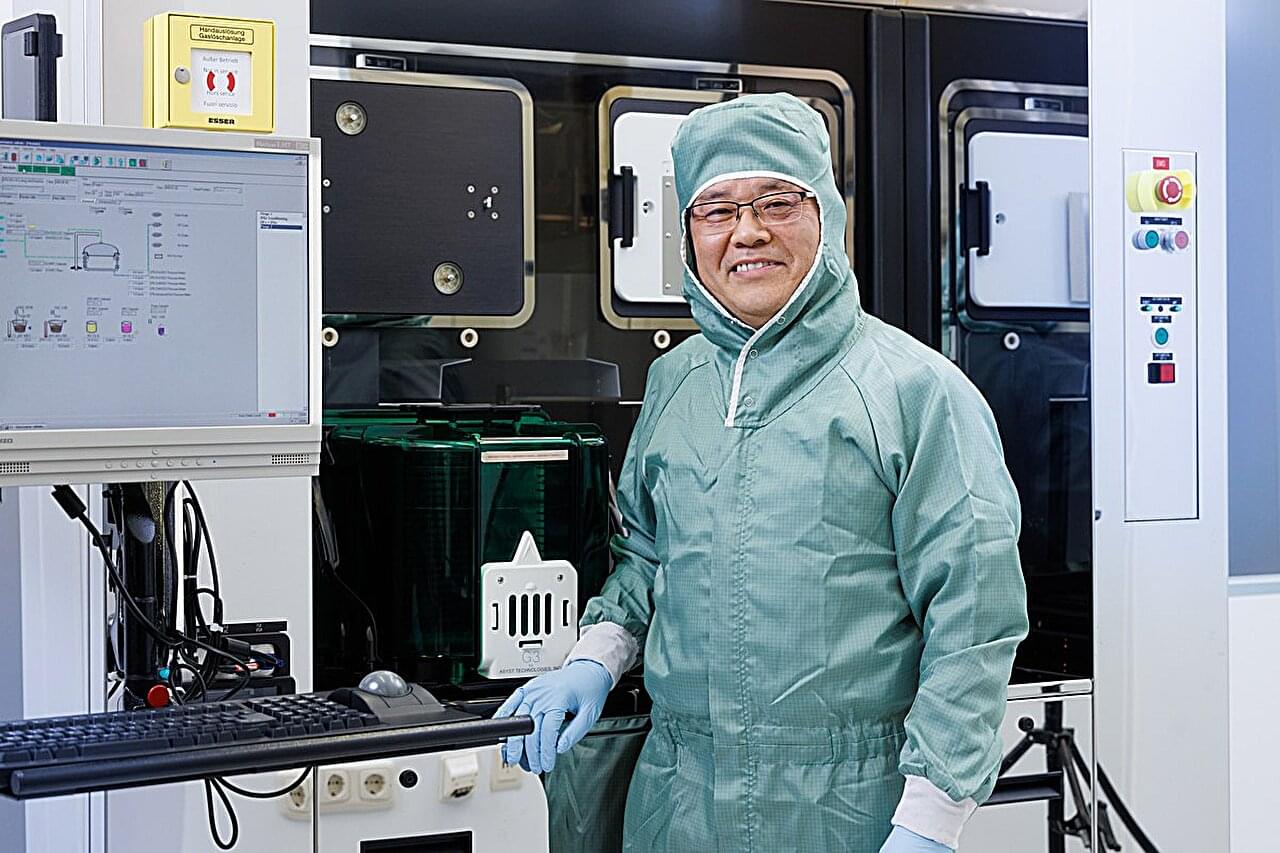

The work was conducted in collaboration with Prof. Joachim Knoch from RWTH Aachen University and researchers from EPFL in Switzerland, TSMC and National Yang Ming Chiao Tung University (NYCU) in Taiwan, and the University of Tokyo. In the article, published in Nature Reviews Electrical Engineering, the authors outline how conventional CMOS technology can be adapted for cryogenic operation using novel materials and intelligent design strategies.

Data centers already consume vast amounts of electricity, and according to the International Energy Agency (IEA), their power requirements are expected to double by 2030 due to the rising energy demands of artificial intelligence. The computer chips that process data around the clock produce large amounts of heat and require considerable energy for cooling. But what if we flipped the script? What if the key to energy efficiency lay not in managing heat, but in embracing the cold?