Researchers at the University of California, Los Angeles (UCLA) have developed an optical computing framework that performs large-scale nonlinear computations using linear materials.

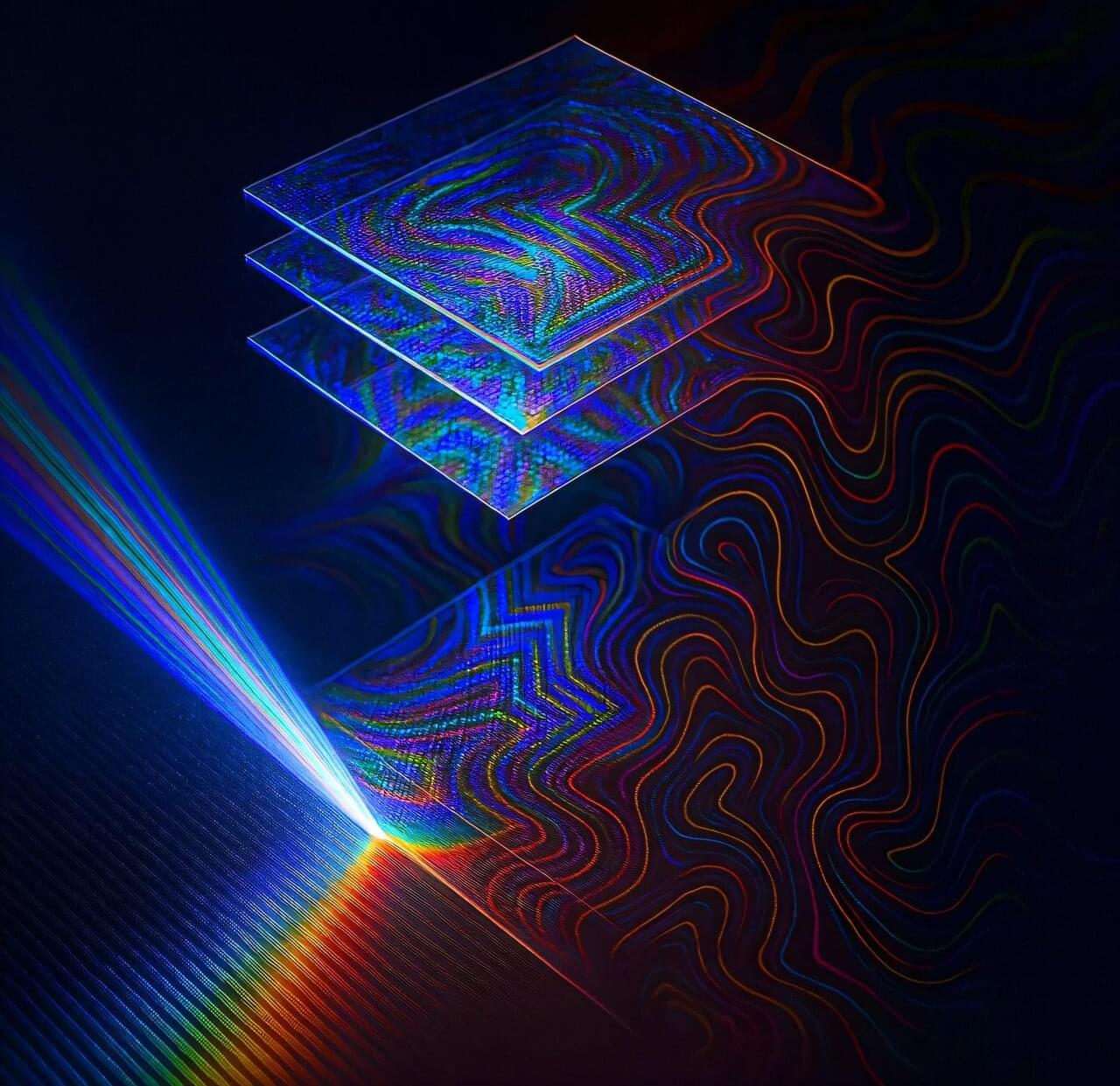

Reported in eLight, the study demonstrates that diffractive optical processors (thin, passive material structures composed of phase-only layers) can compute numerous nonlinear functions simultaneously. These computations run rapidly, with a degree of parallelism and spatial density limited only by the diffraction limit of light.
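The article does not spell out the mechanism at this point. As a toy illustration only, and not the UCLA team's method, the Python sketch below shows one well-known way a system that acts linearly on the optical field can still produce an output that is nonlinear in its input: write the input value into the phase of the light and detect intensity. The pixel count, the half-aperture phase encoding, the helper names (`encode`, `phase_layer`, `processor`), and the use of a discrete Fourier transform as a stand-in for free-space propagation are all assumptions made for illustration.

```python
import cmath
import math


def dft(field):
    """Unitary discrete Fourier transform.

    Assumption: used here as a simple linear stand-in for free-space
    (far-field) propagation between layers.
    """
    n = len(field)
    return [
        sum(field[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        / math.sqrt(n)
        for k in range(n)
    ]


def phase_layer(field, phases):
    """Thin phase-only layer: multiplies each pixel by exp(i * phase).

    Linear in the field and lossless, since |exp(i * phase)| == 1.
    """
    return [u * cmath.exp(1j * p) for u, p in zip(field, phases)]


def encode(x, n):
    """Hypothetical encoding: the scalar input x sets the phase of the
    first half of the pixels; the other half carries a fixed reference."""
    return [cmath.exp(1j * math.pi * x) if j < n // 2 else 1.0 + 0j for j in range(n)]


def processor(x, layers, detector=0):
    """Encode x, apply each phase-only layer followed by propagation,
    then detect intensity (|field|^2) at a single output pixel."""
    field = encode(x, len(layers[0]))
    for phases in layers:
        field = dft(phase_layer(field, phases))
    return abs(field[detector]) ** 2


# Usage: a single 8-pixel layer with zero phase.
layers = [[0.0] * 8]
for x in (0.0, 0.25, 0.5, 1.0):
    print(x, round(processor(x, layers), 4))
```

Because every phase layer and propagation step in this toy acts linearly on the field, the detected intensity always takes the form C + D·cos(πx + θ), with constants fixed by the layers. The output is therefore nonlinear in x even though no nonlinear material is involved; richer function shapes would need other encodings, which this sketch does not attempt to model.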

Nonlinear operations underpin nearly all modern information-processing tasks, from machine learning and pattern recognition to general-purpose computing. Yet implementing such operations optically has remained a challenge, because most nonlinear optical effects are weak, require high optical power, or respond slowly.