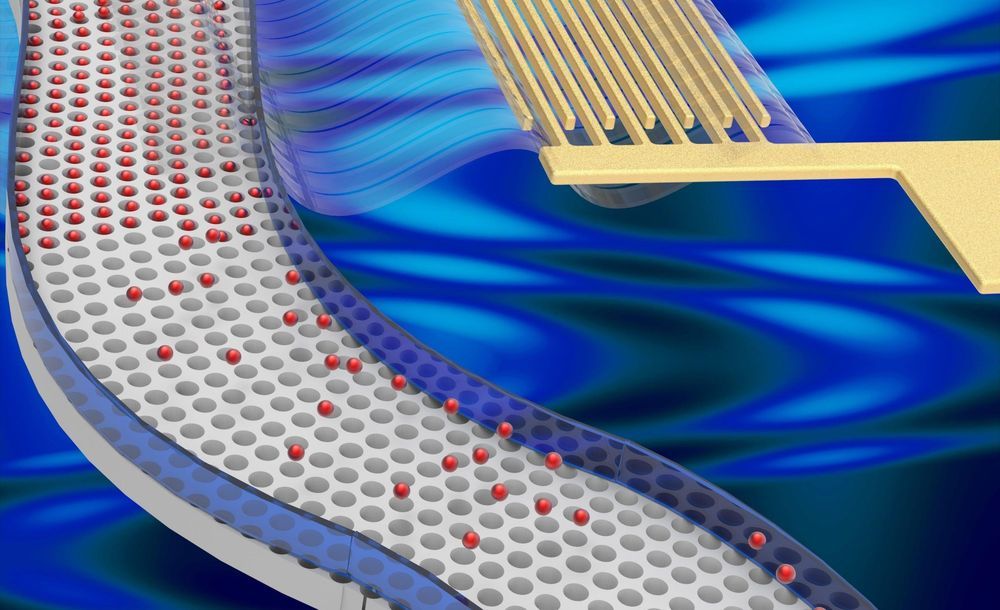

Acoustofluidics, the fusion of acoustics and fluid mechanics, provides contact-free, rapid and effective manipulation of fluids and suspended particles. An applied acoustic wave can produce a non-zero time-averaged pressure field that exerts an acoustic radiation force on particles suspended in a microfluidic channel. However, for particles below a critical size, the viscous drag force dominates over the acoustic radiation force because of the strong acoustic streaming that results from the dissipation of acoustic energy in the fluid. Particle size is therefore a key limiting factor in the use of acoustic fields for manipulation and sorting, applications that would otherwise be useful in fields including sensing (plasmonic nanoparticles), biology (small bioparticle enrichment) and optics (micro-lenses).
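The critical size mentioned above can be illustrated with a rough scaling estimate. The sketch below is not taken from the research described here: it assumes the textbook small-particle expression for the peak radiation force in a one-dimensional standing wave (proportional to the particle radius cubed) and Stokes drag from a steady streaming flow (proportional to the radius). Every numerical value (frequency, acoustic energy density, contrast factor, streaming velocity) is a hypothetical placeholder chosen only to show how the balance shifts with size.

```python
import math


def radiation_force(a, k, e_ac, phi):
    """Peak radiation force (N) on a small sphere of radius a (m) in a
    1D standing wave, valid only when a is much smaller than the wavelength.
    k: wavenumber (rad/m), e_ac: acoustic energy density (J/m^3),
    phi: acoustic contrast factor (dimensionless)."""
    return 4.0 * math.pi * phi * k * a**3 * e_ac


def stokes_drag(a, eta, v):
    """Stokes drag (N) on a sphere of radius a (m) in a flow of speed v (m/s).
    eta: dynamic viscosity (Pa*s)."""
    return 6.0 * math.pi * eta * a * v


def critical_radius(k, e_ac, phi, eta, v):
    """Radius (m) at which the two forces above are equal."""
    return math.sqrt(3.0 * eta * v / (2.0 * phi * k * e_ac))


# Hypothetical values for illustration only (assumptions, not from the study).
FREQ = 10e6          # driving frequency, Hz
C_WATER = 1480.0     # approximate speed of sound in water, m/s
K = 2.0 * math.pi * FREQ / C_WATER
E_AC = 10.0          # acoustic energy density, J/m^3
PHI = 0.17           # contrast factor, roughly polystyrene in water
ETA = 1.0e-3         # viscosity of water, Pa*s
V_STREAM = 100e-6    # streaming velocity, m/s

if __name__ == "__main__":
    a_c = critical_radius(K, E_AC, PHI, ETA, V_STREAM)
    print(f"critical radius: {a_c * 1e6:.2f} um")
    for a in (a_c / 10.0, a_c, a_c * 10.0):
        ratio = radiation_force(a, K, E_AC, PHI) / stokes_drag(a, ETA, V_STREAM)
        print(f"a = {a * 1e6:.3f} um, F_rad / F_drag = {ratio:.3g}")
```

Because the force ratio grows with the square of the radius, a particle ten times smaller than the critical radius experiences a radiation force about one hundredth of the drag, which is why streaming dominates for small particles under these assumptions.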

Although acoustic nanoparticle manipulation has been demonstrated, terahertz (THz) or gigahertz (GHz) frequencies are usually required to create nanoscale wavelengths, and fabricating surface acoustic wave (SAW) transducers with the very small feature sizes needed at these frequencies is challenging. In addition, the positioning of single nanoparticles into discrete traps has not been demonstrated in nanoacoustic fields. Hence, there is a pressing need for a fast, precise and scalable method of manipulating individual nano- and submicron-scale particles in acoustic fields using megahertz (MHz) frequencies.
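The link between frequency and wavelength follows from the relation wavelength = wave speed / frequency. As a minimal sketch, the snippet below evaluates it for a wave travelling in water, assuming a sound speed of about 1480 m/s; the function name and the sample frequencies are illustrative choices, not values from the study. A SAW on a piezoelectric substrate travels at a different, substrate-dependent speed, so these figures indicate orders of magnitude only.

```python
C_WATER = 1480.0  # approximate speed of sound in water, m/s (assumed)


def wavelength(frequency_hz, speed=C_WATER):
    """Return the acoustic wavelength in metres for a given frequency in Hz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


if __name__ == "__main__":
    # Representative MHz, GHz and THz frequencies (illustrative only).
    for label, f in (("10 MHz", 10e6), ("1 GHz", 1e9), ("1 THz", 1e12)):
        print(f"{label}: wavelength = {wavelength(f) * 1e9:.3g} nm")
```

Under this assumption a 10 MHz wave has a wavelength of roughly 148 micrometres, far larger than a submicron particle, whereas nanometre-scale wavelengths only appear toward the upper GHz and THz range.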

An interdisciplinary research team led by Associate Professor Ye Ai from the Singapore University of Technology and Design (SUTD) and Dr. David Collins from the University of Melbourne, in collaboration with Professor Jongyoon Han from MIT and Associate Professor Hong Yee Low from SUTD, has developed a novel acoustofluidic technology for massively multiplexed trapping of submicron particles within nanocavities at the single-particle level.