Following the release of the Perception Dataset and the conclusion of its 2019 object detection competition, Lyft today shared a new corpus, the Prediction Dataset, which contains logs of the movements of cars, pedestrians, and other obstacles encountered by its fleet of 23 autonomous vehicles in Palo Alto. Alongside it, the company plans to launch a challenge that will task entrants with predicting the motion of traffic agents.

The kickoff of Lyft’s second challenge comes months after Waymo expanded its public driving data set and launched the $110,000 Waymo Open Dataset competition. Winners were announced in mid-June during a workshop at the 2020 Conference on Computer Vision and Pattern Recognition (CVPR), which was held online this year due to the coronavirus pandemic.

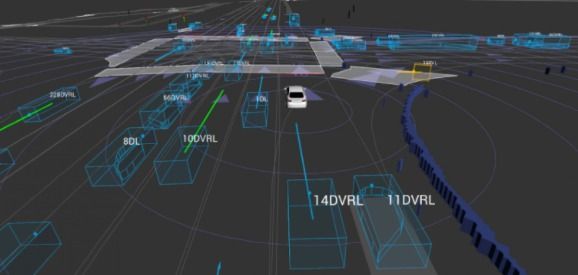

A longstanding research problem in the self-driving domain is building models robust and reliable enough to predict traffic motion. Lyft’s data set targets motion prediction by including the movements of the traffic agents its fleet encountered, such as cars, cyclists, and pedestrians. These movements are derived from data collected by the sensor suite mounted on the roof of Lyft’s vehicles, which captures lidar and radar readings as the vehicles drive tens of thousands of miles:

Logs of over 1,000 hours of traffic agent movement.