Machines that grip, grapple, and maneuver will soon have their go at maintaining the fleet of small spacecraft that encircle Earth.


Retina-inspired sensors for more adaptive visual perception

To monitor and navigate real-world environments, machines and robots should be able to gather images and measurements under different background lighting conditions. In recent years, engineers worldwide have thus been trying to develop increasingly advanced sensors, which could be integrated within robots, surveillance systems, or other technologies that can benefit from sensing their surroundings.

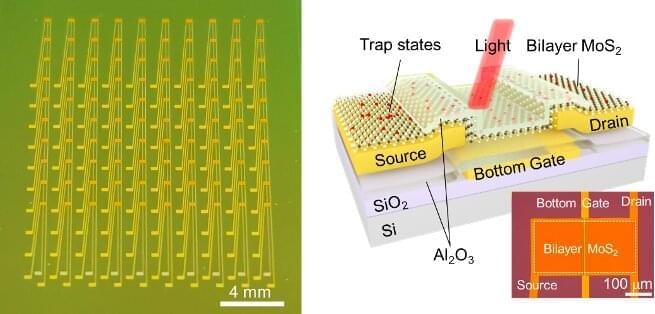

Researchers at Hong Kong Polytechnic University, Peking University, Yonsei University and Fudan University have recently created a new sensor that can collect data in various illumination conditions, employing a mechanism that artificially replicates the functioning of the retina in the human eye. This bio-inspired sensor, presented in a paper published in Nature Electronics, was fabricated using phototransistors made of molybdenum disulfide.

“Our research team started the research on optoelectronic memory five years ago,” Yang Chai, one of the researchers who developed the sensor, told TechXplore. “This emerging device can output light-dependent and history-dependent signals, which enables image integration, weak signal accumulation, spectrum analysis and other complicated image processing functions, integrating the multifunction of sensing, data storage and data processing in a single device.”

U.S. eliminates human controls requirement for fully automated vehicles

WASHINGTON, March 10 (Reuters) — U.S. regulators on Thursday issued final rules eliminating the need for automated vehicle manufacturers to equip fully autonomous vehicles with manual driving controls to meet crash standards.

Automakers and tech companies have faced significant hurdles to deploying automated driving system (ADS) vehicles without human controls because of safety standards written decades ago that assume people are in control.

Last month, General Motors Co (GM.N) and its self-driving technology unit Cruise petitioned the U.S. National Highway Traffic Safety Administration (NHTSA) for permission to build and deploy a self-driving vehicle without human controls like steering wheels or brake pedals.

Amazon and Virginia Tech launch AI and ML research initiative

Amazon and Virginia Tech today announced the establishment of the Amazon – Virginia Tech Initiative for Efficient and Robust Machine Learning.

The initiative will provide an opportunity for doctoral students in the College of Engineering who are conducting AI and ML research to apply for Amazon fellowships, and it will support research efforts led by Virginia Tech faculty members. Under the initiative, Virginia Tech will host an annual public research symposium to share knowledge with the machine learning and related research communities. In collaboration with Amazon, Virginia Tech will also co-host two annual workshops, as well as training and recruiting events for Virginia Tech students.

“This initiative’s emphasis will be on efficient and robust machine learning, such as ensuring algorithms and models are resistant to errors and adversaries,” said Naren Ramakrishnan, the director of the Sanghani Center and the Thomas L. Phillips Professor of Engineering. “We’re pleased to continue our work with Amazon and expand machine learning research capabilities that could address worldwide industry-focused problems.”

Army Special Operations Forces use Project Origin systems in latest Soldier experiment

DUGWAY, Utah — Army Green Berets from the 1st Special Forces Group conducted two weeks of hands-on experimentation with Project Origin Unmanned Systems at Dugway Proving Ground. Engineers from the U.S. Army DEVCOM Ground Vehicle Systems Center were on site to collect data on how these elite Soldiers utilized the systems and what technology and behaviors are desired.

Project Origin vehicles are the evolution of multiple Soldier Operational Experiments. This GVSC-led rapid prototyping effort allows the Army to conduct technology and autonomous behavior integration for follow-on assessments with Soldiers in order to better understand what Soldiers need from unmanned systems.

For the two-week experiment, Soldiers with the 1st Special Forces Group attended familiarization and new equipment training in order to develop Standard Operating Procedures for Robotic Combat Vehicles. The unit utilized these SOPs to conduct numerous mission-oriented exercises including multiple live-fire missions during the day and night.

Stepping Into the Future

The ‘Stepping Into the Future’ conference is coming up soon, on April 23-24. It’s online and it’s free (via Zoom). It will be fun and exciting, and I hope you can all make it. Many of the synopses of upcoming talks are already online (linked from the agenda), so check them out.


We are in the midst of a technological avalanche. To the surprise of many, AI has made the impossible possible. In a rapidly changing world, maintaining and expanding our capacity to innovate is essential.

Posthuman Mimesis, Keynote I: Cyborg Experiments (Kevin Warwick)

http://www.homomimeticus.eu/

Part of the ERC-funded project Homo Mimeticus, the Posthuman Mimesis conference (KU Leuven, May 2021) promoted a mimetic turn in posthuman studies. In the first keynote lecture, Prof. Kevin Warwick (Coventry University) argued that our future will be as cyborgs: part human, part technology. He uses his own experiments to explain how implant and electrode technology can be employed to create cyborgs: to give robots biological brains, to enable human enhancement, and to diminish the effects of neural illnesses. In all cases the end result is to increase the abilities of the recipients. He indicates a number of areas in which such technology has already had a profound effect, a key element being the need for an interface linking a biological brain directly with computer technology. The lecture also looks at future concepts of being, which for posthumans may involve a "click and play" body philosophy, and considers new, much more powerful forms of communication.

HOM Videos is part of an ERC-funded project titled Homo Mimeticus: Theory and Criticism, which has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement n°716181).

Follow HOM on Twitter: https://twitter.com/HOM_Project

Facebook: https://www.facebook.com/HOMprojectERC