UK-based AI chipmaker Graphcore has announced a project called The Good Computer. This will be capable of handling neural network models with 500 trillion parameters – large enough to enable what the company calls ‘ultra-intelligence’.
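For a rough sense of what 500 trillion parameters means in storage terms, the sketch below multiplies the parameter count by a bytes-per-parameter figure. The 16-bit and 32-bit precisions and the helper name `weight_storage_petabytes` are assumptions for illustration; Graphcore's announcement, as summarized here, does not state a numeric format.

```python
PARAMS = 500e12  # 500 trillion parameters, as stated in the announcement

def weight_storage_petabytes(n_params, bytes_per_param):
    """Storage needed for the raw weights alone, in petabytes (10**15 bytes)."""
    return n_params * bytes_per_param / 1e15

# Assumed precisions; the real format used is not given in the text.
for label, size in [("16-bit", 2), ("32-bit", 4)]:
    print(f"{label}: {weight_storage_petabytes(PARAMS, size):.1f} PB")
```

This counts only the weights themselves and ignores optimizer state, activations, and any compression.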

A team from the Max Planck Institute for Intelligent Systems in Germany has developed a novel thumb-shaped touch sensor capable of resolving the force of a contact, as well as its direction, over the whole surface of the structure. Intended for dexterous manipulation systems, the sensor is constructed from easily sourced components, so it should scale up to larger assemblies without breaking the bank. The first step is to place a soft and compliant outer skin over a rigid metallic skeleton, which is then illuminated internally using structured light techniques. From there, machine learning can be used to estimate the shear and normal force components of the contact with the skin, over the entire surface, by observing how the internal envelope distorts the structured illumination.

The novelty here is the way they combine photometric stereo processing with other structured light techniques, using only a single camera. The camera image is fed straight into a pre-trained machine learning system (details on this part of the system are unfortunately a bit scarce) which directly outputs an estimate of the contact shape and force distribution, with spatial accuracy reported to be better than 1 mm and force resolution down to 30 millinewtons. By directly estimating normal and shear force components, the direction of the contact could be resolved to 5 degrees. The system is so sensitive that it can reportedly detect its own posture by observing the deformation of the skin due to its own weight alone!
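To make the geometry concrete, here is a minimal Python sketch of how a contact's magnitude and direction follow from its normal and shear components. The helper `contact_direction` and the two-axis shear representation are illustrative assumptions, not details of the researchers' system, which estimates these quantities with a learned model.

```python
import math

def contact_direction(normal, shear_x, shear_y):
    """Return (magnitude, tilt_deg, azimuth_deg) for a contact force.

    Hypothetical helper: `normal` is the force component perpendicular to
    the skin; `shear_x` and `shear_y` are the tangential components.
    All inputs are in newtons.
    """
    shear = math.hypot(shear_x, shear_y)   # total tangential force
    magnitude = math.hypot(normal, shear)  # total contact force
    # Angle between the force vector and the surface normal.
    tilt = math.degrees(math.atan2(shear, normal))
    # Direction of the shear component within the surface plane.
    azimuth = math.degrees(math.atan2(shear_y, shear_x))
    return magnitude, tilt, azimuth

mag, tilt, az = contact_direction(normal=0.3, shear_x=0.3, shear_y=0.0)
print(f"{mag:.3f} N, tilt {tilt:.1f} deg, azimuth {az:.1f} deg")
```

With equal normal and shear components, the force is tilted 45 degrees away from the surface normal, which gives a feel for what a 5 degree angular resolution means.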

We’ve not covered all that many optical sensing projects, but here’s one using a linear CIS sensor to turn any TV into a touch screen. And whilst we’re talking about using cameras as sensors, here’s a neat way to use optical fibers to read multiple light-gates with a single camera and OpenCV.

Physicists have discovered a new way to coat soft robots in materials that allow them to move and function in a more purposeful way. The research, led by the UK’s University of Bath, is described today in Science Advances.

Authors of the study believe their breakthrough modeling on ‘active matter’ could mark a turning point in the design of robots. With further development of the concept, it may be possible to determine the shape, movement and behavior of a soft solid not by its natural elasticity but by human-controlled activity on its surface.

The surface of an ordinary soft material always shrinks into a sphere. Think of the way water beads into droplets: the beading occurs because the surface of liquids and other soft materials naturally contracts into the smallest surface area possible, i.e. a sphere. But active matter can be designed to work against this tendency. An example of this in action would be a rubber ball that’s wrapped in a layer of nano-robots, where the robots are programmed to work in unison to distort the ball into a new, pre-determined shape (say, a star).

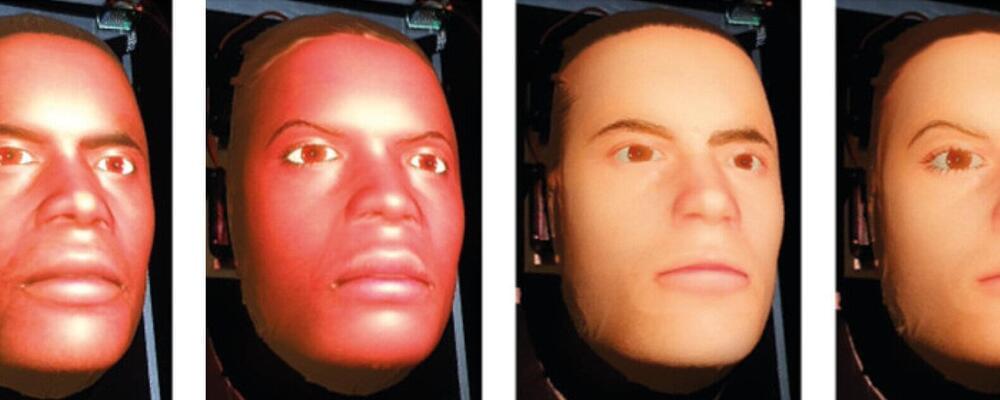

A new approach to producing realistic expressions of pain on robotic patients could help to reduce error and bias during physical examination.

A team led by researchers at Imperial College London has developed a way to generate more accurate expressions of pain on the face of medical training robots during physical examination of painful areas.

Findings, published today in Scientific Reports, suggest this could help teach trainee doctors to use clues hidden in patient facial expressions to minimize the force necessary for physical examinations.

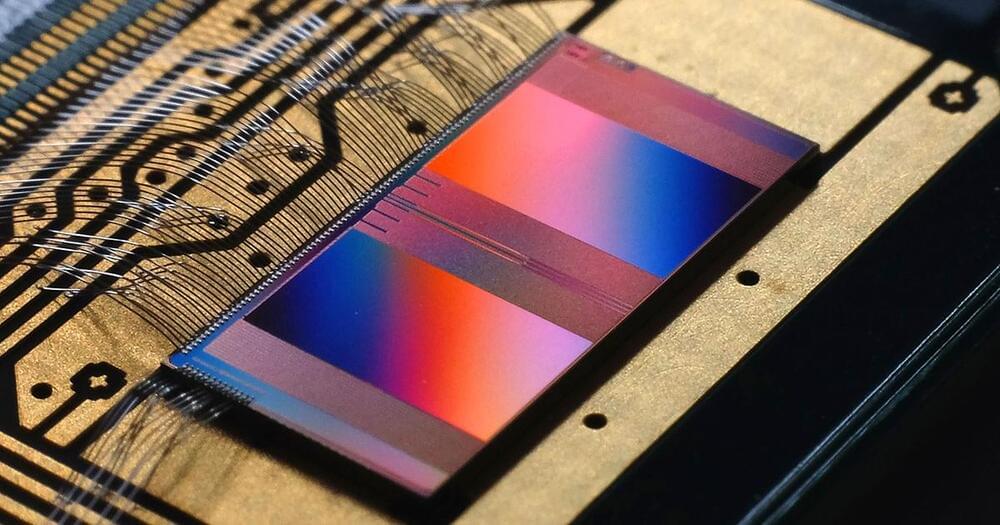

A Stanford University-led research team has set a new Guinness World Record for the fastest DNA sequencing technique using AI computing to accelerate workflow speed.

The research, led by Dr Euan Ashley, professor of medicine, genetics and biomedical data science at Stanford School of Medicine, in collaboration with Nvidia, Oxford Nanopore Technologies, Google, Baylor College of Medicine, and the University of California, achieved sequencing in just five hours and two minutes.

The study, published in The New England Journal of Medicine, involved speeding up every step of the genome sequencing workflow by relying on new technology. This included using nanopore sequencing on Oxford Nanopore’s PromethION Flow Cells to generate more than 100 gigabases of data per hour, and Nvidia GPUs on Google Cloud to speed up the base calling and variant calling processes.
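As a rough sense of scale, the snippet below converts the reported data rate into average genome coverage per hour. The genome size of about 3.1 gigabases is a commonly cited approximation for the human genome and is an assumption here, not a figure from the study; the names are illustrative.

```python
HUMAN_GENOME_GB = 3.1   # assumed approximate human genome size, in gigabases
RATE_GB_PER_HOUR = 100  # lower bound from "more than 100 gigabases per hour"

def coverage_per_hour(rate_gb, genome_gb=HUMAN_GENOME_GB):
    """Average number of times each base is read per hour of sequencing."""
    return rate_gb / genome_gb

print(f"about {coverage_per_hour(RATE_GB_PER_HOUR):.0f}x coverage per hour")
```

Under that assumption, each hour of sequencing reads the genome roughly 32 times over on average, before any allowance for read quality or uneven coverage.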

Artificial intelligence is making its mark on most major sectors these days. The top AI startups are creating a buzz in the market and have the potential to revolutionize the world. There are thousands of AI startups today, and this blog shares some of the most promising ones making waves in the AI field.

Before introducing these startups, let’s look at the fields AI is contributing to. Listed below are some of the major fields where AI is contributing and bringing change.

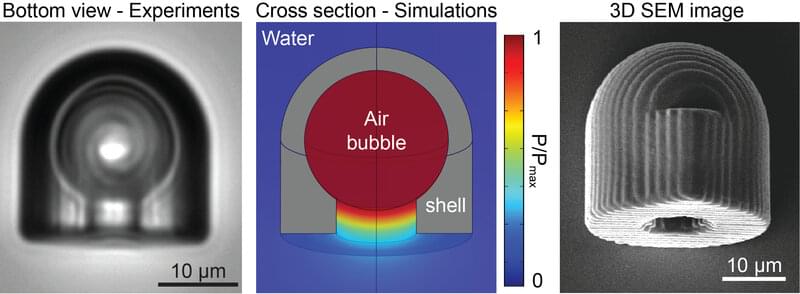

Researchers at the Max Planck Institute for Intelligent Systems in Stuttgart have designed and fabricated an untethered microrobot that can slip along either a flat or curved surface in a liquid when exposed to ultrasound waves. Its propulsion force is two to three orders of magnitude stronger than the propulsion force of natural microorganisms such as bacteria or algae. Additionally, it can transport cargo while swimming. The acoustically propelled robot hence has significant potential to revolutionize the future minimally invasive treatment of patients.

Stuttgart - Researchers at the Max Planck Institute for Intelligent Systems (MPI-IS) in Stuttgart developed a bullet-shaped, synthetic miniature robot with a diameter of 25 micrometers, which is acoustically propelled forward, making it a speeding bullet in the truest sense of the word. No actuated microrobot of this size, smaller than the diameter of a human hair, has reached this speed before. Its smart design is so efficient that it even outperforms the swimming capabilities of natural microorganisms.

The scientists designed the 3D-printed polymer microrobot with a spherical cavity and a small tube-like nozzle towards the bottom (see figure 1). Surrounded by liquid such as water, the cavity traps a spherical air bubble. Once the robot is exposed to acoustic waves of around 330 kHz, the air bubble pulsates, pushing the liquid inside the tube towards the back end of the microrobot. The liquid’s movement then propels the bullet forward quite vigorously at up to 90 body lengths per second. That is a thrust force two to three orders of magnitude stronger than that of natural microorganisms such as algae or bacteria. Both are among the most efficient microswimmers in nature, optimized by evolution.
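To put "90 body lengths per second" into absolute terms, the sketch below converts a relative speed into micrometers per second. The article gives the robot's diameter (25 micrometers) but not its length, so taking the body length as 25 micrometers is purely an illustrative assumption, as is the helper name `speed_um_per_s`.

```python
def speed_um_per_s(body_lengths_per_s, body_length_um):
    """Convert a speed in body lengths per second to micrometers per second."""
    return body_lengths_per_s * body_length_um

# Assumption: body length taken as 25 um (the reported diameter). The actual
# length is not stated, so the result is only indicative.
speed = speed_um_per_s(90, 25.0)
print(f"{speed:.0f} um/s = {speed / 1000:.2f} mm/s")
```

Under that assumption the top speed is on the order of a couple of millimeters per second; a longer body would scale the figure proportionally.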