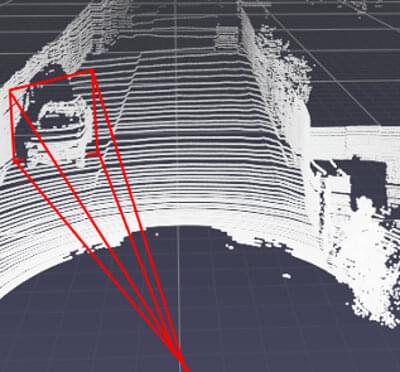

Researchers at Duke University have demonstrated the first attack strategy that can, without being detected, fool industry-standard autonomous vehicle sensors into believing nearby objects are closer (or farther away) than they appear.

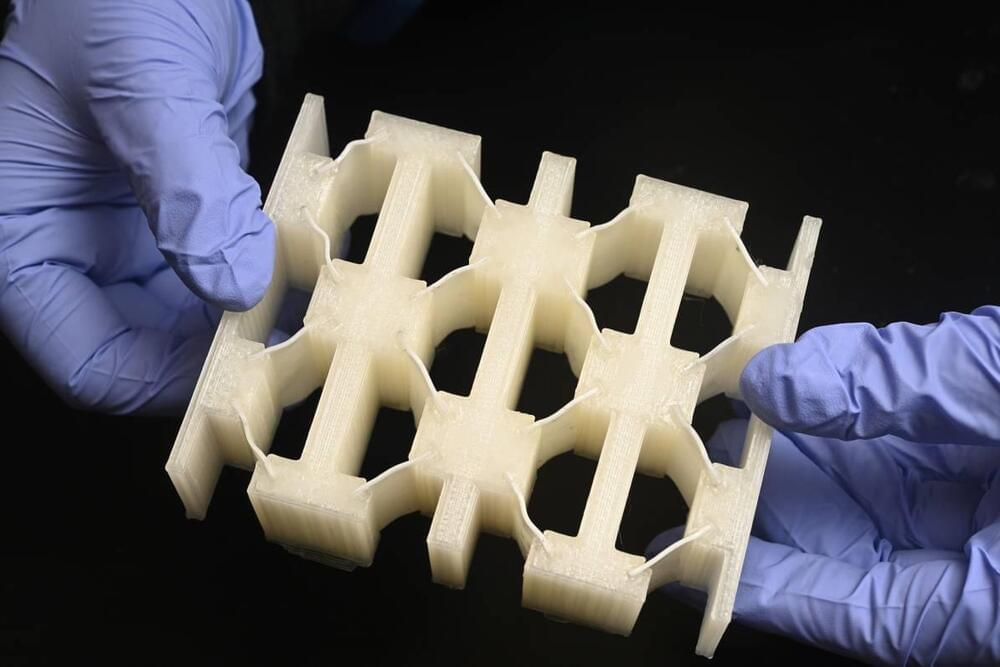

The research suggests that adding optical 3D capabilities or the ability to share data with nearby cars may be necessary to fully protect autonomous cars from attacks.

The results will be presented Aug. 10–12 at the 2022 USENIX Security Symposium, a top venue in the field.