Is neuromorphic computing the only way we can actually achieve general artificial intelligence?

Very likely yes, according to Gordon Wilson, CEO of Rain Neuromorphics, who is trying to recreate the human brain in hardware and “give machines all of the capabilities that we recognize in ourselves.”

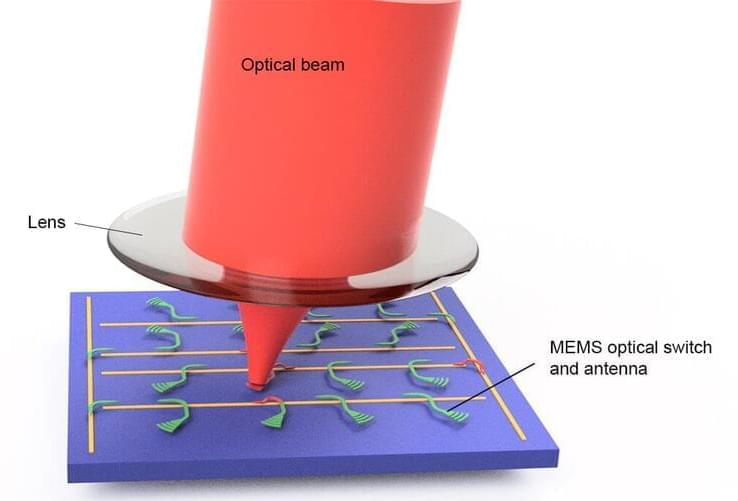

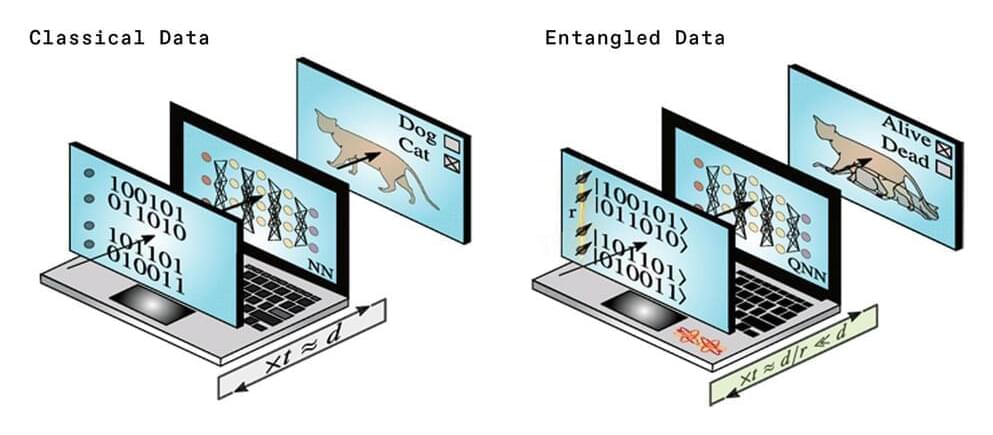

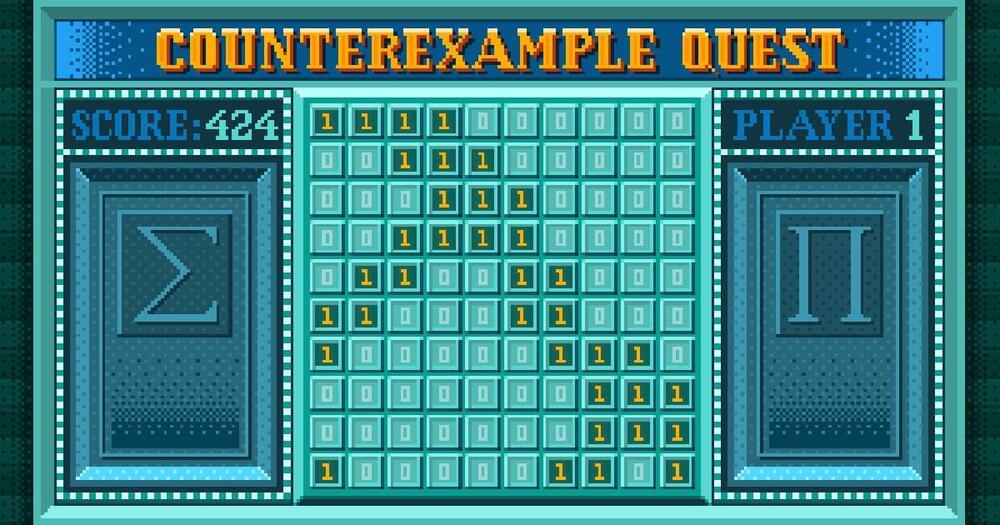

Rain Neuromorphics has built an analog neuromorphic chip. In other words, it does not simulate a neural network: it is one, built in analog rather than digital circuitry. It’s a physical collection of neurons and synapses, as opposed to an abstraction of them. That means none of the ones and zeroes of traditional computing, but rather voltages and currents that represent the mathematical operations you want to perform.
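To make the idea of voltages and currents standing in for math more concrete, here is a minimal Python sketch. It assumes a resistive crossbar, a common textbook analog layout in which each synapse is a conductance, each input is a voltage, and the current collected on an output wire is the weighted sum. That layout is an assumption for illustration only: nothing here describes Rain's actual circuit, and the function name and numbers are hypothetical.

```python
# Hypothetical illustration of analog multiply-accumulate in a resistive
# crossbar. This is NOT Rain Neuromorphics' design; it only shows how
# voltages and currents can represent a neural network's arithmetic.

def crossbar_output_currents(conductances, input_voltages):
    """Return the current (amps) collected on each output wire.

    conductances[i][j] is the conductance (siemens) linking input wire j
    to output wire i; it plays the role of a synaptic weight.
    input_voltages[j] is the voltage (volts) applied to input wire j.
    """
    currents = []
    for row in conductances:
        # Ohm's law gives each device's current (I = G * V), and
        # Kirchhoff's current law sums those currents on the shared wire.
        currents.append(sum(g * v for g, v in zip(row, input_voltages)))
    return currents


weights = [[0.5, 0.25], [1.0, 2.0]]   # made-up conductances
voltages = [2.0, 4.0]                 # made-up input voltages
print(crossbar_output_currents(weights, voltages))  # [2.0, 10.0]
```

In a physical circuit of this kind the loop never runs: the sums appear as currents as soon as the voltages are applied, which is the sense in which the hardware is the computation rather than a simulation of it.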

Right now the chip is 1,000 times more energy efficient than existing neural networks, Wilson says, because it doesn’t have to spend computing cycles simulating the brain. The circuit is the neural network, which according to Wilson leads to extraordinary gains in both speed and power consumption.

Links:

Rain Neuromorphics: https://rain.ai

Episode sponsor: SMRT1 https://smrt1.ca/

Support TechFirst with $SMRT coins: https://rally.io/creator/SMRT/