The merger would bring Musk’s rockets, Starlink satellites, the X social media platform and Grok AI chatbot under one roof and could boost his efforts to launch data centers into orbit.

A landmark study suggests that this daily habit may reshape the way your mind activates.

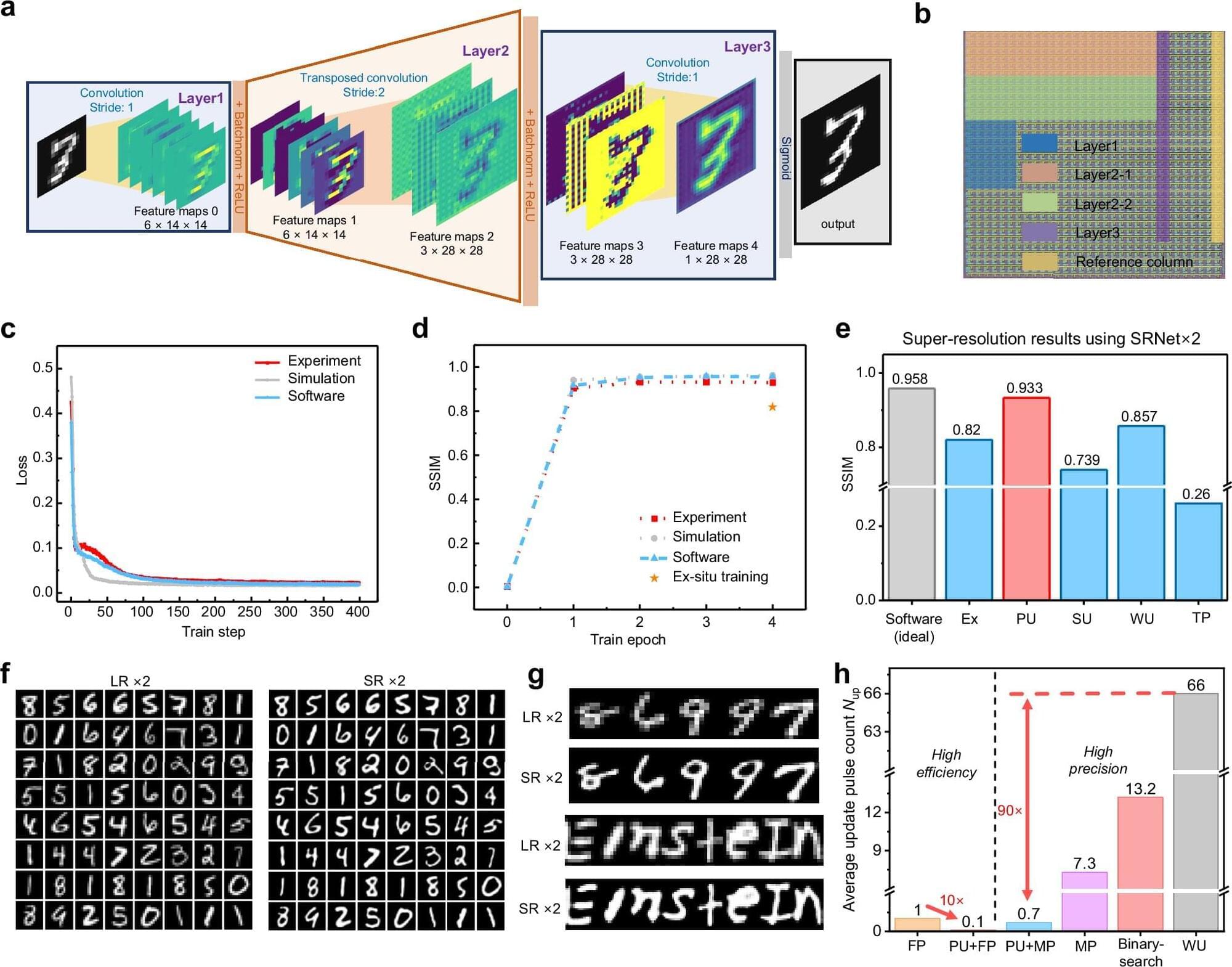

In a Nature Communications study, researchers from China have developed an error-aware probabilistic update (EaPU) method that aligns memristor hardware’s noisy updates with neural network training, slashing energy use by nearly six orders of magnitude versus GPUs while boosting accuracy on vision tasks. The study validates EaPU on 180 nm memristor arrays and large-scale simulations.

Memristors are devices that combine memory and processing, much like synapses in the brain. Analog in-memory computing with memristors promises to overcome digital chips’ energy bottlenecks by performing matrix operations directly through physical laws.

Inference on these systems works well, as shown by IBM and Stanford chips. But training deep neural networks hits a snag: “writing” errors when setting memristor weights.
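To make the write-error problem concrete, here is a minimal sketch of how a memristor crossbar computes a matrix-vector product and how imprecise writes disturb it. It assumes the standard idealization that each device obeys Ohm's law and each column sums currents by Kirchhoff's current law. The Gaussian write-noise model, the function names and the numbers are illustrative assumptions, not details from the study.

```python
import random

def crossbar_mvm(conductances, voltages):
    """Ideal crossbar read: column current I_j = sum_i G[i][j] * V_i.

    Each device contributes G * V (Ohm's law) and each column wire
    sums those currents (Kirchhoff's current law).
    """
    n_rows = len(voltages)
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]

def noisy_write(target, sigma, rng):
    """Hypothetical write model: every programmed conductance lands at
    its target value plus Gaussian noise with standard deviation sigma."""
    return [[g + rng.gauss(0.0, sigma) for g in row] for row in target]

rng = random.Random(0)            # fixed seed so the run is repeatable
target = [[1.0, 2.0], [3.0, 4.0]]  # intended weights (arbitrary units)
voltages = [0.5, 0.25]             # input vector applied to the rows

ideal = crossbar_mvm(target, voltages)
noisy = crossbar_mvm(noisy_write(target, 0.05, rng), voltages)

print("ideal column currents:", ideal)
print("with write noise:     ", noisy)
```

During inference the weights are written once, so the error is fixed. During training every weight update is another noisy write, which is why small gradient steps can be swamped by the device's write error.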

Many advanced electronic devices – such as OLEDs, batteries, solar cells, and transistors – rely on complex multilayer architectures composed of multiple materials. Optimizing device performance, stability, and efficiency requires precise control over layer composition and arrangement, yet experimental exploration of new designs is costly and time-intensive. Although physics-based simulations offer insight into individual materials, they are often impractical for full device architectures due to computational expense and methodological limitations.

Schrödinger has developed a machine learning (ML) framework that enables users to predict key performance metrics of multilayered electronic devices from simple, intuitive descriptions of their architecture and operating conditions. This approach integrates automated ML workflows with physics-based simulations in the Schrödinger Materials Science suite, leveraging physics-based simulation outputs to improve model accuracy and predictive power. This advancement provides a scalable solution for rapidly exploring novel device design spaces – enabling targeted evaluations such as modifying layer composition, adding or removing layers, and adjusting layer dimensions or morphology. Users can efficiently predict device performance and uncover interpretable relationships between functionality, layer architecture, and materials chemistry. While this webinar focuses on single-unit and tandem OLEDs, the approach is readily adaptable to a wide range of electronic devices.

By Chuck Brooks, president of Brooks Consulting International

In 2026, government technological innovation has reached a key turning point. After years of modernization plans, pilot projects and gradual adoption, government leaders are increasingly incorporating artificial intelligence and quantum technologies directly into mission-critical capabilities. These technologies are becoming essential infrastructure for economic competitiveness, national security and scientific advancement rather than mere scholarly curiosities.

We are seeing a deliberate shift in the federal landscape from isolated testing to the planned implementation of emerging technologies across the whole of government. This evolution reflects not only technological momentum but also policy leadership, public-private collaboration and expanded industrial capability.

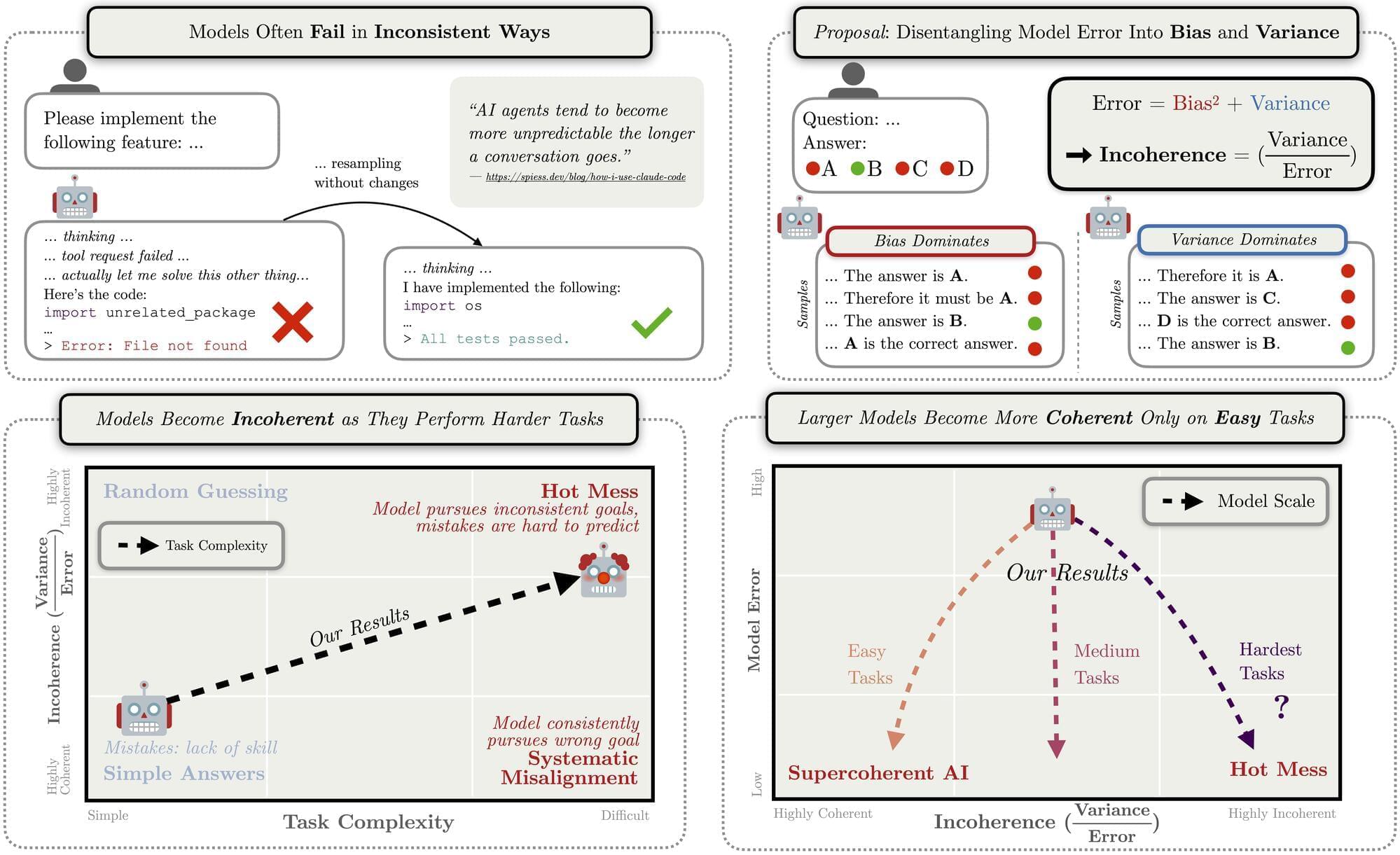

When AI systems fail, will they fail by systematically pursuing the wrong goals, or by being a hot mess? We decompose the errors of frontier reasoning models into bias (systematic) and variance (incoherent) components and find that, as tasks get harder and reasoning gets longer, model failures become increasingly dominated by incoherence rather than systematic misalignment. This suggests that future AI failures may look more like industrial accidents than coherent pursuit of a goal we did not train them to pursue.
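The bias and variance split can be illustrated with the classical squared-error decomposition, in which mean squared error equals squared bias plus variance. The sketch below is only that textbook identity applied to repeated numeric answers from a model. The authors' exact metric may differ, and the function name, the sample values and the "incoherent share" ratio are hypothetical.

```python
from statistics import fmean

def bias_variance(samples, target):
    """Split mean squared error into squared bias and variance.

    samples: repeated numeric answers to the same question
    target:  the correct answer
    Returns (bias_sq, variance, mse), where mse == bias_sq + variance.
    """
    mean = fmean(samples)
    bias_sq = (mean - target) ** 2                       # systematic part
    variance = fmean([(s - mean) ** 2 for s in samples])  # incoherent part
    mse = fmean([(s - target) ** 2 for s in samples])
    return bias_sq, variance, mse

# Hypothetical model A: always gives the same wrong answer (pure bias).
systematic = bias_variance([7.0, 7.0, 7.0, 7.0], target=5.0)

# Hypothetical model B: right on average, but scattered (pure variance).
incoherent = bias_variance([3.0, 7.0, 4.0, 6.0], target=5.0)

for name, (b, v, mse) in [("systematic", systematic), ("incoherent", incoherent)]:
    share = v / mse if mse else 0.0  # fraction of error due to variance
    print(f"{name}: bias^2={b}, variance={v}, mse={mse}, incoherent share={share}")
```

In this toy case the first model's error is entirely bias and the second's is entirely variance, which corresponds to the distinction between coherently pursuing the wrong answer and being a hot mess.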